ChatGPT has triggered enthusiasm and fear since its launch in November 2022. Its apparent mastery of the semantics and syntax—but not yet the content—of different languages surprises users expecting an ordinary chatbot. Some universities immediately banned students from using ChatGPT for essay writing since it outperforms most human students. Newspaper op-eds announced the end of education—not only because students can use it to do homework, but also because ChatGPT can provide more information than many teachers. Artificial intelligence seems to have conquered another domain that, according to classical philosophy, defines human nature: logos. Panic grows with this further loss of existential territory. The apocalyptic imagination of human history intensifies as climate collapse and robot revolt evoke end times.

The end times were no stranger to the moderns. Indeed, in Karl Löwith’s 1949 book Meaning in History, the philosopher showed that the modern philosophy of history, from Hegel to Burckhardt, was a secularization of eschatology.1 The telos of history is what makes the transcendent immanent, whether the second coming of Jesus Christ or simply the becoming of Homo deus. This biblical or Abrahamic imagination of time offers many profound reflections on human existence more generally, yet also stands in the way of understanding our future.

In the 1960s, Hans Blumenberg argued against Löwith’s thesis of secularization, as well as Carl Schmitt’s claim that “all significant concepts of the modern theory of the state are secularized theological concepts.”2 Blumenberg contended that the understanding of the modern as the secularization or transposition of theological concepts undermines the legitimacy of the modern; a certain significance of modernity remains irreducible to the secularization of theology.3 Likewise, the novelty and significance of artificial intelligence is buried by the eschatological imaginary, by modern stereotypes of machines and industrial propaganda.

The Crusaders’ siege of Jerusalem in 1099 is depicted in a miniature from Descriptio Terrae Sanctae (Description of the Holy Land) by Burchard of Mount Sion, 13th century.

This is not to say that we should deny climate change and resist artificial intelligence. To the contrary, fighting against climate change should be our top priority, as should be developing a productive relation between humans and technology. But to do this we must develop an adequate understanding of artificial intelligence, beyond a merely technical one. The invention of the train, automobile, and later the airplane also triggered great fear, both psychologically and economically, yet today few fear that these machines will slip out of our control. Instead, cars and airplanes are part of daily life, often signifying excitement and freedom. So why is there such fear over artificial intelligence?

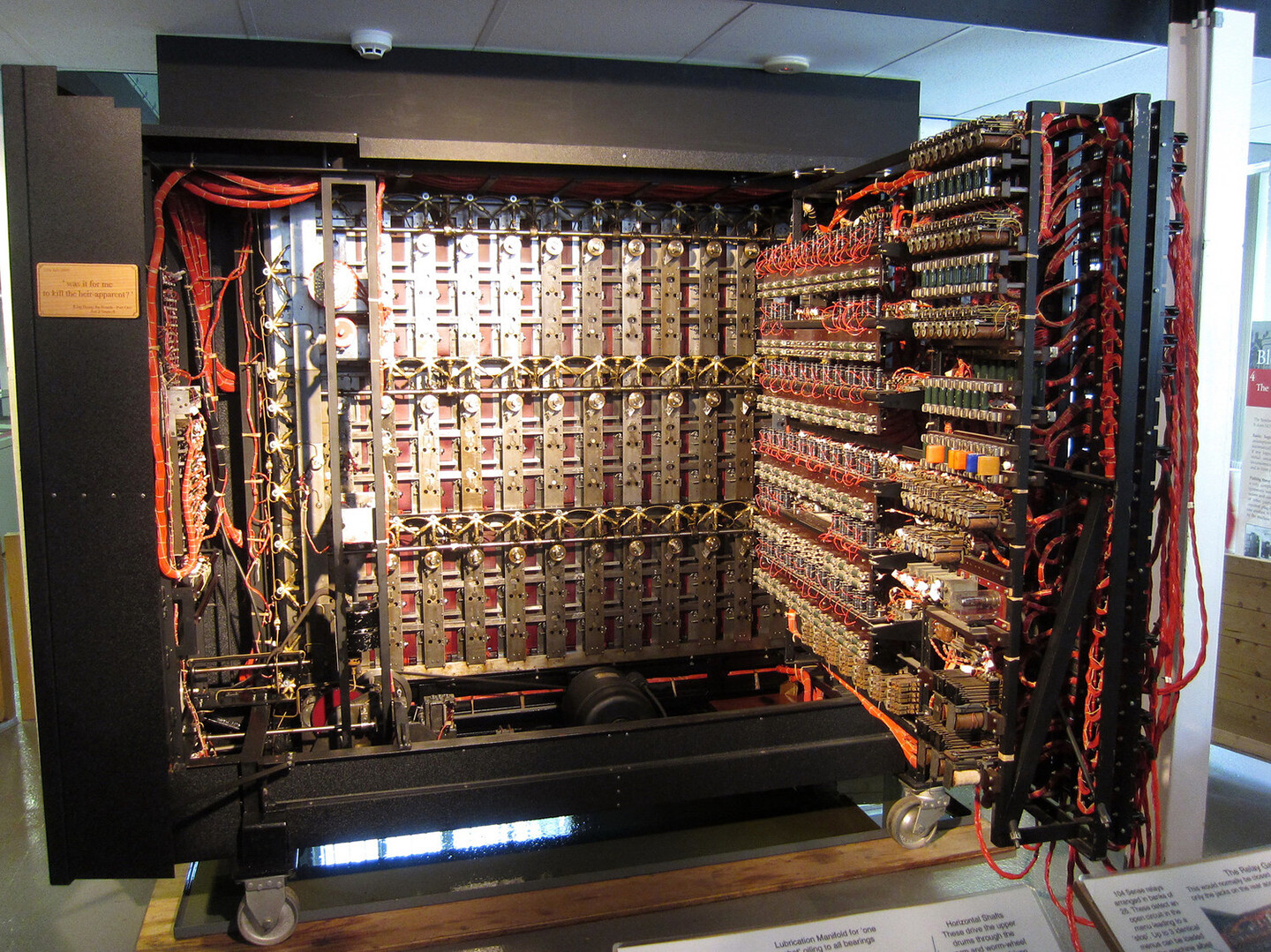

To understand this new wave of technology with ChatGPT at the forefront, we can start with John Searle’s famous Chinese Room thought experiment from 1980, which conceals the most annoying stereotype of computational machines in the guise of logical reasoning. In this thought experiment, Searle imagined himself alone in a room, tasked with following instructions according to a symbol-processing program written in English, in order to respond to inputs written in Chinese and slipped under the door. Searle does not understand Chinese in the experiment: “I know no Chinese, either written or spoken, and … I’m not even confident that I could recognize Chinese writing as Chinese writing distinct from, say, Japanese writing or meaningless squiggles.”4 However, he argues that with the right set of instructions and rules, he could respond in a way that would lead the person outside of the room to believe that he understood Chinese. Simply put, Searle asserts that just because a machine can follow instructions for producing responses in Chinese, it doesn’t mean the machine understands Chinese, and such understanding is the hallmark of so-called strong AI (in contrast to weak AI). Understanding means first of all understanding semantics. While syntax can be hard-coded, semantic meaning changes with situation and circumstance. Searle’s Chinese Room applies to a computer that still functions like an eighteenth-century machine such as the Digesting Duck or the Mechanical Turk. However, this is not the type of machine we are dealing with today. Noam Chomsky, Ian Roberts, and Jeffrey Watumull were right in claiming that ChatGPT is “a lumbering statistical engine for pattern matching.”5 However, we must recognize that, while patterns are a primary characteristic of information, ChatGPT is doing more than just pattern matching.

The combat information center aboard a warship—proposed as a real-life analog to a Chinese room, 1988. License: Public Domain.

Such a syntactical critique is based on a mechanistic epistemology that assumes linear causality—a cause followed by an effect. One can reverse this cause-and-effect process to reach the ultimate cause: the prime mover, the default of the first cause, and the ultimate fate of all linear reasoning. In contrast to linear causality and mechanist philosophy, the eighteenth century saw the rise of philosophical thinking based on the organism, with Immanuel Kant’s Critique of Judgment as one of the most significant contributions. As I have claimed before, Kant imposed a new condition of philosophizing, namely that philosophy has to become organic; in other words, the organic marked a new beginning for philosophical thinking.6 Today it is important to recognize that the condition of philosophizing that Kant established has come to its end after cybernetics.7

Cybernetics, a term coined by Norbert Wiener around 1943, was developed by a group of scientists and engineers who attended the Macy Conferences on cybernetics in the late forties and early fifties. Cybernetics was intended to be a universal science capable of unifying all disciplines—a new adaptation of eighteenth-century encyclopedism, according to Gilbert Simondon.8 Cybernetics uses the concept of feedback to define the operation of a new “cybernetic machine” distinct from the “mechanical machines” of the seventeenth century. Wiener claimed in his seminal 1948 book that cybernetics had overcome the opposition between mechanism and vitalism represented by Newton and Bergson, because cybernetic machines were based on a new nonlinear form of causality, or recursivity, instead of on a fragile and ineffective linear causality—fragile because it doesn’t know how to regulate its own mode of operation. Imagine a mechanical watch: when one of the gears fails, the whole watch stops. With this kind of linear mechanism, no exponential increase in the speed of its reasoning can happen without a radical upgrade of hardware.
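The contrast between linear causality and feedback can be made concrete with a toy simulation. What follows is only a minimal sketch under stated assumptions, not a model of any historical cybernetic machine: the function names, the `gain` parameter, and the single "gust" of disturbance are all hypothetical and chosen for illustration. The linear mechanism executes a plan fixed in advance and never observes its result; the feedback mechanism compares its output with its goal at every step and corrects itself.

```python
def open_loop(target, steps, disturbance):
    """Linear causality: a plan fixed in advance, with no view of the result."""
    state = 0.0
    command = target / steps  # computed once, never revised
    for step in range(steps):
        state += command + disturbance(step)
    return state


def closed_loop(target, steps, disturbance, gain=0.5):
    """Feedback: the output is measured and compared with the goal at every step."""
    state = 0.0
    for step in range(steps):
        error = target - state  # the result returns as a new input
        state += gain * error + disturbance(step)
    return state


def gust(step):
    """A single contingent event (hypothetical): one push at step 5."""
    return -0.3 if step == 5 else 0.0


target = 10.0
open_result = open_loop(target, 40, gust)
closed_result = closed_loop(target, 40, gust)

print(f"open loop ends at   {open_result:.4f}")
print(f"closed loop ends at {closed_result:.4f}")
```

In this sketch the linear mechanism carries the disturbance through to the end and misses its goal by the full amount of the push, while the feedback mechanism absorbs it and converges on the target. This is the sense in which a machine with feedback regulates its own mode of operation.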

If the opposition between mechanism and organism characterizes a grand debate of modern philosophy, determining the direction of its development, then the debate persists today, when so many of the statements discrediting AI and ChatGPT assume that machines are only mechanistic and therefore unable to understand semantic meaning. It would be equally wrong to claim that machines are merely a failed imitation of human understanding when it comes to semantic meaning. Philosopher and cognitive scientist Brian Cantwell Smith has sharply criticized this anthropomorphic thinking, defending a machinic intentionality. For him, even if one finds no human intentionality in a machine, it remains a form of intentionality nonetheless; it is semantic, even if not in the sense of human language.9 Such a separation of anthropomorphic semantics from machine semantics is fundamental for rethinking our relations with machines, yet only as a first step.

Searle’s argument fundamentally ignores the recursive form of calculation performed by today’s machines. One may argue that computer science shouldn’t be conflated with cybernetics, since cybernetics is too overarching a science. However, one can also think about Gödel’s recursive function and its equivalence with the Turing Machine and Alonzo Church’s lambda calculus (a well-known story in the history of computation). The term “recursivity” doesn’t only belong to cybernetics; it also belongs to post-mechanistic thinking. The advent of cybernetics only announced the possibility of realizing this recursive thinking in cybernetic machines.
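The equivalence mentioned above can be glimpsed, though certainly not proven, in a small example. The sketch below defines addition twice: once by recursion on the natural numbers, in the manner of the recursive functions, and once with Church numerals, in the manner of the lambda calculus. All names are hypothetical, and Python stands in here for both formalisms only as a convenience.

```python
def add_recursive(m, n):
    """Addition by recursion on n: add(m, 0) = m; add(m, n + 1) = add(m, n) + 1."""
    if n == 0:
        return m
    return add_recursive(m, n - 1) + 1


# Church numerals: the number n is the function that applies f to x n times.
zero = lambda f: lambda x: x
succ = lambda n: lambda f: lambda x: f(n(f)(x))
add_church = lambda m: lambda n: lambda f: lambda x: m(f)(n(f)(x))


def to_church(k):
    """Build the Church numeral for a non-negative integer k."""
    numeral = zero
    for _ in range(k):
        numeral = succ(numeral)
    return numeral


def to_int(numeral):
    """Read a Church numeral back by counting applications of 'add one'."""
    return numeral(lambda x: x + 1)(0)


recursive_sum = add_recursive(3, 4)
church_sum = to_int(add_church(to_church(3))(to_church(4)))
print(recursive_sum, church_sum)
```

Both definitions compute the same function by different means: the first calls itself on a smaller argument, the second composes functions that return to their own results. The general equivalence of these formalisms is a theorem of computability theory; the example shows only a single instance of it.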

The “intelligence” found in machines today is a reflective form of operation, as both Gotthard Günther and Gilbert Simondon rightly observed. For Günther, cybernetics is the realization of Hegel’s logic, while for Simondon, it was only in the Critique of Judgment’s elaboration on reflective judgment that Kant addressed cybernetics.10 “Reflective thinking” is usually associated with human beings and not with machines, because machines only execute instructions without reflecting on the instructions themselves. But since the introduction of cybernetics in the 1940s, the term can also describe the feedback mechanism of machines. Reflective thinking in machines holds an astonishing power over human beings unprepared to accept its existence, even as a preliminary and basic form of reflection—being purely formal and therefore insufficient for dealing with content. Here we can understand how ChatGPT can be “not particularly innovative” and “nothing revolutionary” for computer scientists such as Yann LeCun.11 It is only by dealing with content that machines can move towards what has been called the technological singularity. So far, the singularity remains a myth—a misleading and also harmful one when presented as the near future. Even if we associate the singularity with theological meaning or eschatology, it doesn’t contribute anything to the understanding of artificial intelligence or its future.

Recursive machines, and not linear machines, are key to understanding the development and evolution of artificial intelligence. How will human beings confront this new type of machine? Simondon raised a similar question by asking: When technology becomes reflexive, what will be the role of philosophy? Brian Cantwell Smith has argued that AI is limited to the capacity of reckoning and not judgment, yet it is hard to say how much longer such a distinction can hold.12 Maybe too much intellectual effort has been lost to making distinctions between machines and humans.

Human beings were not upset when domesticated animals such as horses and cows replaced them as providers of energy. They instead welcomed the relief from repetitive and tiresome labor. The same happened when steam engines replaced animals; they were even more efficient and required even less human attention. Simondon, in his 1958 book On the Mode of Existence of Technical Objects, rightly observed that the replacement of thermodynamic machines by information machines marks a critical moment: human displacement from the center of production. Artisans before the industrial age were able to create an associated milieu in which the artisan’s body and intelligence compensated for their simple tools’ lack of autonomy. In the era of information machines, or cybernetic machines, the machine itself becomes the organizer of information and the human is no longer at the center, even if they still consider themself the commander of machines and organizer of information. This is the moment when the human suffers from their own stereotypical beliefs about machines: they falsely identify themself as the center, and in so doing, they face constant frustration and a panicked search for identity.

Luke Skywalker tests his prosthetic hand, the L-hand 980.

The reality residing in the machine is alienated from the reality in which the human operates. The unavoidable process of technological evolution is driven by the introduction of nonlinear causality, allowing machines to deal with contingency. A learning machine is one that can discern contingent events such as noise and failure. It can distinguish unorganized inputs from necessary ones. And by interpreting contingent events, the learning machine improves its model of decision-making. But even here the machine needs humans to distinguish right decisions from wrong ones in order to continue improving. In developing countries, a new type of cheap labor employs humans to tell machines whether results are correct, be they facial recognition scans or ChatGPT responses. This new form of labor, which exploits workers who toil invisibly behind the machines we interact with, is often overlooked by very general criticisms of capitalism that lament insufficient automation. This is the weakness of today’s Marxian critique of technology.

Simondon raised a key question in On the Mode of Existence of Technical Objects: When the human ceases to be the organizer of information, what role can they play? Can the human be freed from labor? As Hannah Arendt suspected in The Human Condition, published the same year as Simondon’s book, such a liberation only leads to consumerism, leaving the artist as the “last man” able to create.13 Consumerism here becomes the limit of human action. Arendt sees machines from the perspective of human reality, as replacing Homo faber, while Simondon shows that an inability to deal with and integrate the technical reality of machines will foster an unfortunate antagonism between human and machine, culture and technics. This antagonism is not only the source of fear; it is also based on a very problematic understanding of technology shaped by industrial propaganda and consumerism. It is from this negativity that a primitivist humanism grew, identifying love as the last resort of the human.

More than sixty years have passed since Simondon raised these questions, and they remain unresolved. Worse, they have been obscured by technological optimism as well as cultural pessimism, with the former promoting relentless acceleration and the latter serving as psychotherapy. Both of these tendencies originate from an anthropomorphic understanding of machines that says they are supposed to mimic human beings. (Simondon fiercely criticized cybernetics for holding this view, though this was not entirely justified.) Today the most ironic expression of this mimicry view is in the domain of art, in attempts to prove that a machine can do the work of a Bach or a Picasso. On one hand, the panicked human repeatedly asks what kinds of jobs can avoid being replaced by machines; on the other, the tech industry consciously works to replace human intervention with machine automation. Humans live within the industry’s self-fulfilling prophecy of replacement. And indeed, the industry constantly reproduces the discourse of replacement by announcing the end of this or that job as if a revolution had arrived, while the social structure and our social imaginary remain unchanged.

The discourse of replacement hasn’t been transformed into the discourse of liberation in capitalist societies, nor in so-called communist ones. To be fair, some accelerationists realize this and have sought to revive Marx’s vision of full automation. If high school physics were more popular, we would have a more nuanced concept of acceleration, because acceleration doesn’t simply mean an increase in speed but rather a change in velocity, which includes a change in direction. Instead of elaborating a vision of the future in which artificial intelligence serves a prosthetic function, the dominant discourse treats it merely as challenging human intelligence and replacing intellectual labor. Today’s humans fail to dream. If the dream of flight led to the invention of the airplane, now we have intensifying nightmares of machines. Ultimately, both techno-optimism (in the form of transhumanism) and cultural pessimism meet in their projection of an apocalyptic end.

Human creativity must take a radically different direction and elevate human-machine relations above the economic theory of replacement and the fantasies of interactivity. It must move towards an existential analysis. The prosthetic nature of technology must be affirmed beyond its functionality, for since the beginning of humanity, access to truth has always depended on the invention and use of tools. This fact remains invisible to many, which makes the conflict between machine evolution and human existence seem to originate from an ideology deeply rooted in culture.

We live in various positive feedback loops represented as culture. Since the beginning of modern industrial society, the human body has been subordinated to repetitive rhythms, and consequently the human mind has been subsumed by the prophecies of industry. Whether American Dream or Chinese Dream, enormous human potential has been suppressed in favor of a consumerist ideology. In the past, philosophy was tasked with limiting the hubris produced by machines and with freeing human subjects from feedback loops in the name of truth. Today, philosophers of technology are instead anxious to affirm these feedback loops as the inevitable path of civilization. The human now recognizes the centrality of technology by wanting to resolve all problems as though they were technical problems. Speed and efficiency rule the entirety of society as they once ruled only the engineering disciplines. The desire of educators to realize a paradigmatic change in a few years’ time discredits any fundamental reflections on the question of technology, and we end up in a feedback loop again. Consequently, universities continue producing talent for the tech industry, and this talent goes on to develop more efficient algorithms to exploit users’ privacy and manipulate the way they consume. For universities, it should be more urgent to deal with these questions than to ponder banning ChatGPT.

Can the human escape this positive feedback loop of self-fulfilling prophecy so deeply rooted in contemporary culture? In 1971 Gregory Bateson described a feedback loop that traps alcoholics: one glass of beer won’t kill me; okay, I’ve already started, a second one should be fine; well, two already, why not three? An alcoholic, if they’re lucky, might get out of this positive feedback loop by “hitting bottom”—by surviving a fatal disease or a car accident, for example.14 Those lucky survivors then develop an intimacy with the divine. Can humans, the modern alcoholics, with all their collective intelligence and creativity, escape this fate of hitting bottom? In other words, can the human take a radical turn and push creativity in a different direction?

Isn’t such an opportunity provided precisely by today’s intelligent machines? As prostheses instead of rote pattern-followers, machines can liberate the human from repetition and assist us in realizing human potential. How to acquire this transformative capacity is essentially our concern today, not the debate over whether a machine can think, which is just an expression of existential crisis and transcendental illusion. Perhaps some new premises concerning human-machine relations can liberate our imagination. Here are three (though certainly more can be added):

1) Instead of suspending the development of AI, suspend the anthropomorphic stereotyping of machines and develop an adequate culture of prosthesis. Technology should be used to realize its user’s potential (here we will have to enter a dialogue with Amartya Sen’s capability theory) instead of being their competitor or reducing them to patterns of consumption.

2) Instead of mystifying machines and humanity, understand our current technical reality and its relation to diverse human realities, so that this technical reality can be integrated with them to maintain and reproduce biodiversity, noodiversity, and technodiversity.15

3) Instead of repeating the apocalyptic view of history (a view expressed, in its most secular form, in Kojève and Fukuyama’s end of history), liberate reason from its fateful path towards an apocalyptic end. This liberation will open a field that allows us to experiment with ethical ways of living with machines and other nonhumans.

No invention arrives without constraints and problematics. Though these constraints are more conceptual than technical, ignoring the conceptual is precisely what allows evil to grow, as an outcome of a perversion in which form overtakes ground.16 Only when we break away from cultural bias and the self-fulfilling prophecy of the tech industry can we develop greater insight into the possibilities of the future, which cannot be based merely on data analysis and pattern extraction. It is highly probable that, before we get there, the industrial prophets of our time will have already realized that machines can predict the future better than they can.

“This occidental conception of history, implying an irreversible direction toward a future goal, is not merely occidental. It is essentially a Hebrew and Christian assumption that history is directed toward an ultimate purpose and governed by the providence of a supreme insight and will—in Hegel’s terms, by spirit or reason as ‘the absolutely powerful essence.’” Karl Löwith, Meaning in History (University of Chicago Press, 1949), 54.

Carl Schmitt, Political Theology: Four Chapters on the Concept of Sovereignty, trans. George Schwab (University of Chicago Press, 2005), 36.

Hans Blumenberg, “Progress Exposed as Fate,” chap. 3 of part 1, in The Legitimacy of the Modern Age, trans. Robert M. Wallace (MIT Press, 1985).

John R. Searle, “Minds, Brains, and Programs,” Behavioral and Brain Sciences 3, no. 3 (1980): 418.

Noam Chomsky, Ian Roberts, and Jeffrey Watumull, “The False Promise of ChatGPT,” New York Times, March 8, 2023.

Yuk Hui, Recursivity and Contingency (Rowman and Littlefield, 2019).

See Yuk Hui, “Philosophy after Automation,” Philosophy Today 65, no. 2 (2021).

Gilbert Simondon, “Technics Learned by the Child and Technics Thought by the Adult,” chap. 2 of part 2, in On the Mode of Existence of Technical Objects (1958), trans. Cecile Malaspina and John Rogove (Univocal, 2017).

“Many people have argued that the semantics of computational systems is intrinsically derivative or attributed—i.e., of the sort that books and signs have, in the sense of being ascribed by outside observers or users—as opposed to that of human thought and language, which in contrast is assumed to be original or authentic. I am dubious about the ultimate utility (and sharpness) of this distinction and also about its applicability to computers.” Brian Cantwell Smith, On the Origin of Objects (MIT Press, 1996), 10. For an extended discussion of Smith’s work, see Yuk Hui, “Digital Objects and Ontologies,” chap. 2 in On the Existence of Digital Objects (University of Minnesota Press, 2016).

Gotthard Günther, Das Bewusstsein der Maschinen: eine Metaphysik der Kybernetik (Agis-Verlag, 1957); Gilbert Simondon, Sur la philosophie (PUF, 2016), 180.

Quoted in Tiernan Ray, “ChatGPT Is ‘Not Particularly Innovative,’ and ‘Nothing Revolutionary,’ Says Meta’s Chief AI Scientist,” ZDNET, January 23, 2023.

Brian Cantwell Smith, The Promise of Artificial Intelligence: Reckoning and Judgment (MIT Press, 2019). I engaged more with Smith’s argument in chapter 3 of Art and Cosmotechnics (e-flux and University of Minnesota Press, 2021).

Hannah Arendt, The Human Condition, 2nd ed. (University of Chicago Press, 1998), 127.

Gregory Bateson, “The Cybernetics of ‘Self’: A Theory of Alcoholism,” in Steps to an Ecology of Mind (Jason Aronson, 1987).

See Yuk Hui, “For a Planetary Thinking,” e-flux journal, no. 114 (December 2020).

See the question of evil in F. W. J. Schelling, Philosophical Investigations into the Essence of Human Freedom, trans. Jeff Love and Johannes Schmidt (SUNY Press, 2006).