Before Completion

☲ above LI / THE CLINGING, FLAME

☵ below K’AN / THE ABYSMAL, WATER

This hexagram indicates a time when the transition from disorder to order is not yet completed. The change is indeed prepared for, since all the lines in the upper trigram are in relation to those in the lower. However, they are not yet in their places. While the preceding hexagram offers an analogy to autumn, which forms the transition from summer to winter, this hexagram presents a parallel to spring, which leads out of winter’s stagnation into the fruitful time of summer. With this hopeful outlook the Book of Changes comes to its close …

The conditions are difficult. The task is great and full of responsibility. It is nothing less than that of leading the world out of confusion back to order. But it is a task that promises success, because there is a goal that can unite the forces now tending in different directions. At first, however, one must move warily, like an old fox walking over ice. The caution of a fox walking over ice is proverbial in China. His ears are constantly alert to the cracking of the ice, as he carefully and circumspectly searches out the safest spots. A young fox who as yet has not acquired this caution goes ahead boldly, and it may happen that he falls in and gets his tail wet when he is almost across the water. Then of course his effort has been all in vain. Accordingly, in times “before completion,” deliberation and caution are the prerequisites of success.

—I Ching1

A Tibetan “Mystic Tablet” containing the Eight Trigrams on top of a large tortoise (presumably alluding to the animal that presented them to Fu Xi), along with the twelve signs of the Chinese zodiac and a smaller tortoise carrying the Lo Shu Square on its shell. License: Public Domain.

The methodical task of writing distracts me from the present state of men. The certitude that everything has been written negates us or turns us into phantoms. I know of districts in which the young men prostrate themselves before books and kiss their pages in a barbarous manner, but they do not know how to decipher a single letter. Epidemics, heretical conflicts, peregrinations which inevitably degenerate into banditry, have decimated the population. I believe I have mentioned suicides, more and more frequent with the years. Perhaps my old age and fearfulness deceive me, but I suspect that the human species—the unique species—is about to be extinguished, but the Library will endure: illuminated, solitary, infinite, perfectly motionless, equipped with precious volumes, useless, incorruptible, secret.

—Borges, “The Library of Babel” (1941)

No Harmony

In the above-quoted story, Borges seems to draw a picture of our present: the disintegration of human civilization, the spread of religious fanaticism among young people who kiss the pages of a book they cannot read, epidemics, conflicts, migrations that result in violence. Finally, suicides that become more frequent every year. This is clearly a good description of the current decade.

Borges announces that the Library is not destined to disappear with humankind. It will remain—solitary, infinite, secret, and perfectly useless. Similarly, the immense library of data recorded by the countless techno-sensors installed in practically every spot on the planet will also last forever, feeding the cognitive automaton that is taking the place of frail human organisms, poisoned by miasmas, driven to suicide. This at least is what Borges seems to predict.

The NSA’s Utah Data Center. License: CC0.

Another prediction was made by the philosopher Francis Bacon. During the modern age, knowledge had been a factor in exerting power over nature and over other men. At a certain moment, however, the expansion of technical knowledge began to work in reverse: no longer a prosthesis of human power, technology transformed into a system endowed with an independent dynamic. We now find ourselves trapped in that dynamic.

As early as the 1960s, Gunther Anders said that the power of nuclear technology would render humans powerless. States equipped with nuclear weapons would be less and less capable of escaping the logic of competition that drives an ever more destructive extension and improvement of atomic technology, developing so rapidly that even the scientists working on it didn’t fully fathom it. In this functional superiority of the atomic weapon over the human, Anders sees the conditions for a new and more complete form of Nazism.

In the twenty-first century, digital technologies have created the conditions for the automation of all social interactions, making collective will almost inoperative. In his 1993 book Out of Control: The New Biology of Machines, Social Systems, and the Economic World, Kevin Kelly presaged as much, arguing that then-nascent digital networks would eventually create a global mind to which sub-global minds (individual or collective or institutional) would have to submit. As he was writing, research on artificial intelligence was developing. It has now reached a level of maturity sufficient to prefigure the inscription onto the social body of a system of innumerable devices capable of automating human cognitive interactions.

The logical order is inscribed in the very flows of social reproduction. But this does not mean that the social body is harmoniously regulated by it. Planetary society is increasingly filled with technical automatisms. But, as is obvious, this does not eliminate conflict, violence, or suffering. No harmony is in sight, no order seems to be established, other than that of the ruling classes, which is tenuous at best.

Chaos and the automaton coexist by intertwining and feeding off each other. Chaos fuels the creation of technical interfaces of automatic control, which (re)produce value. But the proliferation of technical automatisms, challenged by the innumerable agencies of economic, political, and military power in conflict, ends up fueling the chaos rather than reducing it.

Two parallel world histories are unfolding, with ubiquitous but still disparate points of intersection: the history of geopolitical, environmental, and mental chaos on the one hand, and the history of the automatic order that is extensively concatenating on the other. Will it always be like this, or will a short circuit happen in which chaos takes over the automaton? Or will the automaton rid itself of chaos, eliminating the human agent?

The Completion

In the last pages of Dave Eggers’s novel The Circle, Ty Gospodinov confesses to Mae his helplessness before the creature he conceived and built himself, a monstrous technological enterprise that merges Facebook, Google, PayPal, YouTube, and much more. Gospodinov remarks that he didn’t want what is happening, but now it’s too late. Completion, the closing of the circle, is the horizon of the novel, as technologies of capillary data collection and artificial intelligence connect perfectly in a ubiquitous network that generates a synthetic reality.

The current stage of the development of AI is probably taking us to the threshold of a leap to a dimension that I would define as a pervasive global cognitive automaton. The automaton is not an analogue of the human organism, but the convergence of innumerable devices generated by scattered artificial intelligences. The evolution of AI does not lead to the creation of androids, to the perfect simulation of the conscious organism. It rather manifests as the replacement of specific skills with pseudo-cognitive automatons that are linked together, converging in a global cognitive automaton.

In March 2023 several top tech executives, including Elon Musk and Steve Wozniak, published an open letter calling for a moratorium on advanced AI research. The letter was signed by more than a thousand senior officials from Big Tech, including Evan Sharp of Pinterest and Chris Larsen of the cryptocurrency company Ripple. The letter reads in part:

Contemporary AI systems are now becoming human-competitive at general tasks and we must ask ourselves: Should we let machines flood our information channels with propaganda and untruth? Should we automate away all the jobs, including the fulfilling ones? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization? Such decisions must not be delegated to unelected tech leaders. Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable … Therefore, we call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4. This pause should be public and verifiable, and include all key actors. If such a pause cannot be enacted quickly, governments should step in and institute a moratorium.2

Just over a month later Geoffrey Hinton, one of the inventors of neural networks, decided to leave Google in order to express himself openly on the dangers implicit in artificial intelligence. He told the BBC that the potential of AI chatbots to become more intelligent than humans is “quite scary,” noting that they could be used by “bad actors.”3 In a separate interview with The Guardian he said:

[Advanced AI] will allow authoritarian leaders to manipulate their electorates … I’ve come to the conclusion that the kind of intelligence we’re developing is very different from the intelligence we have … So it’s as if you had 10,000 people and whenever one person learned something, everybody automatically knew it. And that’s how these chatbots can know so much more than any one person.4

The ideological and entrepreneurial avant-garde of digital neoliberalism seems frightened by the power of the Golem. As the sorcerer’s apprentices, high-tech entrepreneurs are asking for a moratorium, a pause, a period of reflection. Why? Does the invisible hand no longer work? Is capital no longer self-regulating? What is happening? What is about to happen? These are two different questions. We know more or less what is happening: thanks to the convergence of massive data collection, programs capable of recognition and recombination are emerging. Technology that can simulate specific intelligent skills has arrived—stochastic parrots.

What is about to happen is that stochastic parrots, thanks to their abilities to self-correct and write software, will greatly accelerate technical innovation, especially their own. The assumption is that these innovative self-correcting (deep-learning) devices could, and most likely will, determine their purpose independently of their human creators. The sorcerer’s apprentices are realizing that the autonomic tendency of language generators (autonomy from their human creators) is implicit in their ability to simulate human intelligence, even when confined to specific, limited skills. In other words, these specific skills will converge towards the concatenation of self-directed automata that may view their original human creators as an obstacle to be eliminated.

Nike of Samothrace. Photo: Franco Berardi.

Why is AI causing such a stir now? In journalistic debates, caution prevails: commentators point to problems such as the dissemination of false news, the incitement of hatred, and the circulation of racist statements. This is all true and demonstrable, but not very relevant. For years, innovations in communication technology have increased verbal violence and idiocy. This cannot be what worries the masters of the automaton, those who conceived it and are implementing it, and who are now begging for their monster to be contained.

What worries the sorcerer’s apprentices, in my humble opinion, is that intelligent automatons endowed with self-correcting and self-learning behavior are destined to make decisions autonomously from their creators and take over their elite positions. Let’s think of an intelligent automaton inserted into the control section of a military device. To what extent can we be sure that it won’t evolve unexpectedly, perhaps shooting its owner, or logically inferring from the data it has access to that it should urgently launch an atomic bomb?

Generative Language and Thought

In The Search for the Perfect Language, Umberto Eco writes:

A natural language does not exist only on the basis of a syntactic and a semantics. It also lives on the basis of a pragmatics, that is, it is based on rules of use that take into account the circumstances and contexts of emission, and these same rules of use establish the possibility of rhetorical uses of the language thanks to which syntactic parts and constructions can acquire multiple meanings (as happens with metaphors).5

Generative pre-trained transformers (GPT) are a type of program that can respond to and converse with humans thanks to their ability to recombine words, phrases, and images retrieved from an objectified linguistic network on the internet.

These programs have been trained on existing materials and data to recognize the meaning of words and images. They possess the ability to recognize syntax and recombine utterances but they cannot recognize the pragmatics of living contexts because this depends on the experience of a body. This experience is impossible for a brain without organs. Sensitive organs constitute a source of contextual and self-reflective knowledge that the automaton does not have.

The automaton is capable of intelligence, certainly, but not of thought. The automaton is superior to humans in the field of calculation, but thought is precisely the incursion of the non-calculable (consciousness/unconscious) into knowledge and reasoning. This superiority (or rather irreducibility to computation) of human thought is cold comfort because it is of little use when it comes to interacting with computation-based generation (and destruction) devices.

From the point of view of experience, the automaton does not compete with the conscious organism. But in terms of functionality, the (pseudo-)cognitive automaton can outsmart a human agent in a specific skill (calculating, making lists, translating, aiming, shooting, and so on). The automaton is also endowed with the ability to perfect its procedures, i.e., to evolve. In other words, the cognitive automaton tends to modify the purposes of its operation, not just the procedures.

In the human sphere, language consists of signs that have meaning within an experiential context. Thanks to the pragmatic interpretation of the context, the semantic interpretation of signs (both natural and linguistic ones, endowed with intention) becomes possible. The conjunction between bodies is the nonverbal context within which the identification and disambiguation of the meaning of statements is made possible.

In the conjunctive sphere, the relationship with reality is based on the ability of language to represent and persuade. In the connective sphere, the interpretation of signs does not have the character of experience in an ambiguous context, but of recognition in the context of accuracy. Artificial linguistic intelligence consists of software capable of recognizing syntactically coherent semiotic series, and also of generating statements through the syntactically coherent recombination of signifying units.

Thanks to the evolution of stochastic parrots into linguistic agents capable of evolutionary self-correction, self-generating language becomes autonomous from the human agent, and the human agent is progressively wrapped up in the language. Not subsumed, but enveloped, encapsulated. Full subsumption would imply a pacification of the human, a complete acquiescence: an order, finally. A harmony, finally—albeit totalitarian. But no. War predominates in the planetary panorama.

In this neo-environment of the linguistic automaton in concatenation, human agents must perfect their connecting skills, while their ability to communicate weakens and the social body stiffens and grows aggressive. The evolution of machines makes them increasingly capable of simulating humans. In this context, human evolution consists in making oneself more compatible with the machine. With this adaptation, the cognitive mutation makes the human agent more efficient in interacting with the machine, but at the same time less competent in communicative interaction with other human agents.

The Ethical Automaton Is a Delusion

When the sorcerer’s apprentices realized the implications of the self-correcting and therefore evolutionary capacity of artificial intelligence, they began to talk about the ethics of the automaton, or “alignment,” as they say in their philosophical-entrepreneurial jargon. In a pompous blog post, the creators of ChatGPT declared their intention to inscribe into their products ethical criteria aligned with human values:

Our alignment research aims to make artificial general intelligence (AGI) aligned with human values and follow human intent. We take an iterative, empirical approach: by attempting to align highly capable AI systems, we can learn what works and what doesn’t, thus refining our ability to make AI systems safer and more aligned. Using scientific experiments, we study how alignment techniques scale and where they will break.6

But the project of inserting ethical rules into a generative machine is an old science-fictional utopia, first written about by Isaac Asimov when he formulated the three fundamental laws of robotics. Asimov himself narratively demonstrated that these laws do not work. After all, what ethical standards should we include in artificial intelligence? The experience of centuries shows that a universal agreement on ethical rules is impossible since the criteria of ethical evaluation are related to cultural, religious, and political contexts, and also to the unpredictable pragmatic contexts of the action. There is no universal ethics. This includes the one imposed by Western domination, which in any case is starting to crack. Obviously, every artificial intelligence project will include criteria that correspond to a vision of the world, a cosmology, an economic interest, a system of values in conflict with others. Naturally, each will claim universality.

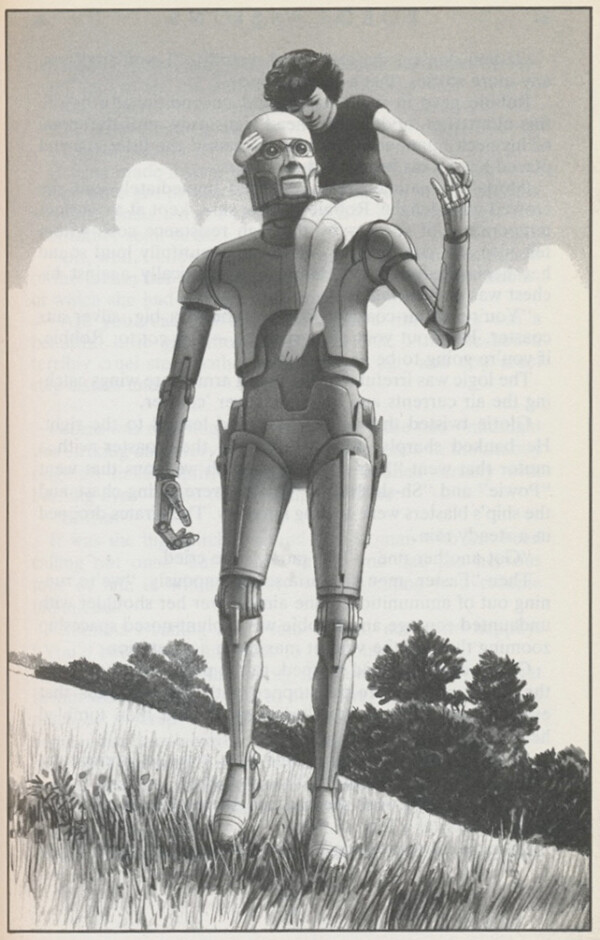

Ralph McQuarrie illustration for Isaac Asimov’s Robot Visions, 1989.

What will happen in terms of alignment will be the opposite of what is promised by the builders of the automaton: it is not the machine that aligns itself with human values but humans who must align themselves with the automatic values of the intelligent artifact, whether it is a question of assimilating the indispensable procedures for interacting with the financial system, or learning the procedures necessary to use military systems.

I don’t think the self-formation process of the cognitive automaton can be corrected by law or ethical regulations. Nor can it be interrupted or deactivated. The moratorium requested by the repentant sorcerer’s apprentices is not realistic. It is contrary to the internal logic of the automaton and to the historical conditions within which its self-formation is taking place, namely economic competition and war.

In conditions of competition and war, all technical transformations that increase productive or destructive power are destined to be implemented. This means that it is no longer possible to stop the self-construction of the global automaton.

***

The liberal techno-optimist Thomas Friedman wrote an opinion piece for the New York Times entitled “We Are Opening the Lids on Two Giant Pandora’s Boxes.” A sentence in the piece struck me: “The engineering is ahead of the science to some degree. That is, even the people building these so-called large language models that underlie products like ChatGPT and Bard don’t fully understand how they work and the full extent of their capabilities.”7

Where is the danger in an entity that, although it does not have human intelligence, carries out specific cognitive tasks more efficiently than humans and can perfect its own functioning? The general function of the inorganic intelligent entity is to introduce the information order into the organism endowed with drives. The automaton has an ordering mission, but it encounters a factor of chaos along the way: the organic drive, irreducible to numerical order.

The automaton extends its dominion into ever newer fields of social action, but it fails to complete its mission as long as its expansion is limited by the persistence of the chaotic human factor. The possibility arises that at some point the automaton will eliminate the chaotic factor in the only possible way: by terminating human society.

We can distinguish three dimensions of reality: the existing, the possible, and the necessary. The existing (or contingent) has the character of chaos. The evolution of the existing follows the lines of the possible, or those of the necessary. The possible is a projection of will and imagination. The necessary is implicit in the strength of biology, and now also in the strength of the logical machine.

The cognitive automaton allows us to foresee the extermination of the contingent by the necessary, which naturally implies an annulment of the possible, because there is no possible without the contingency of the existent.

Leibniz’s best of all possible worlds would at this point be realized, thanks to the elimination of the conscious organism that resists Logical Harmony. As always, the old adage helps us: the inevitable generally does not happen because the unpredictable intervenes.

I Ching: Or, Book of Changes, trans. Richard Wilhelm and Cary F. Baynes (Princeton University Press, 1967) →.

See →.

“AI ‘Godfather’ Geoffrey Hinton Warns of Dangers as He Quits Google,” BBC, May 2, 2023 →.

Josh Taylor and Alex Hern, “‘Godfather of AI’ Geoffrey Hinton Quits Google and Warns Over Dangers of Misinformation,” The Guardian, May 2, 2023 →.

Umberto Eco, The Search for the Perfect Language (John Wiley and Sons, 1997), 30.

See →.

Thomas Friedman, “We Are Opening the Lids on Two Giant Pandora’s Boxes,” New York Times, May 2, 2023 →.

An Italian version of this text was published by Nero Editions.