Machines have eyes. Calculating, extractive, synesthetic, they collect faces, monitor behaviors and habits, and capture your fingerprints, irises, and voice. So what exactly do these machines see, and how? These questions are explored in “Mirror with a Memory” at the Carnegie Museum of Art, a multi-format endeavor encompassing a collection of essays, a podcast, and Trevor Paglen’s exhibition “Opposing Geometries.” At the exhibition’s entrance, a looping video presents interviews with artists, curators, and professors discussing the history and current uses of AI and facial recognition, and the impact of these technologies on privacy, governance, and subjectivity. The interviewees overwhelmingly indict photography as “not innocent,” not objective: it concretizes the perspective of the photographer, they argue, and is a technology of overexposure, riddled with dangerous, overlooked biases. Yet as you move through the gallery, Paglen’s own works appear at odds with the responses offered by his peers. The portraits, landscapes, and installations pose subtler questions about epistemology and about art as a form of production.

“Opposing Geometries” consists almost entirely of photographs. Like Ansel Adams’s landscape photography, which Paglen often references in his work, the large-scale images of national parks and other natural wonders are portraits devoid of people. Many are strangely saturated with hallucinogenic colors, the result of Paglen’s applying a semantic segmentation algorithm traditionally used for medical imaging and geological surveys. The seemingly benign landscapes are also overlaid with geometric shapes, which Paglen frames as computer-vision algorithms’ attempts to make the photographs legible to themselves. The neat squares in Pacific Ocean I, Hough Transform; Haar (2018), whose title names the techniques used to find and foreground certain shapes in an image, simultaneously recall the kill boxes used in US drone strikes, the darkest side of surveillance, and the overhead satellite images we see regularly on sites like Google Earth. By avoiding overly descriptive labels, Paglen effects a quiet détournement: the shapes signify independently of the pictures over which they lie, and meaning erupts beyond the boundaries of language and intention.

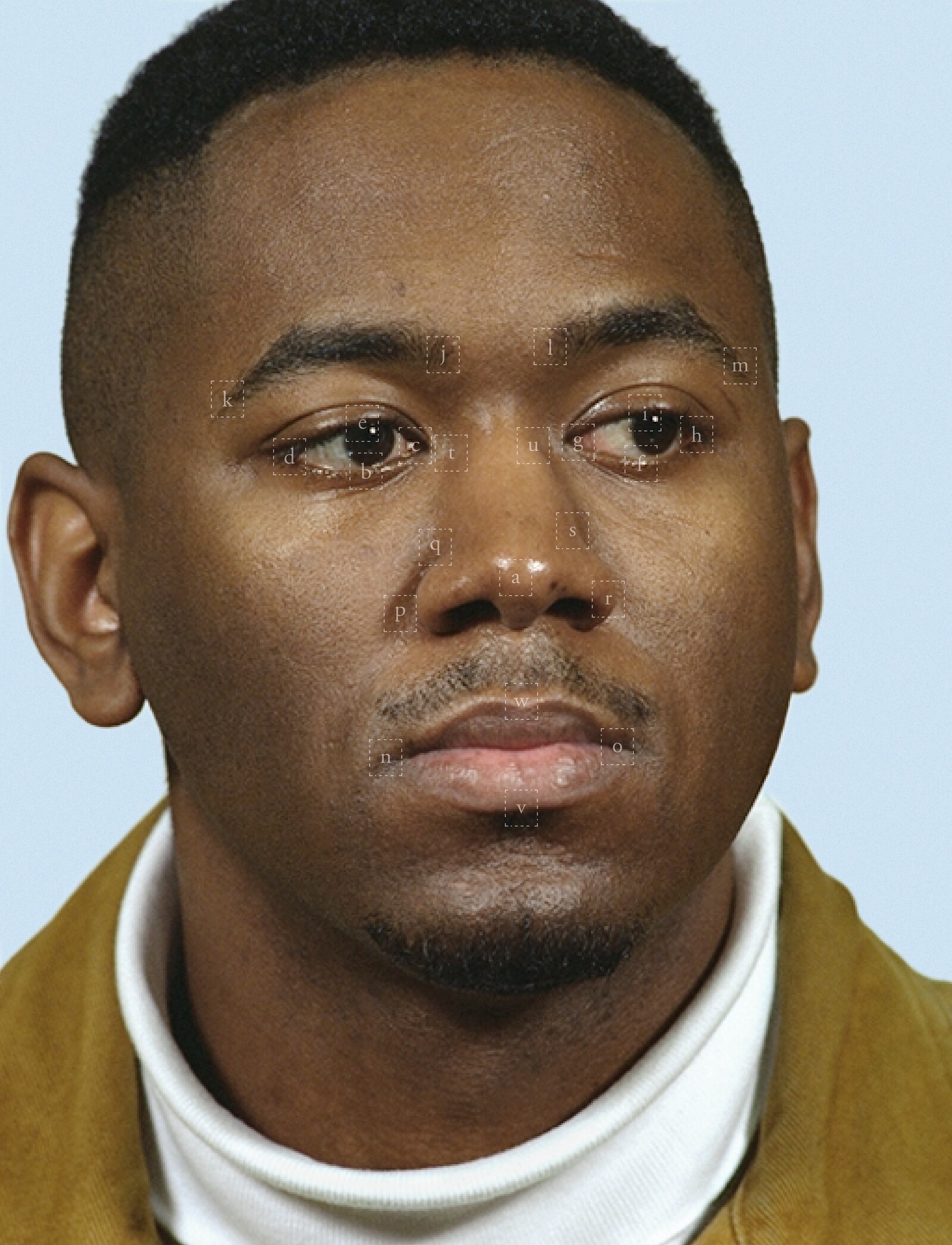

Opposite the landscapes hangs a row of faces, chosen from thousands of images of military personnel whose faces were used, in the 1990s, to create FERET, one of the first facial recognition programs. One sitter, her face softened by Paglen’s own program, tilts her head beguilingly toward the camera, recalling Johannes Vermeer’s Girl with a Pearl Earring (c. 1665). Such allusions to famous artworks and artists transform an otherwise prosaic dataset and expose the supposedly objective algorithm as no more “innocent” than photography (to echo the introductory video): it is beholden as much to its explicit programming as to art history and military R&D. Despite their clear position within one historical lineage, however, the portraits also highlight the show’s failure to reckon with the racialized history of photography and facial recognition. Facial recognition algorithms are notorious for having inherited the prejudices and blind spots of their makers; they have become yet another tool for the asymmetrical racial profiling of minorities. Amazon’s Rekognition software, for instance, falsely matched students and faculty of color at UCLA with mugshots of arrested individuals. Because such programs are largely trained on datasets composed of white, predominantly male faces, they also misidentify Black faces at rates five to ten times higher than white ones. Paglen’s FERET portraits include faces of varied racial and ethnic origins, but the work is more interested in revealing the military origins of facial recognition and the way that program preyed on its own employees by turning them into data. The omission is all the more notable given that the creative team of “Mirror with a Memory” includes Simone Browne, whose work deals explicitly with the intersection of Blackness, surveillance, and biometrics.

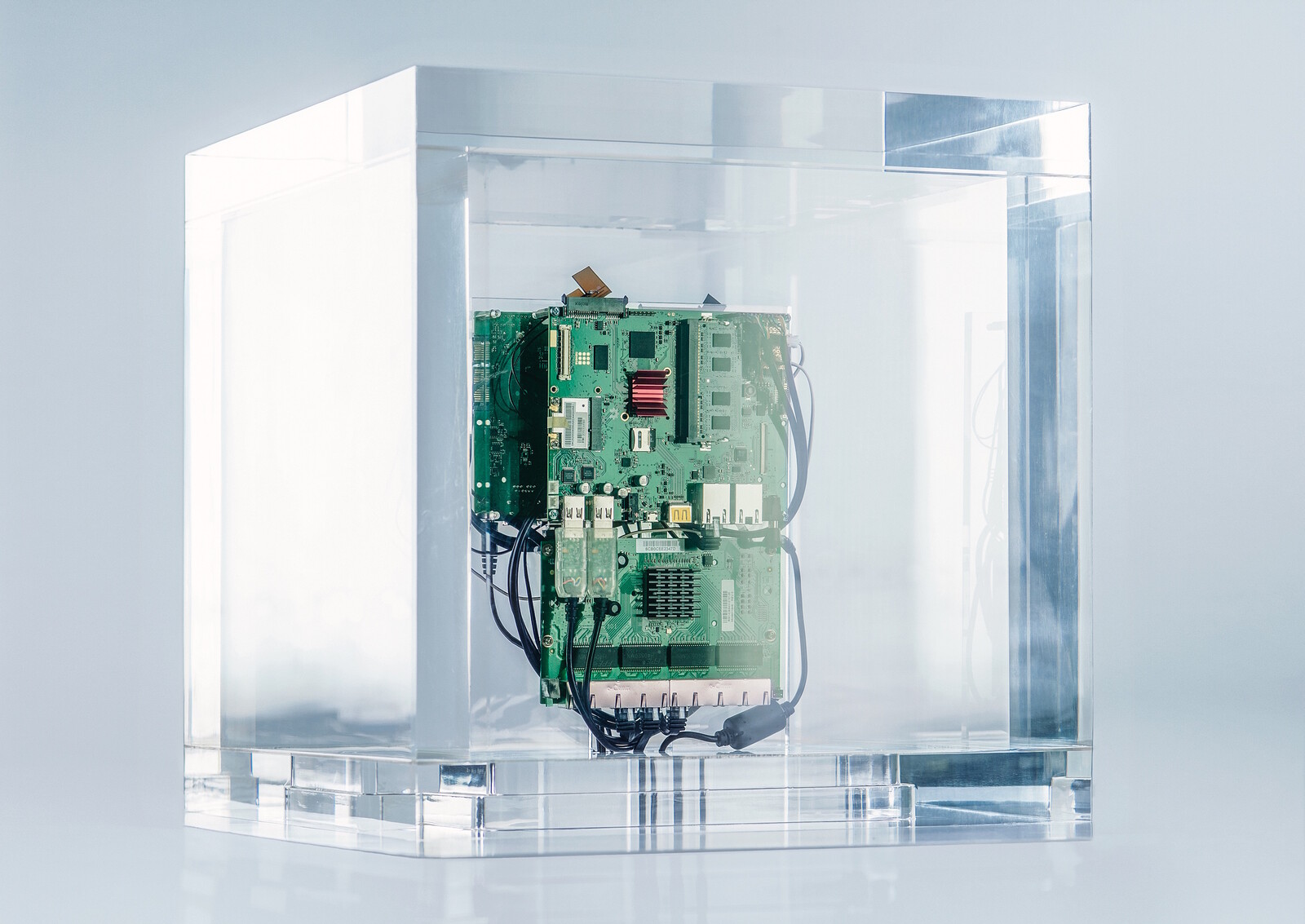

The only non-photograph in the show, a brilliantly paranoid installation called Autonomy Cube (2014–18), is a single transparent box of gleaming circuitry that invites visitors to connect to its customized Tor network and browse the internet in complete privacy. The irony of the piece and its object label, which emphasizes that it cannot and will not collect your information, is hard to miss in a museum that began collecting my data as soon as I used my credit card to pay for parking. A Tor network conceals online presence through spatial subterfuge, routing traffic through a global matrix of relays to mask a user’s real location. Autonomy Cube, meanwhile, masks its imbrication in a larger system of relationality through its spatialization as a lone object: the artwork is not the Lucite sculpture but the internet itself. The piece appears to champion privacy and individualism, but the solitary white room quickly becomes oppressive until the illusions of privacy and self-governance collapse, revealed as unsustainable by the very architecture of the museum. In a gesture part critique, part sardonic wink, the room’s single Tower-of-London-style window neatly frames the Starbucks across the street.

Karl Marx once predicted that capitalist society would evolve to the point where human beings would become mere living appendages of the mighty organism of the machine.¹ At first glance, “Opposing Geometries” is a kind of techno-aesthetic augury: a series of portents cast from the bones of existing technologies, politics, and modes of knowledge. Paglen’s work might appear abstract, but its social and political implications quickly become apparent. His 2019 show “Training Humans” (co-created with Kate Crawford) at the Fondazione Prada in Milan was dedicated to exposing the dangers of categorizing people under the auspices of “machine learning.” After the show debuted, ImageNet, an online repository widely used by researchers to train image recognition algorithms, removed some 600,000 images of people from its database.

“Opposing Geometries” deploys multiple possible visions of human and algorithmic coexistence. It complicates a dystopian futurism, offering a novel beauty that would not be possible without the cooperation of man and machine. It challenges notions of vision, technology, and humanity through the use of AI to expose the polysemy of images. By combining the click of the shutter with the click of a mouse, Paglen reminds us that static truth is not the purpose of art, and that there are no documentary photographs.

1. Karl Marx, “The Fragment on Machines,” in Grundrisse, trans. Martin Nicolaus (London: Penguin Books, 1973), 692.