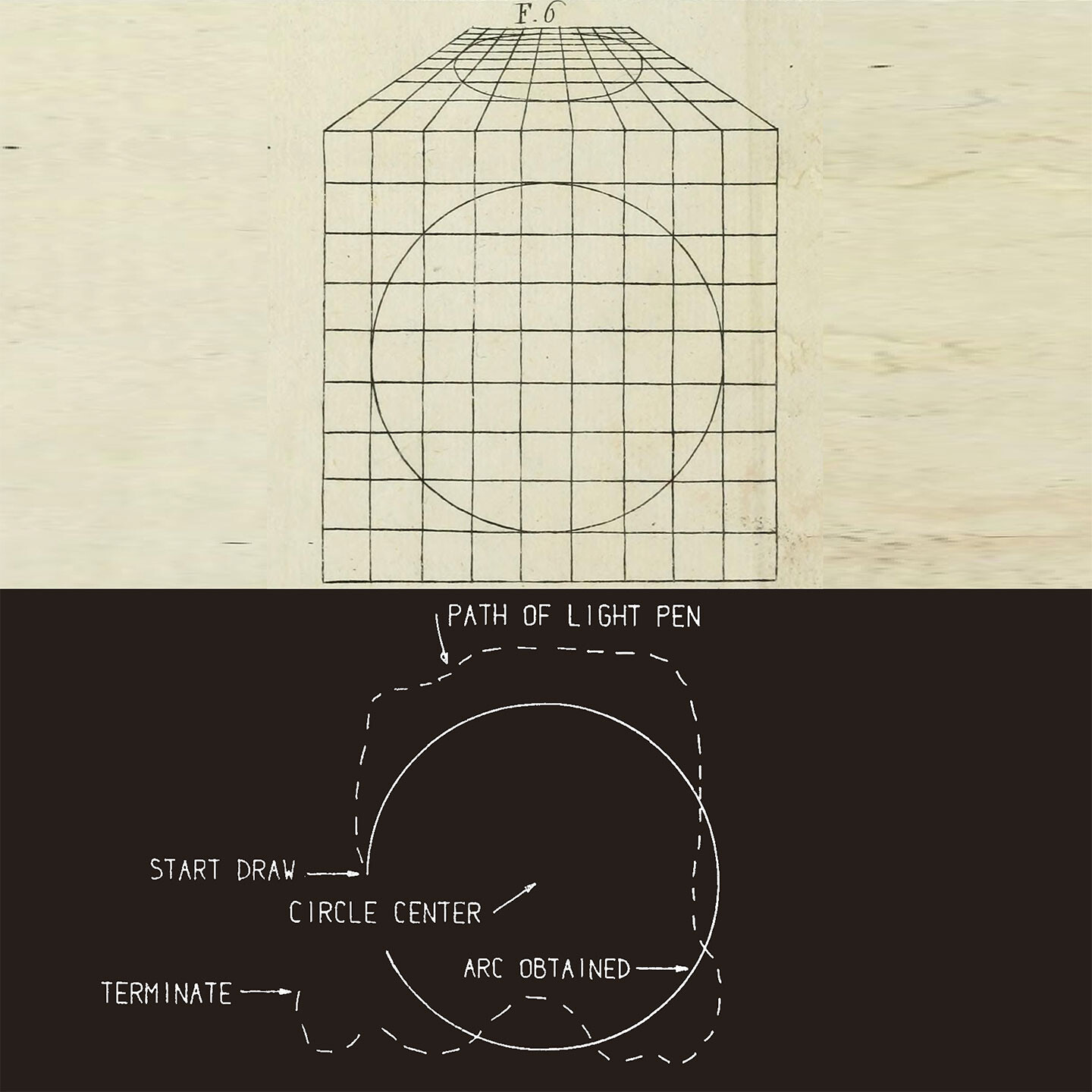

If to err is human, to design corrective systems is all the more so. When in 1962 Ivan Sutherland designed the first drafting program that would allow us, amongst other things, to draw better circles, he was in many ways simply providing an update to Leon Battista Alberti’s circle-drawing system issued some five hundred years earlier in De Pictura. Crucially, in both, one does not have to be able to draw a circle to draw a circle. Sutherland, under Claude Shannon’s wily guidance, radically augmented the corrective capacity of the algorithm at work in an exercise that is not difficult for machines but exceptionally tricky for humans. Whereas Alberti devised a way to draw curved perfection by erecting an approximating join-the-dots scaffold, Sutherland did away with the stepping-stone dots altogether.1

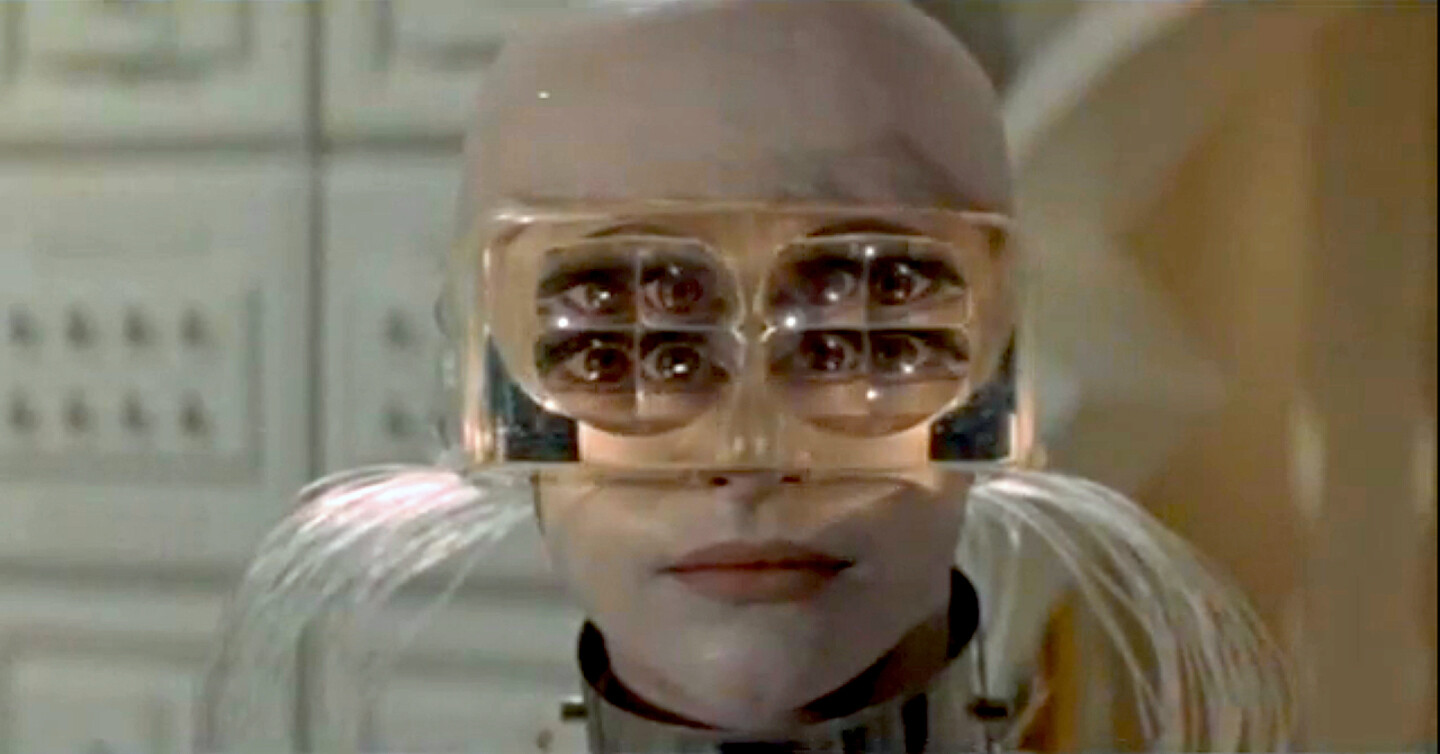

Sutherland’s extraordinarily prescient and elegant program, Sketchpad: A Man-Machine Graphical Communication System, inherited everything the US air defense system SAGE had recently invented and added almost everything else required to make the CAD interface through which so much design is now thought, developed and represented. This included the physical mechanics of drawing something (a cursor and hand-held input, first in the form of a touchscreen “light pen” that would later become a mouse) as well as of deleting it. By never registering the wobble of the hand-drawn circumference on screen, Sutherland sought to correct error before it could even be recognized by the human eye. Whereas Alberti’s armature gently corrected the erratic radius of our hand, Sketchpad effectively ignores it and uses the light pen’s location only to decide how much arc to draw.

> To draw a circle we place the light pen where the center is to be and press the button ‘circle center,’ leaving behind a center point. Now, choosing a point on the circle (which fixes the radius) we press the button ‘draw’ again, this time getting a circle arc whose angular length only [and not its radius] is controlled by light pen position.2
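Sutherland’s description separates two inputs that a freehand circle fuses: one point fixes the radius once and for all, and the moving pen thereafter supplies only an angle. What follows is a minimal Python sketch of that division of labor. It assumes planar coordinates and a counterclockwise sweep, and the function `sketchpad_arc` and its parameters are hypothetical illustrations, not Sketchpad’s own code.

```python
import math

def sketchpad_arc(center, rim_point, pen, steps=16):
    """Hypothetical sketch: sample points on an arc whose radius is fixed by
    rim_point and whose angular length is set only by the pen's direction."""
    cx, cy = center
    # The radius is fixed once, by the first point chosen on the circle.
    radius = math.hypot(rim_point[0] - cx, rim_point[1] - cy)
    start = math.atan2(rim_point[1] - cy, rim_point[0] - cx)
    # Only the pen's direction from the center is used; its distance
    # (the wobble of the hand) is discarded.
    end = math.atan2(pen[1] - cy, pen[0] - cx)
    sweep = (end - start) % (2 * math.pi)  # counterclockwise sweep (assumed)
    return [
        (cx + radius * math.cos(start + sweep * i / steps),
         cy + radius * math.sin(start + sweep * i / steps))
        for i in range(steps + 1)
    ]

# Two pen positions in the same direction but at different distances
# from the center produce the same arc: a quarter circle of radius 10.
steady = sketchpad_arc((0, 0), (10, 0), (0, 3))
wobbly = sketchpad_arc((0, 0), (10, 0), (0, 7.5))
```

However far the pen strays from the circumference, only its direction from the center survives. In this sense the hand’s wobble is never registered.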

Sketchpad’s design incorporated other strategies for opportunistic correction in the form of prediction using “pseudo-gravity,” whereby the light pen snaps onto any line it gets too close to.3 That is, the software “corrects” us through the assumptions of approximation. The desires of this corrective remit are not limited to questions of drawing and representation. Because a Sketchpad drawing is a “model of the design process,” Sutherland redesigned not only the drawing but also the design process itself.4 The automatic correction of drawings inaugurated a design process whose legacy is now such that it finally no longer needs a designer in the sense that disegno once imparted. Nor does it need input exactitude from us, which is not to say the product is rough, approximate or in any sense a “sketch.” On the contrary, the corrective nature of what is essentially the beginning of predictive programming allows you to be “quite sloppy,” as Sutherland explains, and still “get a precision drawing out at the same time.”5 When the light pen decides the hand is simply being sloppy and is close enough to a line to snap onto it, the time for doubt or hesitation is foreclosed. Sketchpad signifies, tout court, the end of doubt; predictive programming as preemptive correction.
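The “pseudo-gravity” Sutherland recalls amounts to a nearest-point test with a threshold. Below is a minimal Python sketch. It assumes straight segments only, leaving out the snapping to intersections that Sutherland also mentions, and it uses an arbitrary `threshold` of 5 units. The names `project_onto_segment` and `pseudo_gravity` are hypothetical.

```python
import math

def project_onto_segment(p, a, b):
    """Return the point on segment a-b closest to p."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0:  # degenerate segment: a single point
        return a
    # t locates the foot of the perpendicular, clamped to the segment's ends.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return (ax + t * dx, ay + t * dy)

def pseudo_gravity(pen, segments, threshold=5.0):
    """Hypothetical pseudo-gravity: if the pen lies within `threshold` of a
    segment, return the exact point on the nearest such segment; otherwise
    return the pen position unchanged."""
    snapped, best = pen, threshold
    for a, b in segments:
        q = project_onto_segment(pen, a, b)
        d = math.dist(pen, q)
        if d <= best:
            snapped, best = q, d
    return snapped

# A horizontal line from (0, 0) to (100, 0).
lines = [((0, 0), (100, 0))]
near = pseudo_gravity((40, 3), lines)   # pulled onto the line
far = pseudo_gravity((40, 12), lines)   # left where the hand put it
```

The design choice that matters is the threshold. Inside it, the program substitutes its own exact coordinates for the hand’s; outside it, the hand is left alone.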

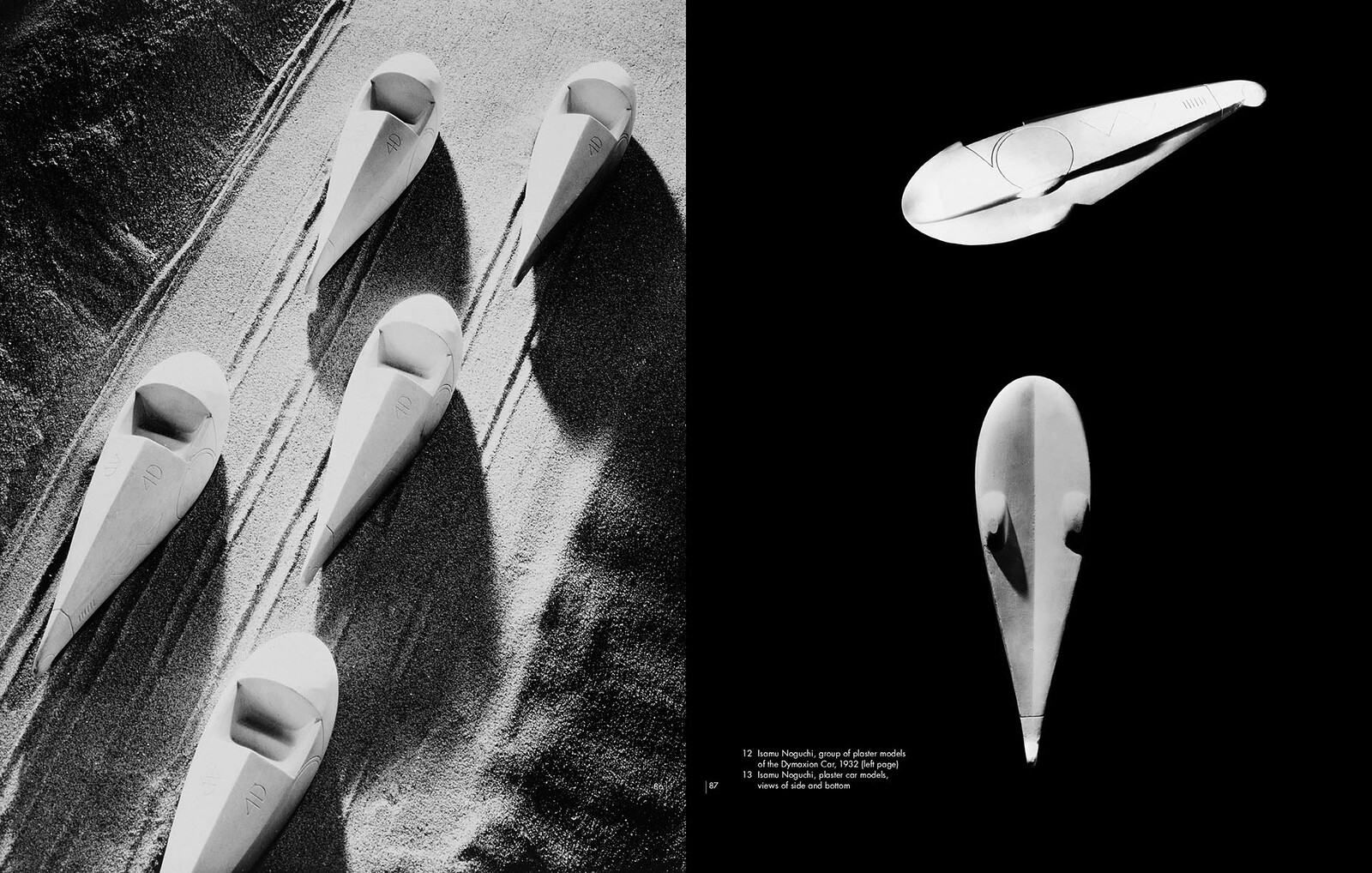

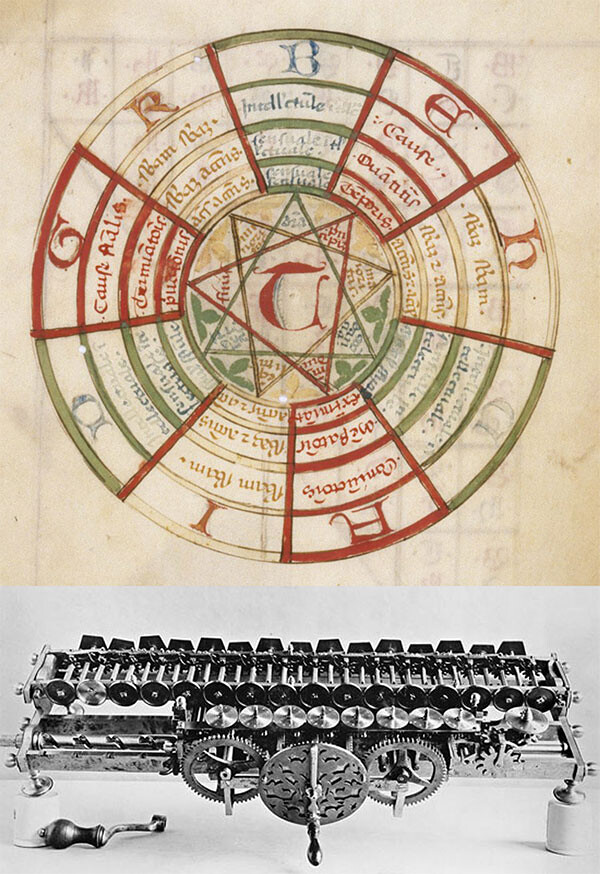

Ramon Lull, Seconda Figura mnemonic wheel. Johannes Bulons, Commentary on Ramon Lull’s Ars Generalis et Ultima, Venice, 1438. Bodleian Libraries, Oxford. Bottom: Gottfried Wilhelm Leibniz, Stepped Reckoner without the cover, 1694. Encyklopädie der Mathematischen Wissenschaften mit Einschluss ihrer Anwendungen, Vol. 2, Arithmetik und Algebra, Druck und Verlag von B. G. Teubner, Leipzig, 1904.

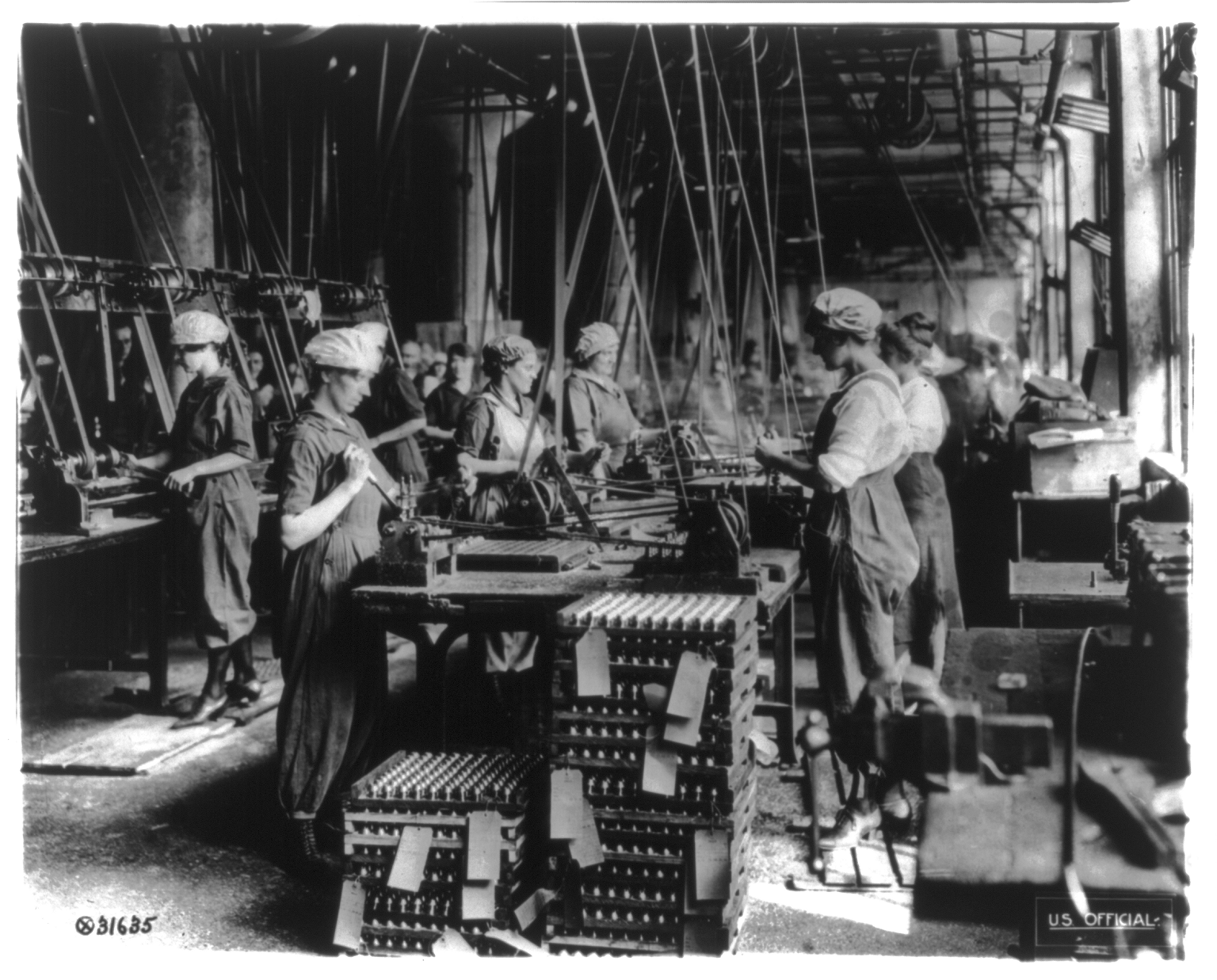

Any corrective system must, by definition, be both reductive and universal—it must be able to rein in an infinite set of erroneous variations and it must be understood, valued and employed by all. Our most ancient and exquisite self-correcting tool has always been neither the pen nor the scalpel, but the algorithm.6 Latent in the pre-history of the algorithms that now organize almost every aspect of the (designed) environment and mediate our every intention or desire is, quite simply, a facility for correction. The verb to err—to drift from a true course—reminds us that the task of any corrective system is primarily circulatory; that is, it is architectural: a set of corridors through which data is irrigated without fear of deviation. For some strange reason we are wont to forget quite how old this architecture is.7 It was there long before John Diebold, who first coined the term “automation” in the 1940s, formulated the difficulty of superimposing a control system on top of an information system, and in so doing initiated what has recently become known as machine learning.

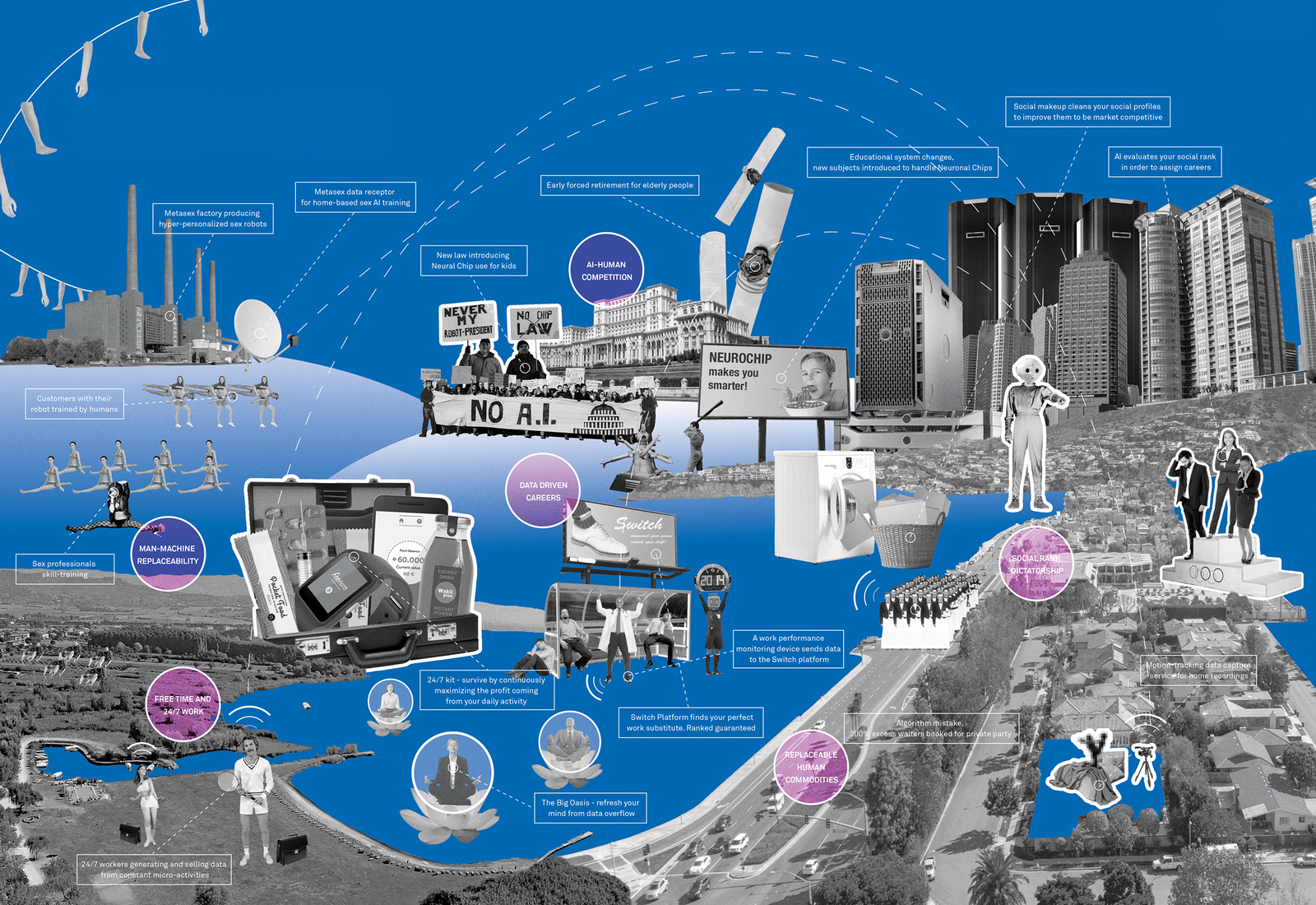

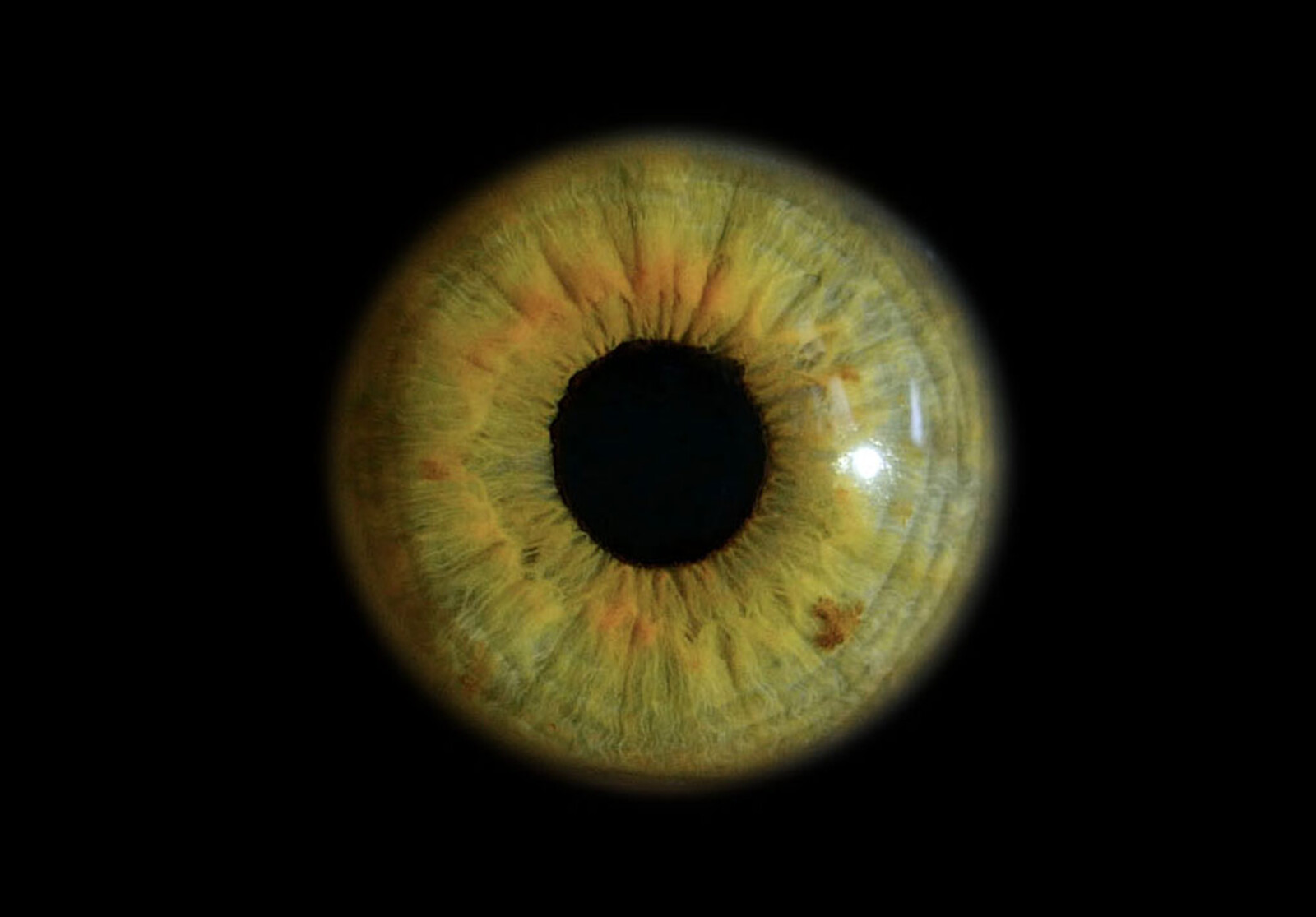

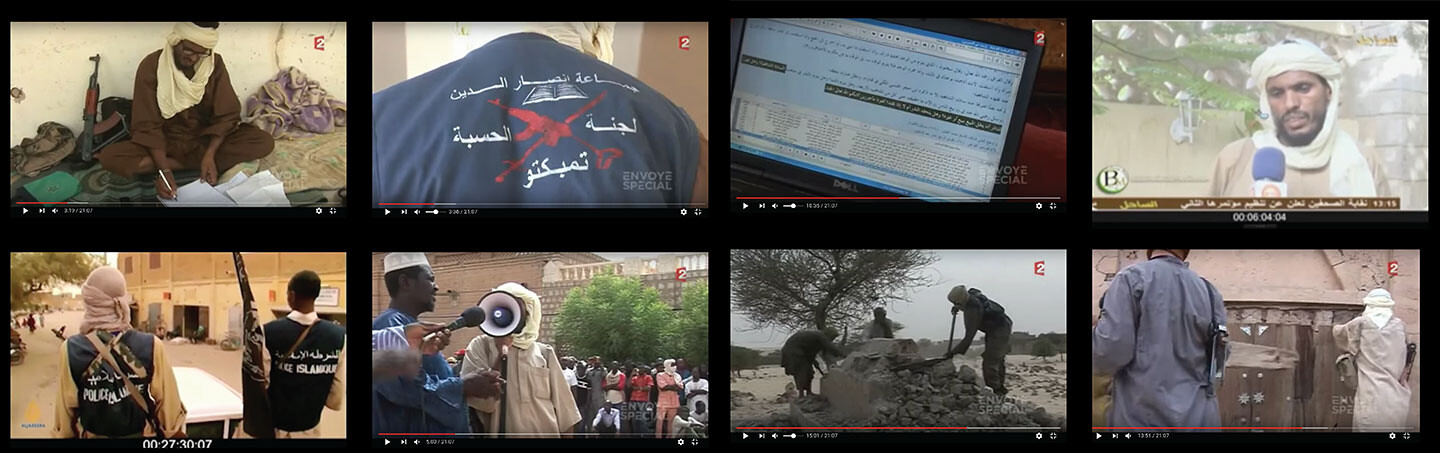

Today, the predictive disposition of algorithms corrects not just how we park our cars or for how much of the day we sit, but also how we spell our best friends’ names or choose whom we will fall in love with next, and sometimes, even why. Like the mnemonic architectures of Antiquity and the Renaissance that, as Harald Weinrich points out in Lethe, had reserved a seat for everything except forgetting, these algorithms similarly allow every output decision except indecision itself.8 In an effort to disarm our innate hostility towards algorithms, Pedro Domingos describes them as “curious children” that “observe us, imitate and experiment.”9 In the evangelical (and paranoid) narratives that surround the search for the Master Algorithm, what is often left out is that these badly brought up children, while eating up all the data we can feed them, can’t resist the impulse to correct us.10 Be they Naïve Bayes, Nearest-Neighbor or Decision-Tree-Learner algorithms, they are all shockingly simple in their design. Their architectures speak of an almost nihilistic reduction, and so does the promise of the Master Algorithm: to be the last thing we will ever need to design, invent or solve, since it will do the rest for us, forever.
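To give a sense of how simple these learners can be, here is a one-nearest-neighbor classifier in Python. It is a generic textbook sketch, not code from Domingos. The toy features (hours seated, thousands of steps) and the labels are invented for illustration.

```python
import math

def nearest_neighbor(examples, query):
    """1-nearest-neighbor classification: return the label of the stored
    example whose features are closest (Euclidean distance) to `query`."""
    _, label = min(examples, key=lambda ex: math.dist(ex[0], query))
    return label

# Invented toy data: (hours seated, thousands of steps) -> label.
examples = [
    ((9.0, 2.0), "stand up"),
    ((3.0, 11.0), "no prompt"),
]
print(nearest_neighbor(examples, (8.0, 3.0)))  # prints: stand up
```

“Training” here is nothing more than storing the examples. Every new case is then corrected toward whichever past case it most resembles.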

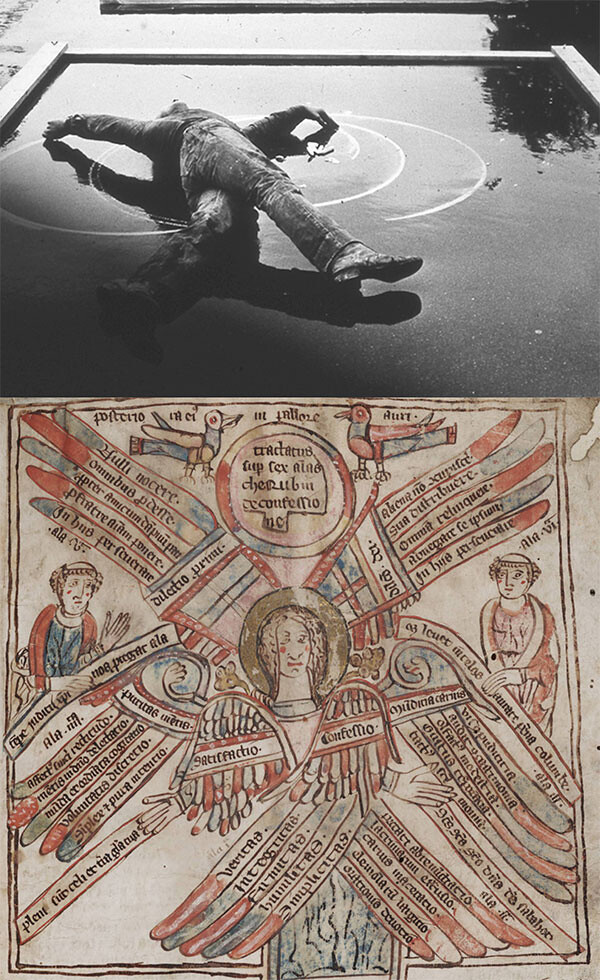

Stuart Brisley, Beneath Dignity, Bregenz, 1977. Photo: Janet Anderson. Courtesy of Stuart Brisley. Bottom: Early fourteenth-century mnemonic cherub from Cistercian monastery of Kamp, Germany. Beinecke Rare Book and Manuscript Library, Yale University.

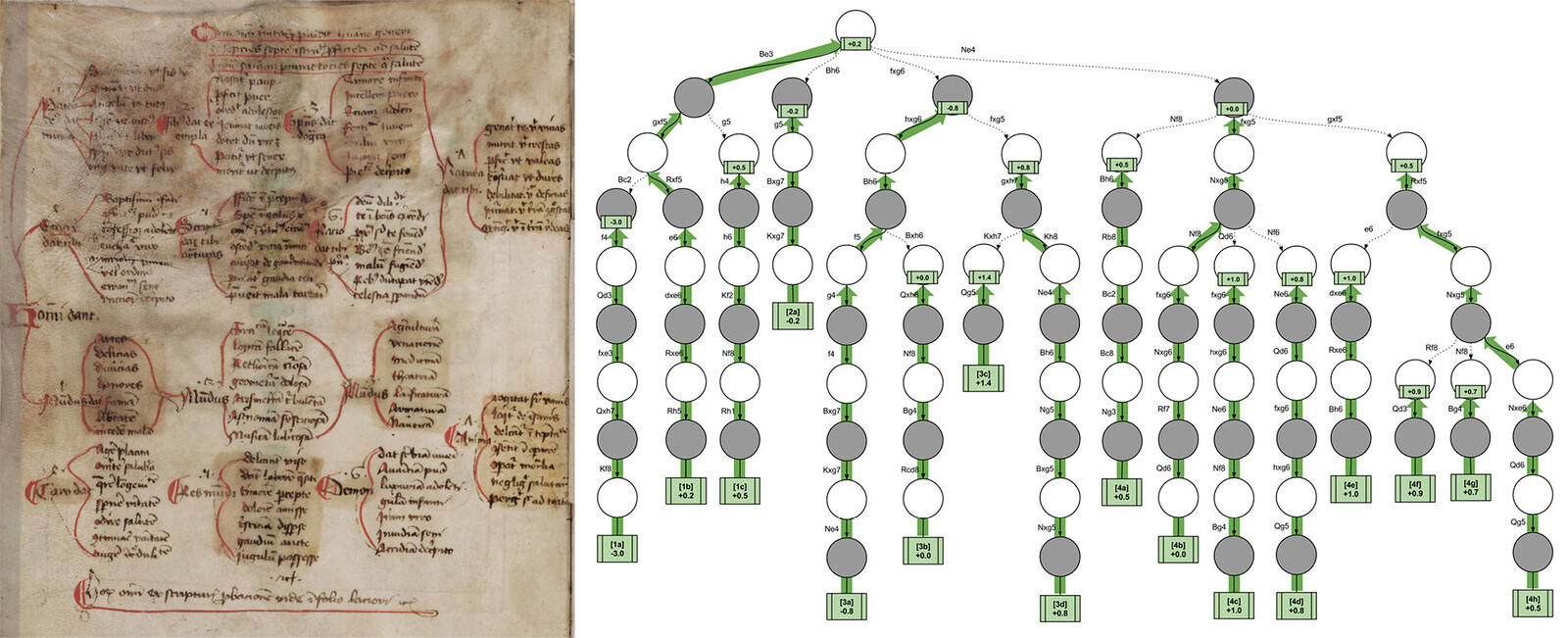

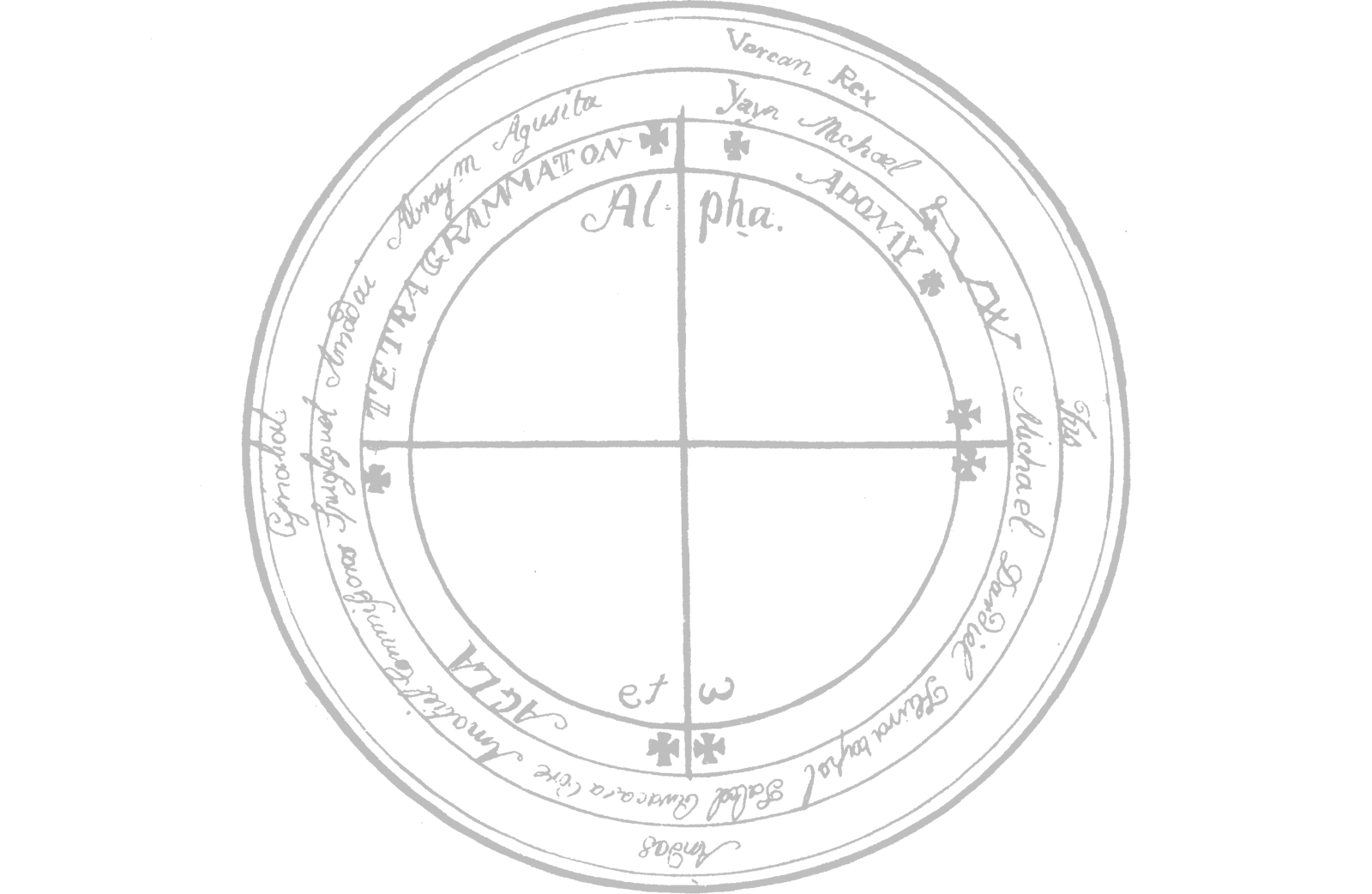

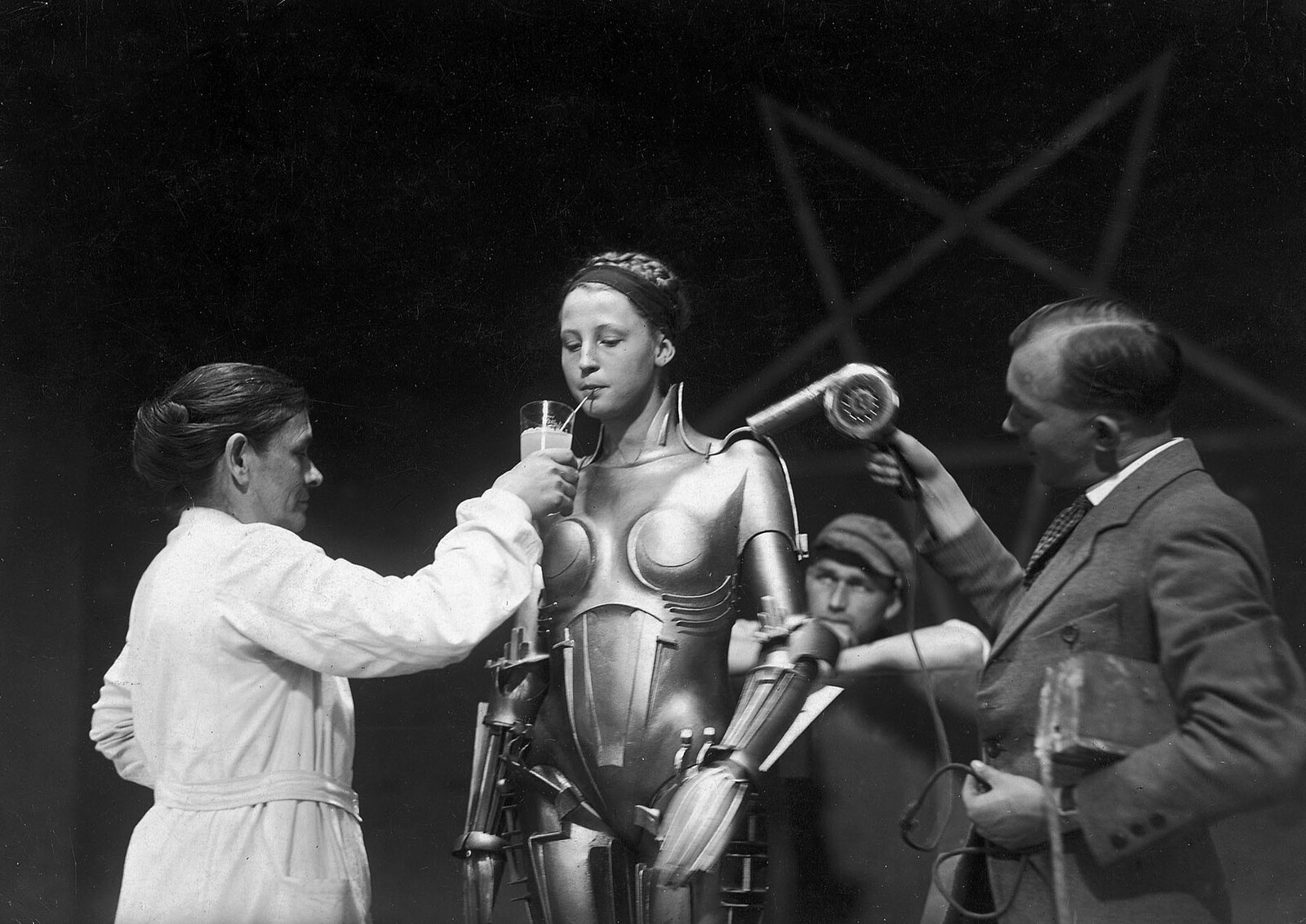

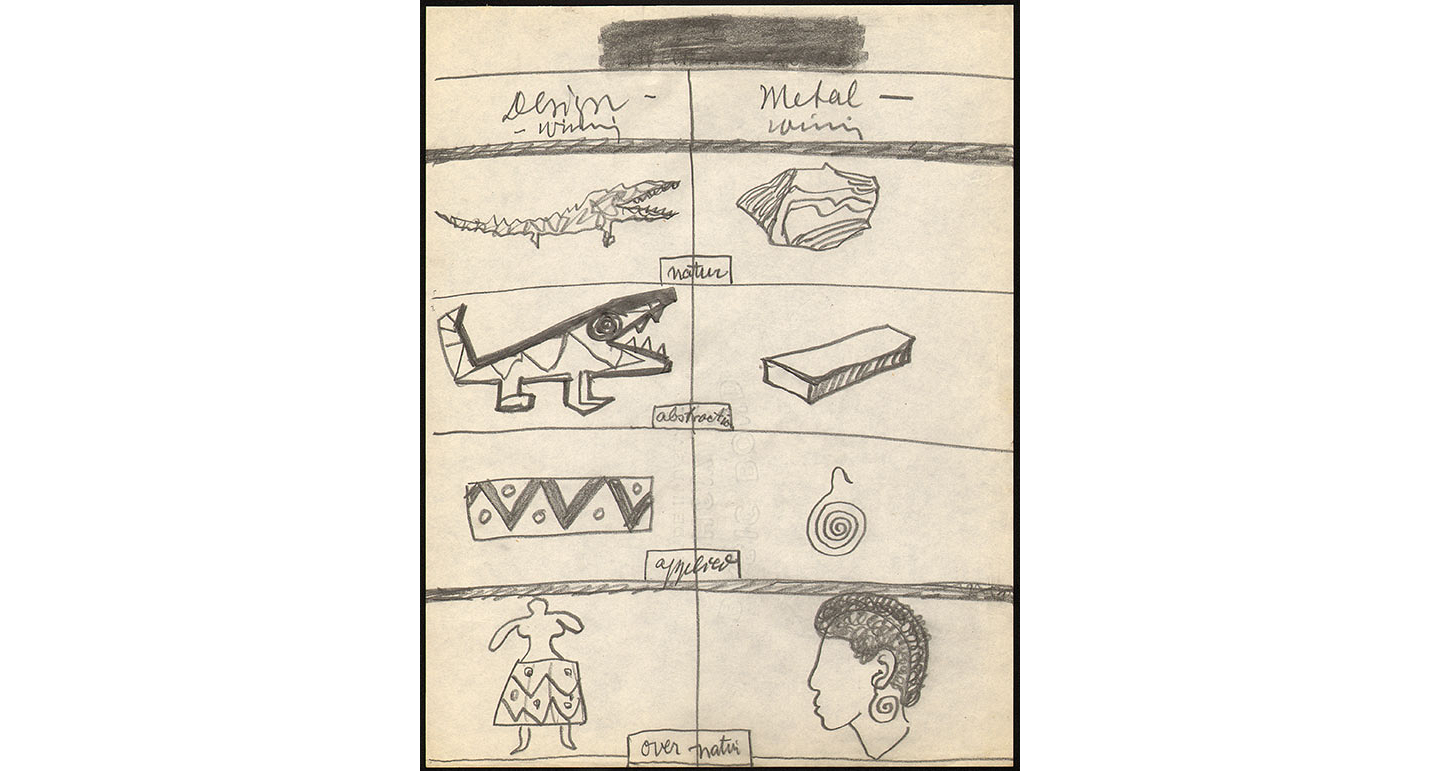

There is a familiar echo in this recourse to a reductive totality that will change everything for good. Far from being simply a product of the digital, the algorithm and the genealogy of machines of thought to which it belongs have been correcting us for a very long time. The development of a system that might “calculate” the truth is arguably as old as the idea of the machine itself and certainly inseparable from it. Ramon Lull housed his Ars Combinatoria in a notional set of revolving paper wheels long before Gottfried Leibniz’s ratiocinator gave those same wheels materiality (brass) and cogs that would count their way to Charles Babbage, if not to the contemporary digital. But there is more to this entanglement of machine and thought and their mutual bodies. In the historic search for an algorithm that would correct us, we have endeavored to equal the machine, and failing that to mimic it, long before it even existed. That is, the machine of thought is our most treasured and cunning mirror, and the “quest” for Universal Learning (the holy grail of today) is simply a digital reformulation of the early Enlightenment question of a Universal Language.
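Lull’s wheels mechanize combination. As an illustration, assume the nine-letter alphabet B, C, D, E, F, G, H, I, K of Lull’s Ars brevis. The unordered pairs of distinct letters then number 36, the count of compartments in his third figure. The second function is a hypothetical model of two concentric wheels turned notch by notch. It is not a reconstruction of any particular figure, and it assumes both wheels carry the same number of letters.

```python
from itertools import combinations

# Assumed alphabet: Lull's nine letters B to K (there is no J).
ALPHABET = "BCDEFGHIK"

def chambers(alphabet=ALPHABET):
    """Every unordered pair of distinct letters."""
    return ["".join(pair) for pair in combinations(alphabet, 2)]

def wheel_alignments(outer, inner):
    """Hypothetical model of two concentric wheels of equal size: for each
    notch the inner wheel is turned, list the letter pairs that line up."""
    n = len(outer)
    return [
        [(outer[i], inner[(i + turn) % n]) for i in range(n)]
        for turn in range(n)
    ]

print(len(chambers()))  # prints: 36
```

A full revolution of the inner wheel aligns every letter with every other letter exactly once, which is the exhaustive, mechanical character the wheels lend to thought.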

Two years before Sutherland’s Sketchpad, Paolo Rossi wrote his preface to Clavis Universalis: Arti Della Memoria e Logica Combinatoria da Lullo a Leibniz. In it he transgressed the customary segregation of the projects of Medieval and Renaissance mysticism from those of Enlightenment rationalism by mapping out the complex fourteenth- to seventeenth-century feedback loops of the pansophic search for a Universal Method (logic and language).11 According to Leibniz’s arch formulation, a Universal Method was to “Help us to eliminate and extinguish the controversial arguments which depend upon reason, because once we have realized this language, calculating and reasoning will be the same thing.”12 That is, in providing an omniscient system able to calculate the truth, it would act as the bridge connecting what had been (and still was) God to what (no doubt) will be the Master Algorithm. By strategically recasting Leibniz and his work on logic as poised at the end of the Renaissance rather than at the beginning of Modernism, Rossi exposed a previously concealed and complex network of influences. This network directly connected fourteenth-century cabala to seventeenth-century Real Characters, and ancient Ars Memoria and rhetoric to Leibniz’s logical “calculus.” That is, Leibniz’s invention of what proved to be the progenitor of modern logic emerged from within, rather than simply in reaction to, a milieu that was antithetical to modernity. Therefore he, Francis Bacon and René Descartes, as the “triumvirate of logic,” did not do it alone, nor were their sources quite so logical.

Rossi’s heterogeneous network combined scientists, philosophers and educational reformers with, as he puts it, the “less commendable company of magi, cabalists, pansophists and the constructors of memory theatres and secret alphabets.”13 The algorithms that power the data economy and manage our lives today are fed on a staple diet of all our dithering and twitching clicks, encoding all of our fears and prejudices in their very make-up. In the same way, the project of a Universal Language was contaminated from the very start by the dark paranoia of the magi and the cabal. All the desires that were embedded in those memory palaces, theatres, wheels, trees and seraphic angel wings were installed in the new architectures of linking chains (catenae) and tables of Universal Languages with their newly potent alphabets of Real Characters, for which “if order is the mother of memory, then logic is the art of memory. Dealing with order is, in fact, the task of logic.”14

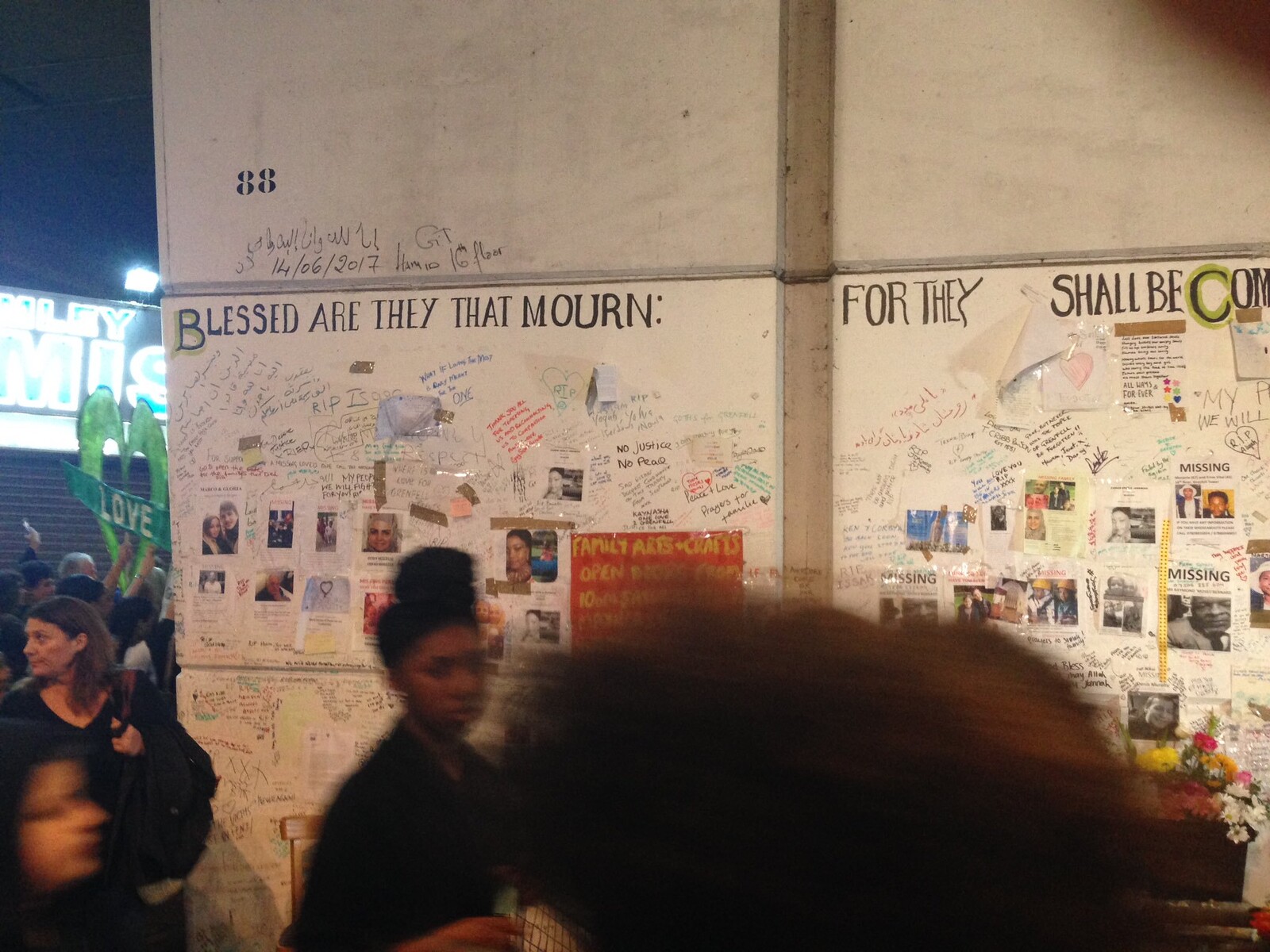

Barely concealed in each of these endeavors and their concomitant architectures is the corrective impulse, usually masked as efficiency, which will short-circuit doubt. A Universal Language must show “a way to remedy the difficulties and absurdities which all languages are clogged with ever since the Confusion, or rather since the Fall, by cutting off all redundancy, rectifying all anomaly, [and] taking away all ambiguity and aequivocation.”15 Bacon’s writings on Real Characters made no bones about his antipathy towards the vagueness and promiscuous slipperiness of words in natural languages, which he regarded not only as an obstacle to thought but as an almost physical barrier, placed between our corporeal existence and the facts and laws of nature, which must be systematically dismantled. Nor were definitions of any use: “definitions can never remedy this evil, because definitions themselves consist of words, and words generate other words.”16 Robert Boyle proposed that words, in their new life as Real Characters, do penance and emulate numbers: “If the design of real characters take effect, it will in good part make amends for what their pride lost them at the tower of Babel … I conceive no impossibility that opposes the doing that in words that we see already done in numbers.”17 Implicit in the corrective project is always punishment: for our wobbly circles, our ill-parked cars, our sedentary days and our impulsive choice of lovers.

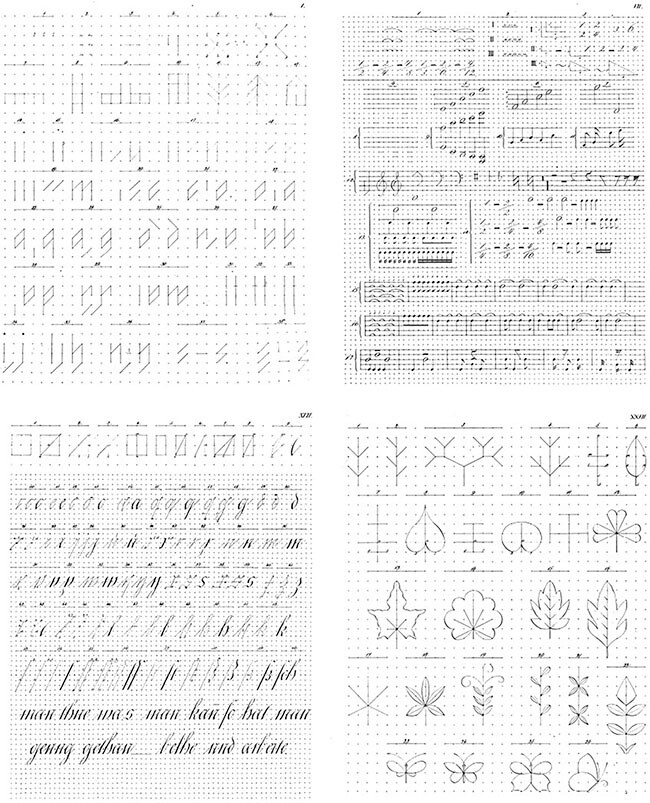

As if artificial languages had failed to shed fully the propensity of their natural counterparts to morph and propagate, Universal Languages proved to be rather promiscuous. Not unlike the software to follow, several competing versions coexisted; within their diversity we can recognize many signature traits inherited by the algorithms that currently design our days.18 All possessed hermetic architectures from which there was no exteriority; nothing could escape their understanding and control, including their authors. All were also projects that required total design: an all-saturating artifice of signs that must mediate between us and every phenomenon in existence. All were lean constructs and shockingly simple; part of the defining efficiency of these engineered languages was the radical compression they achieved in what became an almost aesthetic imperative.19 This was facilitated by the fact that all utilized positional intelligence and had spatial ambitions from the start. Every sign in a Universal Language table corresponds to a particular thing, notion or action, and its position reveals the place that the signified thing or action occupies in the universal order of objects and actions. If this language was also to be a mirror, it did not long remain passive. As Leibniz expanded his Universal Language into an Ars Inveniendi, the Real Characters were transformed from passive signifiers into active designers that would effectively direct learning. “The name which is given to gold in this language would be the key to everything which can possibly be known about gold, rationally and in an orderly fashion, and would reveal what experiments ought to be rationally undertaken in connection with gold.”20 In other words, these languages were learning machines.

The most important obstacle for machine learning today is not David Hume’s problem of induction, the Achilles heel of big data that haunts its every move: how can we reasonably make predictions about what we have not observed based on what we have? It is instead the problem of overfitting, of seeing patterns that aren’t really there, of hallucinating logic where none lies. How very human. Denis Diderot already cautioned against the illusory nature of all that was inferred from data analysis in his Encyclopédie: “These methodological divisions aid the memory and seem to control the chaos formed by the objects of nature … But one should not forget that these systems are based on arbitrary human conventions, and do not necessarily accord with the invariable laws of nature.”21 What if we were to consider artificial intelligence to be the most human product of our imagination? It has always been driven by our fear of imagination itself: of the slipperiness of thought and of the words we weave with their artful dodging of logic, and of our ability not only to hallucinate but also to doubt, to defer decision, and to err. Our fear of artificial intelligence is only our fear of ourselves. We are all our fears and all their products. We are those badly brought up children.
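Overfitting can be shown with invented toy data whose underlying rule is y = 2x plus alternating noise. The sketch below compares two models. The first memorizes its training points and so mistakes the noise for a law. The second is an ordinary least-squares line. The data, the function names and the test points shifted by 0.4 are all assumptions made for illustration.

```python
def mse(model, xs, ys):
    """Mean squared error of a model over paired inputs and targets."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def memorizer(train_x, train_y):
    """Overfits by construction: answers with the y of the closest training x,
    reproducing every accident of noise as if it were a law."""
    def predict(x):
        i = min(range(len(train_x)), key=lambda j: abs(train_x[j] - x))
        return train_y[i]
    return predict

def straight_line(train_x, train_y):
    """Ordinary least-squares line: too simple to memorize the noise."""
    n = len(train_x)
    mx, my = sum(train_x) / n, sum(train_y) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(train_x, train_y))
             / sum((x - mx) ** 2 for x in train_x))
    intercept = my - slope * mx
    return lambda x: slope * x + intercept

# Invented toy data: the underlying rule is y = 2x, plus alternating noise of +/-1.
train_x = list(range(8))
train_y = [2 * x + (1 if x % 2 == 0 else -1) for x in train_x]
test_x = [x + 0.4 for x in train_x]
test_y = [2 * x for x in test_x]

for name, fit in [("memorizer", memorizer), ("line", straight_line)]:
    model = fit(train_x, train_y)
    print(name, "train:", round(mse(model, train_x, train_y), 3),
          "unseen:", round(mse(model, test_x, test_y), 3))
```

The memorizer scores a perfect zero on the data it has seen but does worse than the line on points it has not. The pattern it learned was never there.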

1. “As it would be an immense labor to cut the whole circle at many places with an almost infinite number of small parallels until the outline of the circle were continuously marked with a numerous succession of points, when I have noted eight or some other suitable number of intersections, I use my judgement to set down the circumference of the circle in the painting in accordance with these indications.” Leon Battista Alberti, On Painting, ed. Martin Kemp (London: Penguin Classics, 1991), 71.

2. With this you really do not need to be able to draw a circle to draw a circle; indeed, you only need to intend a circle. Ivan Sutherland, “Sketchpad: A Man-Machine Graphical Communication System,” in The New Media Reader, eds. Noah Wardrip-Fruin and Nick Montfort (Cambridge, MA: MIT Press, 2003), 111. My emphasis.

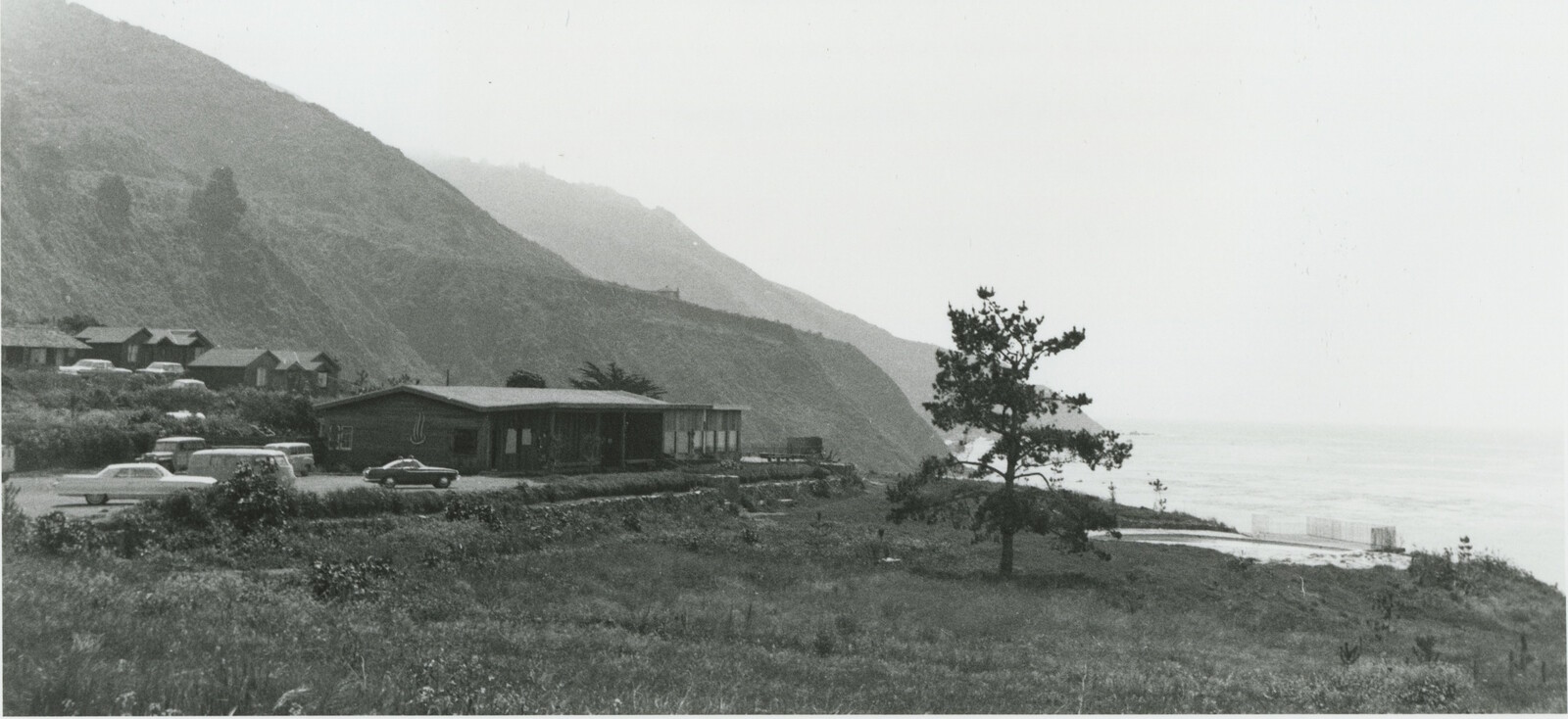

3. “I did a thing that I called ‘pseudo-gravity’ which said that around lines or around the intersection between lines that the light pen would pick up light from those lines and then the computer would do a computation saying: ‘Gee, the position of the light pen now is pretty close to this line, I think what the guy wants is to be exactly on this line’, and so what would happen is that if you moved the pen near to an existing part or near to an existing line it would jump and be exactly at the right coordinates.” Sketchpad, lecture by Ivan Sutherland, 3 March 1994, Bay Area Computer Perspectives, No. 102639871. US: Sun Microsystems, 1994.

4. Ibid., Sutherland, New Media Reader, 113.

5. MIT Science Reporter: Computer Sketchpad, John Fitch, Dir. Russell Morash, WGBH-TV, Boston (21 mins.), 1964.

6. I am here indebted to Gergely Kovács and to our AA students, with whom I have shared numerous conversations regarding the history of the algorithm.

7. We can find the nascent circulatory logics of this corrective architecture as far back as the search and retrieval pathways of memory in Aristotle, the mnemonic architectures of Cicero and Quintilian, and their Renaissance theatrical progeny by Giulio Camillo and Robert Fludd. We find its exhaustive (but never exhausted) recursive organization in the design of the penance-directing wings of Alan of Lille’s Medieval six-winged seraphs. Its ambitions for omniscience through radical reduction (one system that could process all data and thus hold all knowledge) appear in the wheels of Ramon Lull and Giordano Bruno, where the footprint of the machine was already present. Then, as machine met language, they appear in the Universal Languages of John Wilkins, Francis Bacon, Gottfried Leibniz and others. See Mary Carruthers, The Book of Memory: A Study of Memory in Medieval Culture (Cambridge: Cambridge University Press, 1990); The Medieval Craft of Memory: An Anthology of Texts and Pictures, eds. Mary Carruthers and Jan M. Ziolkowski (Philadelphia: University of Pennsylvania Press, 2002); Frances A. Yates, The Art of Memory (London: Routledge & Kegan Paul, 1966).

8. Harald Weinrich, Lethe: The Art and Critique of Forgetting (Ithaca: Cornell University Press, 2004).

9. See Pedro Domingos, The Master Algorithm: How the Quest for the Ultimate Learning Machine Will Remake Our World (New York: Basic Books, 2015).

10. Or to correct society in general: in the US, an algorithm is being used to help judges who are deciding on bail predict who is likely to reoffend. Meanwhile, the echo chambers of news-tailoring algorithms are personalizing the correction of our worldview, with unintended consequences. See “The Power of Learning,” The Economist (20 August 2016), 11.

11. Paolo Rossi, Clavis Universalis: Arti Della Memoria e Logica Combinatoria da Lullo a Leibniz (Milan: R. Ricciardi, 1960).

12. Ibid., Yates, 175.

13. Among them: Johann Alsted, Robert Boyle, Giordano Bruno, Giulio Camillo, Arthur Collier, Jan Amos Comenius, George Dalgarno, Thomas Hobbes, Sebastiano Izquierdo, Athanasius Kircher, Petrus Ramus, John Ray, Giambattista Vico and John Wilkins. Ibid., Rossi, xxii.

14. Johann Heinrich Alsted cited in Ibid., Rossi, 132.

15. George Dalgarno cited in Ibid., Rossi, 158.

16. Francis Bacon cited in Ibid., Rossi, 148.

17. Robert Boyle cited in Ibid., Rossi, 152.

18. The titles of a sample of the artificial languages published during this period speak volumes: The Groundwork or Foundation Laid (or so Intended) for the Framing of a New Perfect Language, Francis Lodowick, 1652; Logopandecteision, or an Introduction to the Universal Language, Thomas Urquhart, 1653; The Universal Character by which all Nations may Understand One Another’s Conceptions, Cave Beck, 1657.

19. John Wilkins, who whittled his language down to three thousand “words,” declared that they could be learnt in only two weeks. He also claimed that one year of study would yield a fluency that would take forty years to achieve in Latin.

20. Gottfried Leibniz cited in Ibid., Rossi, 182.

21. Denis Diderot and Jean le Rond d’Alembert, “Histoire Naturelle,” Encyclopédie, ou Dictionnaire raisonné des sciences, des arts et des métiers, par une société de gens de lettres, Vol. VII (Paris: 1751), 230.

Superhumanity is a project by e-flux Architecture at the 3rd Istanbul Design Biennial, produced in cooperation with the Istanbul Design Biennial, the National Museum of Modern and Contemporary Art, Korea, the Govett-Brewster Art Gallery, New Zealand, and the Ernst Schering Foundation.
