Noise in the sense of a large number of small events is often a causal factor much more powerful than a small number of large events can be. Noise makes trading in financial markets possible, and thus allows us to observe prices for financial assets… We are forced to act largely in the dark.

—Fischer Black1

In 1986 Fischer Black, one of the founders of contemporary finance, made a rather surprising announcement: bad data, incomplete information, wrong decisions, excess data, and fake news all make arbitrage possible. (Arbitrage refers to purportedly risk-free investments, such as the profit that can be made when one takes advantage of slight differences between currency exchanges, or in the price of the same stock, in two different locations.) In his famous article “Noise,” Black posited that we trade and profit from misinformation and information overload. Because it treats a large number of “small” events networked together as far more powerful than large-scale planned events, this vision of the market is not one of Cartesian mastery or fully informed decision makers. Noise is the very infrastructure for value.

In an age of meme-driven speculation, NFTs, and democratized options trading, such a statement might seem common sense. Even natural. Does anyone, after all, really think a cryptocurrency named as a joke for a small dog, or an almost bankrupt mall-based game retailer, is intrinsically worth anything, much less billions of dollars? Of course they do. For the past few years, great fortunes and major funds have collapsed and risen on just such bets. In retrospect, everyone seems to have perfect clarity about “value” investing, while at the same time, no one does. “Irrational exuberance,” to quote Federal Reserve Board Chairman Alan Greenspan in the late 1990s on the dot-com boom, might be the term to describe it. But Greenspan might have gotten it wrong on one crucial point: irrational exuberance is not the sign of market failure, but market success.

For Black, who was the student of Marvin Minsky and also invented the revolutionary trading instrument the Black-Scholes Options Pricing Model, “irrationality” was not an exception, but the norm; the very foundation for contemporary markets. Noise, Black argued, is about a lot of small actions networked together accumulating in greater effects on price and markets than large singular or perhaps even planned events. Noise is the result of human subjectivity in systems with too much data to really process. Noise is also, and not coincidentally, the language of mathematical theories of communication. The idea that we are all networked together and make collective decisions within frameworks of self-organizing systems that cannot be perfectly regulated or guided is ubiquitous today and integrated into our smartphone trading apps and social networks. Furthermore, many from behavioral scientists to tech entrepreneurs to political strategists have come to believe that human judgement is flawed, and that this is not a problem, but a frontier for social networks and artificial intelligence.

The options pricing model that Black invented with his colleague Myron Scholes exemplifies a broader problem for economists of finance: that theories or models, to paraphrase Milton Friedman, “are engines not cameras.” One way to read that statement is that the model does not represent the world but makes it. Models make markets. Models in finance are instruments such as derivative pricing equations or algorithms for high-speed trading. There are assumptions built into these technologies about gathering data, comparing prices, betting, selling, and timing bets, but not about whether that information is correct or “true,” or whether the market is mapped or shown in its entirety. These theories are tools, and they let people create markets by arbitraging differences in prices without necessarily knowing everything about the entire market or asset.

These financial models are, to use Donna Haraway’s terms, “god-tricks.” They perform omniscience and control over uncertain, complex, and massive markets. They are also embodiments of the ideology that markets can neither be regulated nor planned. These instruments naturalize and enact an imaginary that markets make the best decisions about allocation of value without planning by a state or other organization. This infrastructure for our contemporary noisy trading is not natural or inevitable, however. It was produced by the intersection of neoliberal theory, psychology, and artificial intelligence. If today we swipe and click as a route to imagined wealth, we should ask how we have come to so unthinkingly and unconsciously accept the dictates of finance and technology.

Networked Intelligence

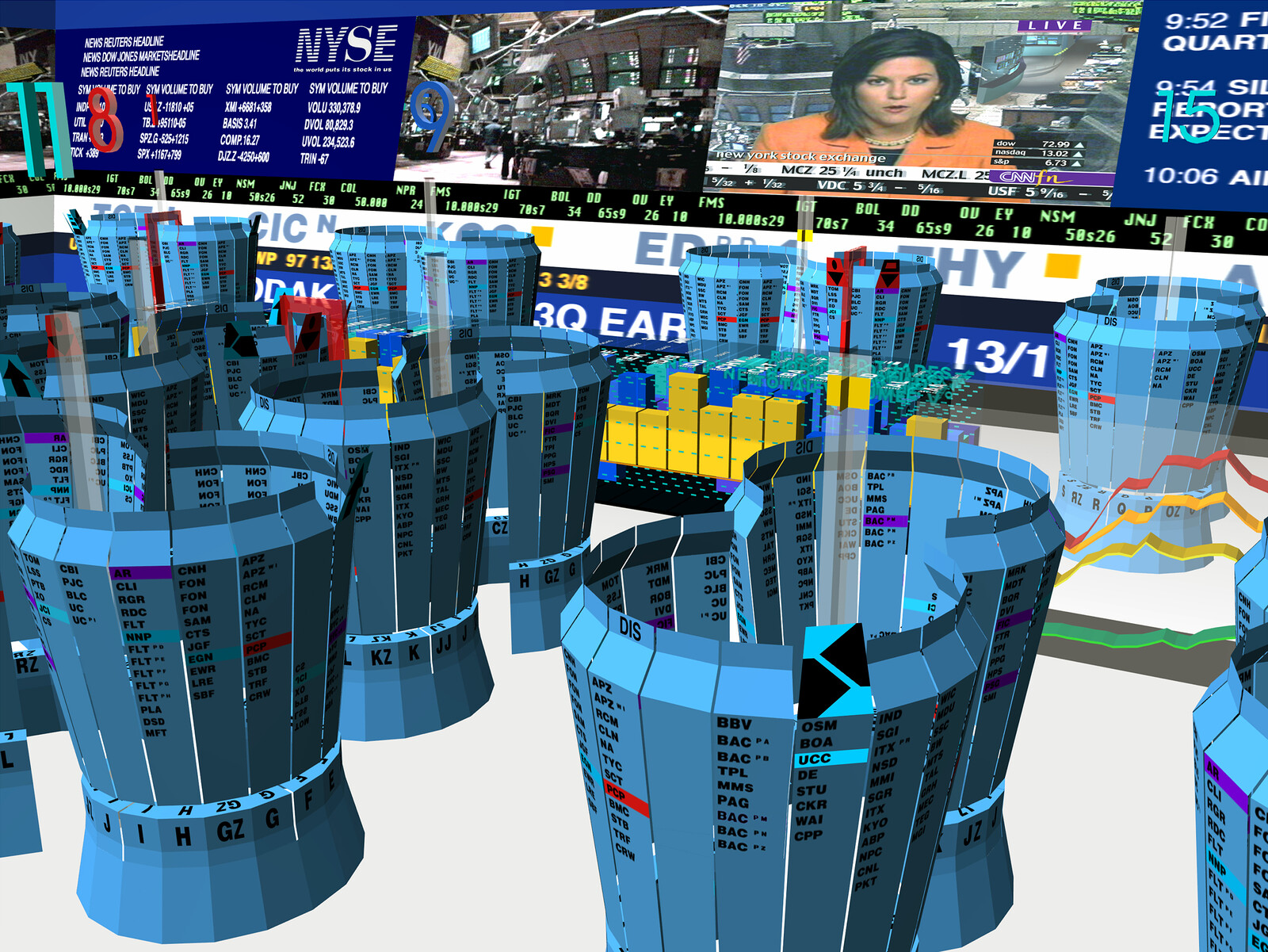

The idea that human judgement is flawed (or corrupt) and that markets could neither be regulated nor fully predicted and planned has long been central to the automation and computerization of financial exchanges. Throughout the middle of the twentieth century, increased trading volumes forced clerks to fall behind on transaction tapes and often omit or fail to enter specific prices and transactions at particular times. Human error and slowness came to be understood as untenable and “non-transparent,” or arbitrary in assigning price.2

In the case of the New York Stock Exchange (NYSE), there were also labor issues. Managers needed ways to manage and monitor labor, particularly lower paid clerical work. As a result, computerized trading desks were introduced to the NYSE in the 1960s. These computerized systems were understood as being algorithmic and rule bound. Officials thought computing would save the securities industry from regulation; that if computers followed the rules algorithmically, there was no need for oversight or regulation.3

This belief in the rationality and self-regulation of algorithms derived from a longer neoliberal tradition that reimagined human intelligence as machinic and networked. According to the Austrian-born economist Friedrich Hayek, writing in 1945:

The peculiar character of the problem of a rational economic order is determined precisely by the fact that the knowledge of the circumstances of which we must make use never exists in concentrated or integrated form, but solely as the dispersed bits of incomplete and frequently contradictory knowledge which all the separate individuals possess. The economic problem of society is thus not merely a problem of how to allocate “given” resources—if “given” is taken to mean given to a single mind which deliberately solves the problem set by these “data.” It is rather a problem of how to secure the best use of resources known to any of the members of society, for ends whose relative importance only these individuals know. Or, to put it briefly, it is a problem of the utilization of knowledge not given to anyone in its totality.4

Human beings, Hayek believed, were subjective, incapable of reason, and fundamentally limited in their attention and cognitive capacities. At the heart of Hayek’s conception of a market was the idea that no single subject, mind, or central authority can fully represent and understand the world. He argued that “The ‘data’ from which the economic calculus starts are never for the whole society ‘given’ to a single mind… and can never be so given.”5 Instead, only markets can learn at scale and suitably evolve to coordinate dispersed resources and information in the best way possible.

Responding to what he understood to be the failure of democratic populism that resulted in fascism and the rise of communism, Hayek disavowed centralized planning or states. Instead, he turned to another model of both human agency and markets. First, Hayek posits that markets are not about matching supply and demand, but about coordinating information.6 And second, Hayek’s model of learning and “using knowledge” is grounded in the idea that networked intelligence is embodied in the market, which can allow for the creation of knowledge outside of and beyond the purview of any individual human.7 This is an intelligence grounded in populations.

Hayek’s idea of environmental intelligence was inherited directly from the work of Canadian psychologist Donald O. Hebb, who is known as the inventor of the neural network model and the theory that “cells [neurons] that fire together wire together.” In 1949, Hebb published The Organization of Behavior, a text that popularized the idea that the brain stores knowledge about the world in complex networks or “populations” of neurons. The research is today famous for presenting a new concept of functional neuroplasticity, which was developed through working with soldiers and other individuals who had been injured, had lost limbs, or had been blinded or rendered deaf by proximity to blasts. While these individuals suffered changes to their sensory order, Hebb noted that the loss of a limb or a sense would be compensated for through training. He thus began to suspect that neurons might rewire themselves to accommodate the trauma and create new capacities.

The rewiring of neurons was not just a matter of attention, but also memory. Hebb theorized that brains don’t store inscriptions or exact representations of objects, but instead patterns of neurons firing. For example, if a baby sees a cat, a certain group of neurons fire. The more cats the baby sees, the more a certain set of stimuli become related to this animal, and the more the same set of neurons will fire when a “cat” enters the field of perception. This idea is the basis for contemporary ideas of learning in neural networks. It was also an inspiration to Hayek, who in his 1952 book The Sensory Order openly cited Hebb as providing a key model for imagining human cognition. Hayek used the idea that the brain is composed of networks to remake the very idea of the liberal subject. Hayek’s subject is not one of reasoned objectivity, but rather a subjective one, with limited information and an incapacity to make objective decisions.

The concept of algorithmic, replicable, and computational decision making that Hayek forwarded in the Cold War was not the model of conscious, affective, and informed decision making privileged since the democratic revolutions of the eighteenth century.8 Hayek reconceptualized human agency and choice neither as informed technocratic guidance nor as the freedom to exercise reasoned decision making long linked to concepts of sovereignty. Rather, he reformulated agency as the freedom to become part of the market or network. He was very specific about the point that economic or political theories based on collective or social models of market making and government were flawed in privileging the reason and objectivity of the few policy makers and governing officials over the many and quelling the ability of individuals to take action. Hayek elaborated that freedom, therefore, was not the result of reasoned objective decision making but freedom from coercion by the state, or exclusion from chosen economic activities and markets. This interpretation of freedom has serious implications for contemporary politics regarding civil rights and affirmative action.9

Machines

Neoliberal theory posited the possibility that markets themselves possess reason or some sort of sovereignty; a reason built from networking human actions into a larger collective without planning, and supposedly, politics. Just as communication sciences and computing adopted models of the human mind, in the postwar period, many human, social, and natural sciences came to rely on models of communication and information related to computing in their attempts at understanding markets, machines, and human minds. Models of the world such as those embedded in game theory reflected emerging ideas about rationality separated from liberal human reason. Managing systems, including political and economic ones, came to be understood as a question of information processing and analysis.10

In 1956 a group of computer scientists, psychologists, and other scientists embarked on a project to develop machine forms of learning. In a proposal for a workshop at Dartmouth College in 1955, John McCarthy labelled this new concept “artificial intelligence.” While many of the participants, including Marvin Minsky, Nathaniel Rochester, Warren McCulloch, Ross Ashby, and Claude Shannon, focused on symbolic and linguistic processes, one model focused on the neuron. A psychologist, Frank Rosenblatt, proposed that learning, whether in non-human animals, humans, or computers, could be modeled on artificial, cognitive devices that implement the basic architecture of the human brain.11

In his initial paper from the Dartmouth program, Rosenblatt details the idea of a “perceptron” and distances himself from his peers. These scientists, he claimed, had been “chiefly concerned with the question of how such functions as perception and recall might be achieved by a deterministic system of any sort, rather than how this is actually done by the brain.” This approach, he argued, fundamentally ignored the question of scale and the emergent properties of biological systems. Instead, Rosenblatt based his approach on theories of networked cognition and neural nets, which he attributed to Hebb and Hayek.12 According to Rosenblatt, neurons are mere switches or nodes in a network that classifies cognitive input, and intelligence emerges only at the level of the population and through the patterns of interaction between neurons.

Grounded as they are in theories of Hebbian networks, contemporary neural networks operate on the same principles, in which groups of nets that are exposed repeatedly to the same stimuli become trained to fire together, and each exposure increases the statistical likelihood that the net will fire together and “recognize” the object. In supervised “learning,” then, nets can be corrected through the comparison of their result with the original input. The key feature of this is that the input does not need to be ontologically defined or represented, meaning that a series of networked machines can come to identify a cat without having to be told what a cat “is.” Only through patterns of affiliation does sensory response emerge.
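The correction-by-exposure described above can be illustrated with a minimal sketch of a single threshold unit trained by an error-correction rule in the spirit of Rosenblatt's perceptron. Everything specific here is an assumption made for illustration: the two numeric "features," the toy data, the learning rate, and the function names are hypothetical and do not come from Rosenblatt's papers. The point is only that the unit is never told what a "cat" is; it is nudged each time its answer disagrees with the label attached to a stimulus.

```python
import random

def train_perceptron(samples, labels, epochs=50, lr=0.1, seed=0):
    """Train one threshold unit with a simple error-correction rule.

    samples: list of feature tuples (hypothetical numeric "stimuli")
    labels:  list of 0/1 targets (1 stands in for "cat")
    """
    rng = random.Random(seed)
    weights = [0.0] * len(samples[0])
    bias = 0.0
    order = list(range(len(samples)))
    for _ in range(epochs):
        rng.shuffle(order)  # repeated exposure, in varying order
        for i in order:
            x, target = samples[i], labels[i]
            activation = sum(w * xi for w, xi in zip(weights, x)) + bias
            output = 1 if activation > 0 else 0
            error = target - output  # zero when the unit already agrees
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

def predict(weights, bias, x):
    """Return 1 if the unit 'fires' for stimulus x, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0

# Toy, linearly separable data (an assumption, not real measurements).
samples = [(0.9, 0.8), (0.8, 0.9), (1.0, 0.7), (0.1, 0.2), (0.2, 0.1), (0.0, 0.3)]
labels = [1, 1, 1, 0, 0, 0]

weights, bias = train_perceptron(samples, labels)
predictions = [predict(weights, bias, x) for x in samples]
```

Nothing in the code defines the category; the weights simply settle into a pattern that separates the stimuli the unit has been exposed to.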

The key to learning was therefore exposure to a “large sample of stimuli,” which Rosenblatt stressed meant approaching the nature of learning “in terms of probability theory rather than symbolic logic.”13 The perceptron model suggests that machine systems, like markets, might be able to perceive what individual subjects cannot.14 While each human individual is limited to a specific set of external stimuli to which they are exposed, a computer perceptron can, by contrast, draw on data that are the result of judgements and experiences of not just one individual, but rather large populations of human individuals.15

For both Rosenblatt and Hayek, as well as their predecessors in psychology, notions of learning forwarded the idea that systems can change and adapt non-consciously, or automatically. The central idea of these models was that small operations done on parts of a problem might agglomerate into a group that is greater than the sum of their parts and solve problems not through representation but through action. Both Hayek and Rosenblatt take from theories of information, particularly from cybernetics, that posit communication in terms of thermodynamics. According to this theory, systems at different scales are only probabilistically related to their parts. Calculating individual components therefore cannot represent or predict the act of the entire system.16

This disavowal of “representation” continues to fuel the desire for ever larger data sets and unsupervised learning in neural nets which would, at least in theory, be driven by the data. Hayek himself espoused an imaginary of this data rich world that could be increasingly calculated without (human) consciousness, while Rosenblatt’s perceptron is the technological manifestation of the reconfiguration and reorganization of human subjectivity, physiology, psychology, and economy that this theory implies.17 Both were a result of the belief that technical decision making not through governments but at the scale of populations might ameliorate the danger of populism or the errors of human judgement. As a result, by the turn of the millennium the neural net became the embodiment of an idea (and ideology) of networked decision making that could scale from within the mind to the planetary networks of electronic trading platforms and global markets.

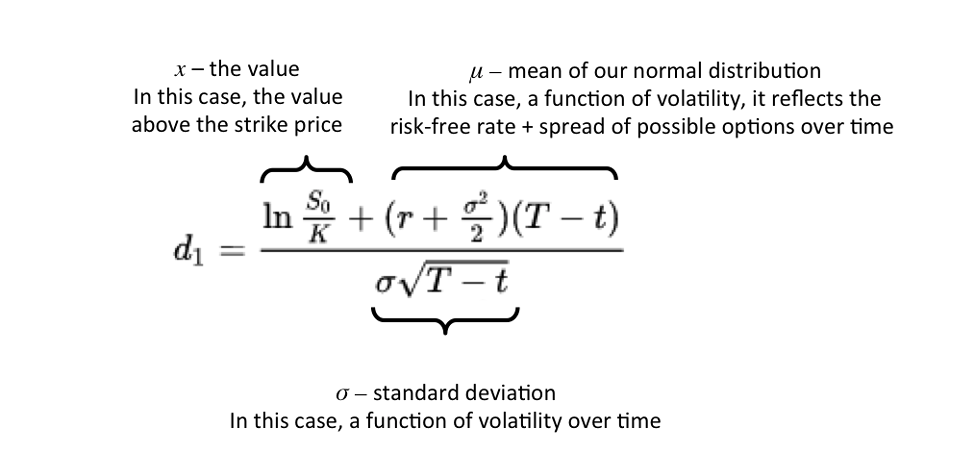

Black-Scholes Model summation. Source: Brilliant.

Derivation

Though it has traditionally been difficult for traders to determine how much the option to purchase an asset or stock should cost, it was widely assumed until the 1970s that the value of an option to buy a stock would necessarily be related to the expected rate of return of the underlying stock itself, which in turn would be a function of the health and profitability of the company that issues the stock.18 This understanding not only presumes an objective measure of value, but also that models themselves represent or abstract from something real out there in the world.

In 1973, Black and his colleagues Myron Scholes and Robert Merton introduced the Black-Scholes Option Pricing Model in order to provide a new way of relating options prices to the future.19 What made this model unique in the history of finance was that it completely detached the price of an option from any expectation about the likely value of the underlying asset at the option maturity date. Instead, the key value for Black and Scholes was the expected volatility of the stock, which meant the movement up and down of the price over time. The expected volatility of a stock was not a function of one’s estimated profitability of the company that issued the stock, but was instead a function of the investment market as a whole.20 The Black-Scholes option pricing model, in other words, was not interested in the “true” value of the underlying asset, but rather in the relationship of the stock to the market as a whole.
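The detachment of price from expectation can be seen in a short sketch of the standard textbook form of the Black-Scholes formula for a European call option on a stock that pays no dividends. The function and parameter names are my own, and the numbers are illustrative assumptions rather than market data. Note what the function takes as input: the current price, the strike, a risk-free interest rate, the time to maturity, and the volatility. The expected return of the stock appears nowhere.

```python
from math import erf, exp, log, sqrt

def norm_cdf(x):
    """Cumulative distribution function of the standard normal."""
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))

def black_scholes_call(spot, strike, rate, volatility, years):
    """Textbook Black-Scholes price of a European call (no dividends).

    spot:       current price of the underlying stock
    strike:     price at which the option lets the holder buy
    rate:       continuously compounded risk-free interest rate
    volatility: annualized standard deviation of the stock's log returns
    years:      time remaining until the option matures
    """
    d1 = (log(spot / strike) + (rate + 0.5 * volatility ** 2) * years) / (
        volatility * sqrt(years)
    )
    d2 = d1 - volatility * sqrt(years)
    return spot * norm_cdf(d1) - strike * exp(-rate * years) * norm_cdf(d2)

# Same stock price, strike, rate, and maturity; only the volatility differs.
calm = black_scholes_call(100, 100, 0.05, 0.10, 1.0)
base = black_scholes_call(100, 100, 0.05, 0.20, 1.0)
noisy = black_scholes_call(100, 100, 0.05, 0.40, 1.0)
```

With every other input held fixed, the option on the more volatile stock is worth more: in this formulation, movement itself, not the prospects of the issuing company, is what gets priced.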

Scholes and Black had begun working together in the late 1960s while consulting for investment firms, which involved applying computers to modern portfolio theory and automating arbitrage.21 Scholes and Black opened their essay “The Pricing of Options and Corporate Liabilities,” in which they introduced their option pricing equation, with a challenge: “If options are correctly priced in the market, it should not be possible to make sure profits by creating portfolios of long and short positions.” In other words, since people do make money, options cannot be correctly priced, and mispricing—that is, imperfect information transmission—must therefore be essential to the operation of markets. This also meant that traders could not, even in principle, “be rational” in deciding on the risk assigned to an option (by, for example, attempting to determine the true value of the underlying asset). As a result, the insights of reasonable traders might matter less in pricing assets than they would in measuring the volatility of a stock.

The Black-Scholes Option Pricing Model effectively extends the assumptions inherent within both neural network theory and neoliberal economic theory at the time to financial instruments that bet on futures. Stocks, they reasoned, behaved more like the random, thermodynamic motions of particles in water than proxies or representations of some underlying economic reality. The market is full of noise, and the agents, the traders within it, do not, cannot know the relationship between the price of a security and the “real” value of the underlying asset. However, if agents recognize the limits of their knowledge, they can focus on what they can know: namely, how a single stock price varies over time, and how that variation relates to the price variations of other stocks. Black and Scholes assumed that all stocks in the market moved independently, and that measures of information like entropy and enthalpy could apply to the way stock prices “signal” each other. Their innovation was to posit that in order to price an option, one needed only to take the current price and the price changes of the asset, and figure out the complete distribution of share prices.22
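What it means to work only from "the price changes of the asset" can be sketched as follows. The first function estimates volatility as the standard deviation of logarithmic price changes, scaled to a year; the 252 trading days per year and the two toy price series are assumptions for illustration. The second function follows the monthly update rule that Black described in 1975, quoted in the notes, in which the previous estimate gets four-fifths weight and the most recent month's actual volatility gets one-fifth. The function names are hypothetical.

```python
from math import log, sqrt
from statistics import stdev

def historical_volatility(prices, periods_per_year=252):
    """Annualized volatility from a series of consecutive prices.

    Uses the sample standard deviation of log returns; the default of
    252 periods per year assumes daily prices (an assumption).
    """
    log_returns = [log(later / earlier) for earlier, later in zip(prices, prices[1:])]
    return stdev(log_returns) * sqrt(periods_per_year)

def update_estimate(previous_estimate, realized_volatility):
    """Blend estimates as in Black's 1975 description: 4/5 old, 1/5 new."""
    return 0.8 * previous_estimate + 0.2 * realized_volatility

# Two toy price series that start and end near the same level.
steady = [100, 101, 100, 101, 100, 101, 100]
jumpy = [100, 110, 95, 112, 90, 108, 97]

steady_vol = historical_volatility(steady)
jumpy_vol = historical_volatility(jumpy)
blended = update_estimate(0.20, 0.30)
```

Neither function asks what the company does or what it is "really" worth; both operate only on the record of how the price has moved.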

Within weeks of the article’s publication, numerous corporations were offering software for such pricing equations.23 This was in part a consequence of the fact that the model joined communications and information theories with calculation in a way that made the equation amenable to algorithmic enactment. As individuals followed Black and Scholes in creating more complex derivative instruments, computers became essential both for obtaining data about price volatility and calculating option prices. An entire industry, and the financial markets of today, were born from this innovation and its new understanding of noise: a literal measure of entropy and variation as in mathematical theories of communication, and an assumption that there are a lot of false or arbitrary signals in the market. As a result, derivative markets have grown massively, by around 25% per year over the last twenty-five years, and now exceed the world’s GDP twenty times.24

There is also a deeply repressed geopolitical history behind these innovations in finance. The derivative pricing equation emerged on the heels of the end of Bretton Woods, civil rights, decolonization, and the OPEC oil crisis, to name just a few of the major global transformations taking place at the time. The Black-Scholes Model can therefore be seen as an attempt to tame or circumvent extreme volatility in politics, currency, and commodity markets. New financial technologies and institutions such as hedge funds were created in order to literally “hedge” bets: to ensure that risks were reallocated, decentralized, and networked. Derivative pricing instruments emerged specifically to deal with volatility and inflation from events such as the OPEC oil and petro-dollar crisis of the 1970s. Through derivative technologies such as short bets, credit swaps, and futures markets, dangerous bets would be combined with safer ones and dispersed across multiple territories and temporalities. Corporations, governments, and financiers flocked to these techniques of uncertainty management in the face of seemingly unknowable, unnamable, and unquantifiable risks.25 The impossibility of prediction, the subjective nature of human decision making, and the electronic networking of global media systems all became infrastructures for new forms of betting on futures while evading the political-economic struggles of the day.

Models and Machines

Neoliberal economics often theorizes the world as a self-organizing adaptive system to counter the idea of planned and perfectly controllable political (and potentially totalitarian) orders. Within this ideology, the market takes on an almost divine, or perhaps biologically determinist, capacity for chance and emergence, but never through consciousness or planning. Evolution is imagined against willed action and the reasoned decisions of individual humans; it is the result of networked learning through markets (or machines).26 This networked “nature” of human subjectivity and intelligence can serve as an argument against historical accountability or structure. If humans are subjective, no one can objectively perceive the structures of society or the market (which are now the same). Therefore, historical or structural injustices cannot be represented or dealt with through conscious planning, only through market actions. The implication is that directed or conscious efforts to shape choices or decisions are seen as a violation of freedom and intelligence; directed planning is an obstacle to the market’s ability to “learn” and evolve.

Emerging out of the historical context of civil rights and calls for racial, sexual, and queer forms of justice and equity, the negation of any state intervention or planning (say affirmative action) has been naturalized in the figure of the neural net and the financial instrument. We have become attuned to this model of the world where machines and markets are syncopated with one another. These models, however, might also have the potential to remake our relations with each other and the world. As cultural theorist Randy Martin has argued, rather than separating itself from social processes of production and reproduction, algorithmic and derivative finance actually demonstrates the increased inter-relatedness, globalization, and socialization of debt and precarity. By tying together disparate actions and objects into a single assembled bundle of reallocated risks to trade, new market machines have made us more indebted to each other. The political and ethical question thus becomes how we might activate this mutual indebtedness in new ways, ones that are less amenable to the strict market logics of neoliberal economics.27

The future lies in recognizing what our machines have finally made visible, and what has perhaps always been there: the socio-political nature of our seemingly natural thoughts and perceptions. Every market crash, every sub-prime mortgage event, reveals the social constructedness and the work (aesthetic, political, economic) it takes to maintain our belief in markets as forces of nature or divinity. And if they are not aesthetically smoothed over through media and narratives of inevitability, these events also make it possible to recognize how our machines have linked so many of us together in precarity. The potential politics of these moments has not yet been realized, but there have been efforts, whether in Occupy, or more recently in movements for civil rights, racial equity, and environmental justice such as Black Lives Matter or the Chilean anti-austerity protests of 2019, to name a few. If all computer systems are programmed, and therefore planned, we are forced to contend with the intentional and therefore mutable nature of how we both think and perceive our world.

Fischer Black, “Noise,” The Journal of Finance 41, no. 3 (1986).

Devin Kennedy, “The Machine in the Market: Computers and the Infrastructure of Price at the New York Stock Exchange, 1965–1975,” Social Studies of Science 47, no. 6 (2017).

Ibid.

Friedrich Hayek, “The Use of Knowledge in Society,” The American Economic Review 35, no. 4 (September 1945): 519-20. Italics mine.

Ibid.

A critical first step, as historians such as Philip Mirowski have noted, towards contemporary notions of information economies. Philip Mirowski, Machine Dreams: Economics Becomes a Cyborg Science (Cambridge: Cambridge University Press, 2002); “Twelve Theses Concerning the History of Postwar Neoclassical Price Theory,” History of Political Economy 38 (2006).

“The whole acts as one market, not because any of its members survey the whole field, but because their limited individual fields of vision sufficiently overlap so that through many intermediaries the relevant information is communicated to all.” Hayek, “The Use of Knowledge in Society,” 526.

Paul Erickson et al., How Reason Almost Lost Its Mind: The Strange Career of Cold War Rationality (Chicago: University of Chicago Press, 2015).

Friedrich Hayek, The Constitution of Liberty, 2011 ed. (Chicago: University of Chicago Press, 1960).

Orit Halpern and Robert Mitchell, The Smartness Mandate (forthcoming 2023).

Frank Rosenblatt, Principles of Neurodynamics: Perceptrons and the Theory of Brain Mechanisms (Washington, D.C.: Spartan Books, 1962).

Ibid., 5. Italics mine.

Frank Rosenblatt, Principles of Neurodynamics: Perceptrons and the Theory of Brain Mechanisms (Washington, D.C.: Spartan Books, 1962), 386-408.

Frank Rosenblatt, “The Perceptron: A Probabilistic Model for Information Storage and Organization in the Brain,” Psychological Review 65, no. 6 (1958): 288-89.

Ibid., 19-20, italics my emphasis.

For more on the influence of cybernetics and systems theories on producing notions of non-conscious growth and evolution in Hayek’s thought, see Paul Lewis, “The Emergence of ‘Emergence’ in the Work of F.A. Hayek: A Historical Analysis,” History of Political Economy 48, no. 1 (2016); Gabriel Oliva, “The Road to Servomechanisms: The Influence of Cybernetics on Hayek from the Sensory Order to the Social Order,” Research in the History of Economic Thought and Methodology (2016): 161-198.

He was apparently fond of quoting Alfred North Whitehead’s remark that “it is a profoundly erroneous truism… that we should cultivate the habit of thinking what we are doing. The precise opposite is the case. Civilization advances by extending the number of important operations we can perform without thinking about them.” Alfred Moore, “Hayek, Conspiracy, and Democracy,” Critical Review 28, no. 1 (2016): 50. I am indebted to Moore’s excellent discussion for much of the argument surrounding Hayek, democracy, and information. This quote is from Hayek, “The Use of Knowledge in Society.”

For an account of earlier nineteenth and twentieth century models for pricing options, see MacKenzie, 37-88.

The three men most often credited with the formalization of the derivative pricing model are Black, an applied mathematician who had been trained by artificial intelligence pioneer Marvin Minsky; Myron Scholes, a Canadian-American economist from University of Chicago who came to MIT after his PhD under Eugene Fama; and Robert Merton, another economist trained at MIT. Collectively they developed the Black-Scholes-Merton derivative pricing model. While these three figures are hardly singularly responsible for global financialization, their history serves as a mirror to a situation where new computational techniques were produced to address geo-political-environmental transformation. See George G. Szpiro, Pricing the Future: Finance, Physics, and the 300 Year Journey to the Black-Scholes Equation, vol. Kindle Edition (New York: Basic Books, 2011), 116-17.

As Black noted in 1975, “[m]y initial estimates of volatility are based on 10 years of daily data on stock prices and dividends, with more weight on more recent data. Each month, I update the estimates. Roughly speaking, last month’s estimate gets four-fifths weight, and the most recent month’s actual volatility gets one-fifth weight. I also make some use of the changes in volatility on stocks generally, of the direction in which the stock price has been moving, and of the ‘market’s estimates’ of volatility, as suggested by the level of option prices for the stock” (Black 1975b, p. 5, cited in MacKenzie, 321, note 18).

A “portfolio” is a collection of multiple investments, which vary in their presumed riskiness, and which aim to maximize profit for a specific level of overall risk; “arbitrage” refers to purportedly risk-free investments, such as the profit that can be made when one takes advantage of slight differences between currency exchanges (or in the price of the same stock) in two different locations.

Robert Merton added the concept of continuous time and figured out a derivation equation to smooth the curve of prices. The final equation is essentially the merger of a normal curve with Brownian motion. Satyajit Das, Traders, Guns, and Money: Knowns and Unknowns in the Dazzling World of Derivatives (Edinburgh: Prentice Hall: Financial Times, 2006), 194-95.

MacKenzie, An Engine, Not a Camera: How Financial Models Shape Markets, 60-67.

Michael Schaus, “Narrative and Value: Authorship in the Story of Money,” Proceedings of RSD7, Relating Systems Thinking and Design 7, 23-26 Oct 2018, Turin, Italy, fig. 18.

It is worth noting that the Black-Scholes derivative pricing equation, which inaugurated the financialization of the global economy, was introduced in 1973. For an excellent summary of these links and of the insurance and urban planning fields, see Kevin Grove, Resilience (New York: Routledge, 2018).

Joshua Ramey, “Neoliberalism as a Political Theology of Chance: The Politics of Divination,” Palgrave Communications (2015).

Randy Martin, “What Difference Do Derivatives Make? From the Technical to the Political Conjuncture,” Culture Unbound 6 (2014): 189-210.

On Models is a collaboration between e-flux Architecture and The Museum of Contemporary Art Toronto.

Research for this article was supported by the Mellon Foundation, Digital Now Project, at the Canadian Centre for Architecture (CCA) and by the staff and archives at the CCA. Further funding was given by the Swiss National Science Foundation, Sinergia Project, Governing Through Design.