It is for the sake of the present and of the future that they are willing to die.

—Frantz Fanon1

But if we rediscover time beneath the subject, and if we relate to the paradox of time those of the body, the world, the thing, and others, we shall understand that beyond these there is nothing to understand.

—Maurice Merleau-Ponty2

As institutions prepare for economies of the future, there is an increased interest in identifying new developmental pathways by collecting large quantities of data to model human behavior. Higher education institutions in the United Kingdom, in particular, have become increasingly reliant on technological solutions to achieve developmental targets. New lecture recording software, campus-owned data centers, sophisticated student tracking and surveillance systems, biometrics, and other data-driven processes are being implemented throughout the UK in hopes of improving operational efficiency, reducing economic strain, and increasing what is being described as student satisfaction.

The intersection of student satisfaction and data modeling is a crucial element in institutional strategies for self-improvement. For students, this means the transfiguration of their campus experiences into data on recruitment, retention, and employability. For the educator, knowledge-sharing and care are grossly misaligned with the arbitrary values of service provision, such as teaching quality and post-study work opportunities for students. For the staff administrator, day-to-day operations are revised as measures of competency against arbitrarily-established labor targets. This is not to mention the most precarious of university labor, the cleaners, security, and front desk personnel, who remain untranslatable to the institution in terms of happiness and wellbeing.

Approaches to data modeling are thought to widen student participation while reducing “data deficits,” or perceived gaps between institutional operation, economic performance, and student expectation. These deficits are believed to impede the decision-making capacity of the institution, which is increasingly organized around the convergence between data, student satisfaction, and economic vitality. For Black and ethnic minority students, staff, and educators, data deficits translate into the day-to-day experiences of racism and racialization on campus. According to a 2019 study conducted by Goldsmiths, University of London titled “Insider-Outsider: the role of race in shaping the experiences of black and minority ethnic students,” although almost half of Goldsmiths’ student body identifies as Black or ethnic minority (BAME), over a quarter (26%) have frequent experiences of overt racism from white students and staff, including the use of racial slurs and the questioning of their nationalities due to skin color.3 These aggressions, according to the report’s author Sofia Akel, place BAME students at risk in an environment that has promised a commitment to equitable learning, safety, and care. This is in addition to research commissioned by the University and College Union (UCU), which found that BAME university staff face a 9% pay gap compared to their white peers, rising to 14% for Black staff.4

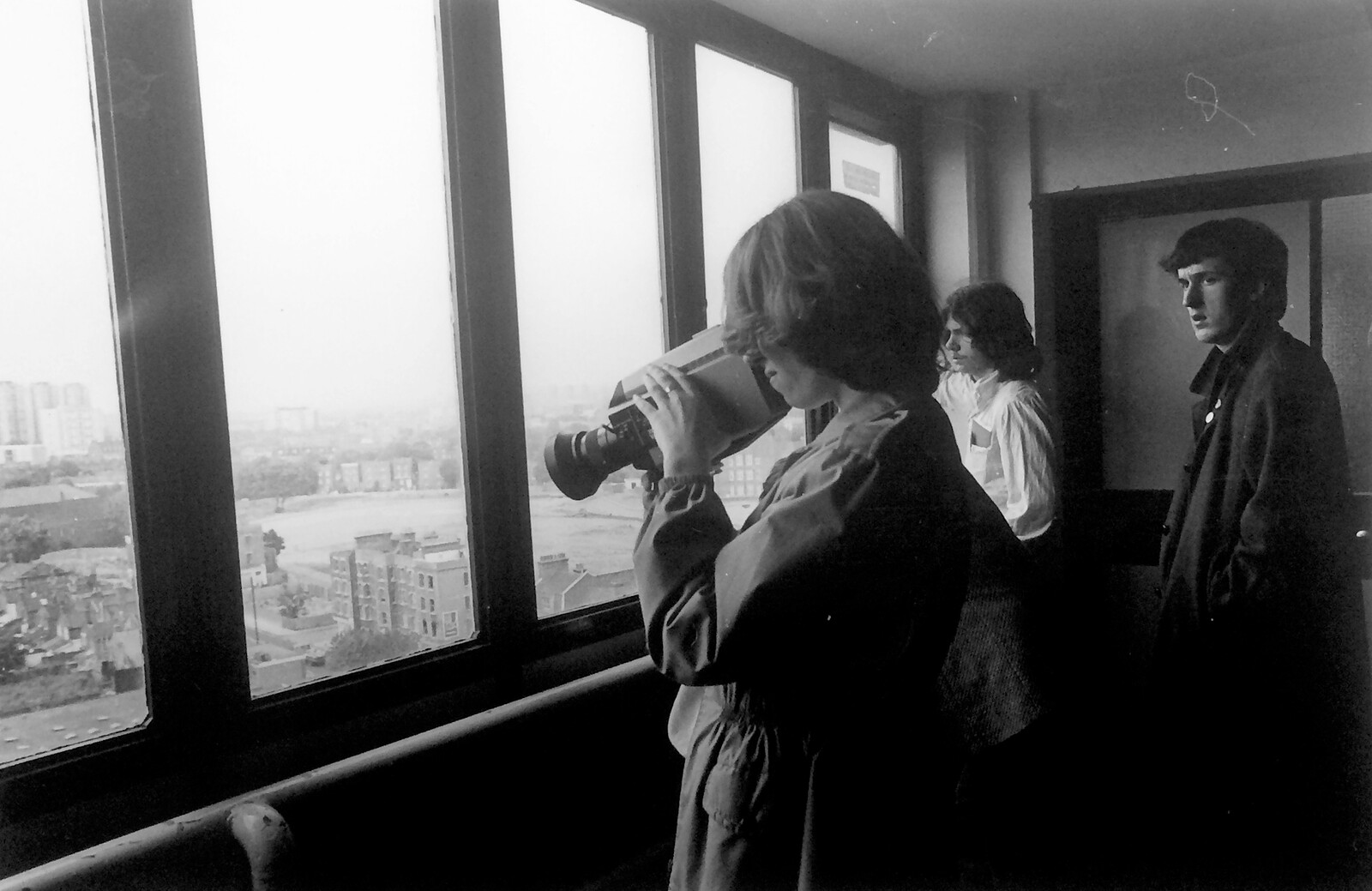

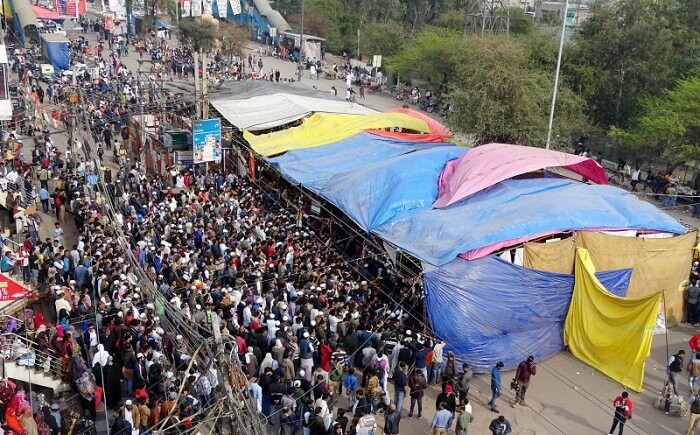

The “Insider-Outsider” and UCU reports challenge narratives of universal care and the notion of equitable learning spaces, as promoted by many UK higher education institutions. The report was published just weeks following a 137-day student-led occupation of Deptford Town Hall, the main administration building at Goldsmiths.5 The occupation, organized by Goldsmiths Anti-Racist Action (or GARA), called for a collective challenge to institutional racism and for the transformation of the campus into sites of anti-racist action, including mandatory anti-racist training for all staff and senior managers; cultural competency training; money for reparative justice; and public conversations with local communities to decide what should be done with existing statues of slave owners and colonialists on campus. GARA ended the occupation after a series of their demands were met by the senior management.

While the day-to-day experiences of racialized individuals in higher education are indicative of a continual gap in wellness, retention, and attainment, institutions such as Goldsmiths have become increasingly reliant on more universal technological solutions, as demonstrated by algorithmically-driven timetabling systems and recruitment projections. Investments in data modeling are thought to provide a practical tutorial on the diversification of student experience as well as institutional strategic planning. They are abstemious with the use of languages that seek to describe spaces of learning and the bodies within them as potential sites of future value, thereby subsuming the racialized experiences of BAME students and staff into metrics and the mitigation of excess liability. Experiences of racism are at odds with overarching narratives of satisfaction and equitable participation. The languages of wellbeing, as owned and managed by institutions, have sought participation towards a solution in the racialized individual that is willing to offer themselves up to analysis in the form of increased surveillance, questionnaires, and surveys. Data models, as such, supply the necessary tools by which racial contingency can be translated into future risk scenarios.

While data modeling might support the development of a universal language by which group and individual experience are organized, it can, even inadvertently, reinforce existing racial architectures. Data also constitutes the very foundation of a neoliberal expansion that lives in close kinship with the techno-racial imaginary. It is here, at the intersection of student satisfaction and data, that models gain the power of speculation as they collapse future human variation into a racialized present. Throughout history, in the assignment of labels from feature variables, the data model has produced false equivalencies between data deficits and taxonomic group organization. A lack of commitment towards a greater understanding of the model’s racialized historicity leaves contemporary data models, such as machine learning models, vulnerable to outputs that reduce the potential for self-determinism and human variation.

On the other hand, the entanglements between data, race, and modeling can be viewed as a speculative exercise in the assignments of affirmative human value. I do not seek to suggest that an empirically-driven understanding of institutional practices is always in direct relation to racial segregation. To the contrary, any attempt at the empirical modeling of institutional spaces is foremost an engagement with, as Kara Keeling has argued, the temporality of Black political possibility. In this sense, terms of possibility locate a space where the Black body can break from the operations of history to articulate something otherwise within the socio-technical imaginary. This provocation emerges in the potential to dislodge the Black body from the languages of institutional speculation, and instead introduce new explorations towards a more affirmative Black future. The ultimate aim is to shift the racialized individual away from the stereoscopic image of categorical difference and move towards a non-representational mode of self-determinism from within or outside of the institutional structure.

Targets inspire us toward pattern recognition

Machine learning models use data for the process of self-learning, which is organized by a two-step process: learning (or training) and classification. Training data typically consists of “tuples” (also known as samples, examples, instances, data points, or objects). Tuples constitute database records, and are initially derived from previously aggregated data sets. Tuples are also assigned to output classes. Once a tuple has been given an identity (or a specific class), it is referenced by the algorithm as input data. The algorithm is then instructed to classify the tuple based on its initial input. For instance, an input tuple:

var named = (E, “Enrolled”);

var named = (P, “Fees Paid”);

should match the output classification:

var unnamed = (Answer: E + P, Message: “Student”);
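The two-step process described above can be illustrated with a minimal Python sketch. The record fields (enrolled, fees paid), the class labels, and the counting "learner" are illustrative assumptions, not a description of any institution's actual system.

```python
from collections import Counter, defaultdict

def train(labelled_tuples):
    """Learning step: for each input tuple, remember its most common label."""
    counts = defaultdict(Counter)
    for features, label in labelled_tuples:
        counts[features][label] += 1
    return {features: c.most_common(1)[0][0] for features, c in counts.items()}

def classify(model, features, default="Unknown"):
    """Classification step: look up the class learned for this input tuple."""
    return model.get(features, default)

# Hypothetical training data: (enrolled, fees_paid) -> output class.
training_data = [
    ((True, True), "Student"),
    ((True, True), "Student"),
    ((True, False), "Not a student"),
    ((False, False), "Not a student"),
]

model = train(training_data)
print(classify(model, (True, True)))   # a tuple seen during training
print(classify(model, (False, True)))  # a tuple never seen during training
```

A tuple that never appeared in training falls back to the default class, which is one simple way of showing how a model's categories are bounded by the records it was given.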

To achieve successful classification, various training methods are used depending on performance objectives. In supervised learning, tuples are “supervised” or forced into appropriate output classifications. With unsupervised learning (or clustering), on the other hand, output classes are left unlabeled. In unsupervised learning, algorithms are instructed to make best guesses and repeat the process until tuple classes are understood. Unlike supervised examples, unsupervised examples are more universal, since prior classifications have not been determined. This is beneficial in problems that require the grouping (or clustering) of similar data, in situations where data in complex environments needs to be mapped onto two- or three-dimensional visualizations, or to gain deeper insights from database records. Another widely used learning method is reinforcement learning, which is a beneficial technique in instances where decision-based actions are necessary. Reinforcement learning algorithms discover the optimal outcome by trial and error, in contrast to the predefined set of outcomes in supervised learning. However, complications arise when output quality can only be assessed indirectly or longitudinally before any clarity of results can emerge. If the classifier has a high success rate, then it is used on future unknown (or previously unseen) data. For example, data from previous student applications, such as race, socioeconomic background, place of residence, and so forth, can be used to predict rates of retention for future students.

In complex environments, the target function is an important component of machine learning algorithms. The target function is the formula that an algorithm feeds data to in order to calculate predictions. It is useful for real-world machine learning applications, where, unlike in training sets, outputs are unknown, or in situations where accurate performance is difficult to replicate outside of controlled environments. In controlled environments, performance accuracy is likely to be higher than in real-time results. By analyzing large quantities of data related to a specified problem, an algorithm can detect consistencies in the data, while also gaining understanding of previously unspecified rules about how the subject under study operates. The success of this operation determines the learning capability of the algorithm, which is fed multiple tuples in multiple cycles until desired rates of successful classification are achieved.

According to computer scientists Jiawei Han and Micheline Kamber, once an algorithm is trained in a complex environment, new rules and patterns can be established to either classify or pre-empt similar phenomena.6 For example, a financial institution might use a data model to solve the problem of “creditworthiness” by classifying consumers based on income, previous loan acceptance, immigration status, length of residence, or other variables that are understood to influence the likelihood of default. Based on analysis, a number of outputs are possible: “accepted with x or y% interest rate,” “denied,” or “more information needed.” Similarly, a national security agency that has established the problem of “radicalism” might use facial recognition, social media posts, and other behavioral data to determine what persons might be deemed “suspicious,” a “threat,” or “sympathetic” to radicalism against state power.

Machine learning algorithms approximate target functions from noisy data, which is commonly thought to negatively impact performance. This is significant, considering the general understanding of noise as data deemed irrelevant or inconsequential to the computational problem at hand. While some algorithms are sophisticated enough to detect noise, they remain sensitive to underfitting and overfitting. Underfitting occurs when the learning algorithm can neither model the training data nor generalize to new samples for data representation and classification. This is particularly relevant in environments that are overly complex, where data aggregation is too expensive, or in cases where the best approach to the problem is unknown. Underfit models tend to perform poorly, for example, when a linear model is applied to non-linear data. In this case, the model is too simple to explain the variance in the data, resulting in higher degrees of bias. Overfitting, on the other hand, takes place when a target function is fit too closely to a limited quantity of data, resulting in a model that also matches the error within that data. Overfitting is problematic when attempting to find patterns or develop elaborate theorems from the data, as it can reduce predictive power and accuracy. With overfitting, what might appear to be a pattern in the data might in reality be a chance occurrence, particularly when the model is applied to real-world problems.
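The contrast between underfitting and overfitting can be sketched with a small, self-contained Python example. The data points, the fixed noise values, and the two toy models (a straight line and a nearest-neighbor "memorizer") are assumptions chosen for illustration only.

```python
def mse(predict, xs, ys):
    """Mean squared error of a prediction function on (xs, ys)."""
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def fit_line(xs, ys):
    """Least-squares straight line: too simple for curved data (underfit)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept

def fit_memorizer(xs, ys):
    """Returns the stored value of the nearest training point (overfit):
    it reproduces the training noise exactly."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]

# Hypothetical non-linear data: y = x^2 plus small fixed "noise".
train_x = [-2, -1, 0, 1, 2]
train_y = [4.3, 0.8, 0.1, 0.7, 4.2]
test_x = [-1.6, -0.4, 0.6, 1.4]
test_y = [x ** 2 for x in test_x]

line = fit_line(train_x, train_y)
memorizer = fit_memorizer(train_x, train_y)

line_train_error = mse(line, train_x, train_y)           # high: underfit
memorizer_train_error = mse(memorizer, train_x, train_y) # zero: fits the noise
memorizer_test_error = mse(memorizer, test_x, test_y)    # worse on unseen data
```

The line cannot follow the curve even on the data it was fit to, while the memorizer is perfect on its training data and degrades on new samples.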

The Goldsmiths Students’ Union (GSU) boycott of the United Kingdom’s National Student Survey (NSS) is a striking example of potential overfitting and underfitting.7 The NSS is an annual survey of students across the United Kingdom, commissioned by the Office for Students (OfS) on behalf of UK funding and regulatory bodies. Students are asked to give collective voice to their experiences at UK higher education institutions to help “shape the future” of their courses.8 The survey promotes anonymity and honesty, and is governed by what NSS describes as a “50% response rate with at least ten students responding” to ensure quality before publication. Although the survey promotes honesty, it is unclear on what criteria a 50% response rate by at least ten students is based.

Prompted by what is described on their website as “a flawed and arbitrary survey, which has been discredited by the Royal Statistics Society as a way to measure a university student experience,” the GSU rejected Goldsmiths’ (University of London’s) use of NSS data to determine student course satisfaction. GSU posits that the data is instead exclusionary since it is based only on students in their final year. They also argue that while the data might appear to reveal crucial information about student wellbeing, they are, to the contrary, of interest primarily for the university’s own ranking targets as compared to other institutions. Even more so, GSU questions the value of increased university rankings that compare institutions based on categorical labels, like “Course satisfaction,” “Teacher quality,” or “Career after six months,” without a more comprehensive understanding of local conditions that might impact the relationship between these labels and student experience, such as an atmosphere of racism, ageism, homophobia, xenophobia, or misogyny on campuses. The boycott effectively reduces the amount of data fed into the survey, and data that is submitted is not guaranteed to be accurate.

Data analysts have long addressed the problem of underfitting and overfitting through temporal mathematics. Statistically, although data might be overfit or underfit, errors or distortions in present datasets can be used as intelligence for future models. In these cases, emphasis is placed on historical data sets. New thresholds are then placed on the hybrid past-present data to account for present error. The Guardian University Guide 2020, an annual ranking of university data in the United Kingdom, illustrates this maneuver:

Not every university is in the overall table. Some specialist institutions teach very few subjects so we can’t rank them alongside more general universities, but they will still appear in the subject tables. There are a few gaps in the columns, where data is missing. There are various reasons for this: one of them is a partial student boycott of the National Student Survey last year. Where data is missing, the Guardian score has been calculated based on previous performance for that metric, and the remaining measures. To be listed at all, a university cannot be missing more than 40% of its data.9
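The maneuver described in the quotation can be approximated in a short Python sketch. The metric names and values are hypothetical, and the rule shown here (carry forward last year's value, drop the institution when more than 40% of metrics are missing) is a simplification of the Guardian's stated method, which also draws on the remaining measures.

```python
def fill_from_history(current, previous, max_missing=0.4):
    """Fill gaps in this year's metrics with last year's values.

    Returns None when the share of missing metrics exceeds max_missing,
    mirroring the 40% listing rule quoted above.
    """
    missing = [name for name, value in current.items() if value is None]
    if len(missing) / len(current) > max_missing:
        return None
    return {
        name: previous.get(name) if value is None else value
        for name, value in current.items()
    }

# Hypothetical scores; None marks a metric lost to the survey boycott.
previous_year = {"satisfaction": 75, "teaching": 78, "careers": 72}
boycotted = {"satisfaction": None, "teaching": 80, "careers": 70}
too_sparse = {"satisfaction": None, "teaching": None, "careers": 70}

filled = fill_from_history(boycotted, previous_year)
excluded = fill_from_history(too_sparse, previous_year)
```

In the first case the absent present is silently replaced by the past; in the second the institution disappears from the table altogether.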

Temporal manipulation allows for speculation on any number of observable or non-observable gaps in the data. Variables, in these cases, are treated as if they are explicit numbers. By treating variables in this way, a range of problems can be solved in a single calculation. In basic mathematics, a simple problem can be solved by replacing a variable with its respective coefficient of the equation. In more complex calculations, however, variables are symbols that represent mathematical objects—either a single numeral, vector, or matrix—that carry their own set of complex computations reduced to a single alphabetic character.

Gaining control over variability is a crucial exercise for data analysts that seek to reduce negative risk factors. For this, analysts might turn to reinforcement learning or continuously valued learning algorithms. Reinforcement learning is useful for the assignment of threshold values, which can be thought of as a boundary or line that separates data of interest from what I argue is “Othered data,” or data valued as inconsequential for a specific computational problem. Othered data are variable inputs, such as the fragments of human behavior that live outside the desired population. Depending on the established problem, a desired population might be measured in terms of an acceptable rate of retention, or in some cases, exclusion from the process. In the former, to achieve a more desirable population, institutions might aim to reduce data deficits, as mentioned above. This would mean that those in the population that are not aligned with thresholds of achievement or “satisfaction” might be singled out or targeted for future action. In the latter, as is the case with strategies such as UK Prevent, the Othered population attains a less desirable designation and is targeted for removal from participation.10
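As a rough illustration of a threshold acting as a boundary, the following Python sketch partitions a set of scores into data of interest and what is called here Othered data. The record names, scores, and the threshold value are all hypothetical, and the sketch shows only the boundary itself, not how a learning algorithm would arrive at it.

```python
def split_by_threshold(scores, threshold):
    """Separate records at or above the threshold from those below it."""
    of_interest = {k: v for k, v in scores.items() if v >= threshold}
    othered = {k: v for k, v in scores.items() if v < threshold}
    return of_interest, othered

# Hypothetical "satisfaction" scores for three anonymized records.
scores = {"record_a": 0.82, "record_b": 0.41, "record_c": 0.67}
of_interest, othered = split_by_threshold(scores, threshold=0.6)
```

Moving the threshold by a small amount changes who falls on which side of the line, which is why the choice of value is never a neutral step.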

Unlike many notions of exclusion like clustering and classification that seek to single out data based on either a categorical or discrete set of characteristics, reinforcement learning is an area of machine learning concerned with the assignment of cumulative rewards for actions taken in an environment. Reinforcement learning enables the generalization of continuous variables in the data, which are variables that can keep counting over time. Continuous variables can be infinite, without ending. Variables can also take many shapes, as long as the values can be assigned measure. Whereas a discrete variable, say the number of university students, is 8,523, a person’s age in years could be 17 years, 8 months, 3 days, 6 hours, 12 minutes, 2 seconds, 1 millisecond, 8 nanoseconds, 99 picoseconds, and so on. Someone’s weight could be 149.12887462572309874896232… kg, and counting. Accounting for continuous variability is advantageous in reinforcement learning, since variables require less specificity in more dynamic environments. From a modeling perspective, reinforcement learning helps simplify assumptions about data structures. Such models hold the statistical power to identify hidden relationships between two variables, which can be arbitrary or specified. As a result, reinforcement learning models are less dependent on the accuracy of variable quantities. Instead, they focus on finding a balance between exploiting current knowledge and exploring unknown patterns or correlations. Reinforcement learning is well suited for problems that include long-term versus short-term reward trade-offs or risk assessments (for instance, timetabling, space allocation, and student recruitment targeting in the university environment; and robot control, telecommunications, and AlphaGo in computational environments).
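The balance between exploiting current knowledge and exploring unknown options can be sketched with an epsilon-greedy strategy, one common textbook approach among many. The three "actions," their fixed reward values, and the parameter settings below are assumptions made so the example stays small and checkable; real environments return noisy rewards.

```python
import random

def epsilon_greedy(rewards, steps=200, epsilon=0.1, seed=0):
    """Learn reward estimates for each action by trial and error.

    With probability epsilon a random action is explored; otherwise the
    action with the highest current estimate is exploited.
    """
    rng = random.Random(seed)
    counts = [0] * len(rewards)
    estimates = [0.0] * len(rewards)

    def pull(arm):
        counts[arm] += 1
        # Incremental running mean of the rewards observed for this action.
        estimates[arm] += (rewards[arm] - estimates[arm]) / counts[arm]

    for arm in range(len(rewards)):  # try every action once to begin
        pull(arm)
    for _ in range(steps - len(rewards)):
        if rng.random() < epsilon:
            arm = rng.randrange(len(rewards))  # explore
        else:
            arm = max(range(len(rewards)), key=lambda a: estimates[a])  # exploit
        pull(arm)
    return estimates, counts

# Hypothetical fixed rewards for three possible actions.
estimates, counts = epsilon_greedy([0.2, 0.5, 0.8])
best_arm = max(range(len(estimates)), key=lambda a: estimates[a])
```

The cumulative reward is accumulated action by action, without any labeled "correct" answer being supplied in advance.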

Recognizing Patterns

Despite variability, models rely on the recognition of patterns in data. This assists in the production of representations, such as spatial and temporal data visualizations, and multimedia or textual graphs. In statistics, pattern recognition models are crafted around dynamic rule sets, and are concerned with the discovery of regularities in data, which are then classified into discrete classifications. Tuple classifications are known in advance, and are sometimes specified after initial training and inspection. Pattern recognition may also utilize machine learning approaches to optimize the function of adaptive models; and in many cases, tuples are condensed and simplified to increase algorithmic efficiency and processing speeds. Pattern recognition models use machine learning techniques to increase the field of vision by subsuming randomness, or the contingency of variation, into a generalized group of patterns.

An essential function of pattern recognition is the ability to make “nontrivial” predictions from new data sets. Data can also be modulated between black box patterns (structures are incomprehensible and thus hidden from view) and transparent box patterns (which reveal explicit structures). It is assumed that key insights can be inferred from either method. However, Han and Kamber note that not all inferences are of interest to researchers.11 They argue that this raises serious concerns for data mining, and that for a pattern to be “interesting,” it must generate knowledge that satisfies the following criteria: (1) the pattern is easily understood by humans; (2) it is valid on new or training data with some certainty; (3) it is potentially useful; and (4) it is novel. Han and Kamber’s appeal to interest as insight reveals the fragility of machine perception as a function of human desire and expectation. Han and Kamber blur the relation between human and machine as an activity of self-interest diluted under the logics of comprehension. Pattern recognition models, as with similar approaches, are what bring the Other into view. They are methods of establishing variable lines of normality for institutional conformity along with the admission of individuals based on the successful allegiance to power and the abstraction of quantitative analysis.

A powerful component to this method is its sensitivity to the relations between seemingly independent objects and variables. Take Stochastic Pattern Theory (SPT), a revised approach to pattern recognition that identifies the hidden variables of a data set using real-world, real-time data rather than artificial information. SPT champions David Mumford and Agnès Desolneux draw on Ulf Grenander’s groundbreaking work in pattern theory to argue for a move away from traditional methods in pattern recognition to account for what they call meta-characteristics.12 This approach is applicable to facial recognition and other algorithms where recognizable patterns are desired. These meta-characteristics are motivated by the identification of cross-signal patterning that correlates distinct patterns or signals within either single or multiple systems. While data denotes the information contained within a signal, signals represent the way we communicate (for instance, by speech, a transaction, where we travel and how often, what we read, how often we attend classes, a hand gesture, or other body languages). SPT models are thought to improve operational efficiency by offering, say, a security agency a comprehensive study on the random movements of their targets. For service organizations like higher education institutions faced with “chaotic” student behavior, SPTs promote operational efficiency. Although distortions or misrepresentations in stochastic models are difficult to trace, for instance when assigning a smile, grin, or grimace to an individual’s face, Mumford and Desolneux argue that by modeling the cross-pollination of distinct attributes, one can derive practical insights from what may initially appear to be noise or variability. As such, an SPT is less reliant on error in the present, as any distortion in results can be used to improve future stochastic models. In this way, predictive models become champions of economies of risk and future scenario building. They are staged as the vanguards of population control reinforced by the power of mathematical assumption, where the generalization of discrete desires, behaviors, and movements is put into service of capital or operational gain.

The attributes recently sprung from the model ask to be interrupted

While models are useful for data analysis in the present-day, they derive from early experiments in artificial self-learning. Arthur Samuel, an early pioneer in gaming and machine learning research, introduced the Checkers-Playing Program in 1959, one of the first successful self-learning computer models. The model was successful at evaluating the dynamic spatial positions of distinct entities on closed grids, much like the movement of checkers pieces on the board game. Unlike the Checkers board game, however, the pieces from Samuel’s model were semi-autonomous. The pieces were governed by a set of parameters that could be modified depending on the movement of each piece and the spatial relationships between them. The program simulated the movement and anticipation of relations within a closed environment, with each piece governed by its own set of parameters, as well as those of the collective. As such, the final outcome was an amalgamation of both self- and collectively-determined relations. The learning procedure was iterative, and could be optimized with each cycle to compensate for any errors (which were seen to be beneficial to the overall outcome, since error provided specific insight into how parameters could be adjusted to reach the specified hypothesis). As Richard Sutton notes, Samuel’s program was successful in its capacity to self-learn to the point where it gained enough knowledge of an overall system to play against a human being.13

Similar to Samuel’s Checkers-Playing Program is John Holland’s 1985 Bucket Brigade, an adaptive “classifier system” where conflict resolution is carried out by competitive action. Like the Checkers-Playing Program, specific rules in the Bucket Brigade were dynamically adjusted in iterative cycles based on the movement of semi-autonomous entities. The Bucket Brigade was less dependent on the final collective outcome, and more concerned with historical movement and future interaction. Unlike the Checkers-Playing Program, however, rule sets within the Bucket Brigade were modified based on numerical parameters. The rule sets were pre-assigned unique messages that were matched to a list of messages. If a rule set, for instance, “bid” or matched its own message with a message on the list, then it gained “strength.” Gaining strength meant it was awarded the right to post its message onto the working memory of the competitive data structure. Within the competitive data structure, the rule set with the highest number of successful bids was rendered active and allowed to post its message to any new list used in the next round of the game. Ultimately, active rule sets were encouraged to contribute to the list of future protocols by associating themselves with other past, present, and future rule sets. Significantly, less successful rule sets were deemed weak and unable to contribute to future rule sets, which effectively created a competitive advantage for some rule sets over others. The Bucket Brigade was based on a competitive cycle of value achieved through the exchange of information between rule sets. The cycles had eugenic undertones in that only rule sets and codes that were determined to be of value to the overall dominant system were encouraged to survive, while those deemed less beneficial were further alienated from the cycle of production.
Today, traces of strength-based learning are found in search algorithms that prioritize certain webpages based on popularity or current rankings, as well as user preference based on their historical search preferences. Strength can also determine recommendation functions that display outcomes based on prior user interests and the strength of other users’ associated searches and desires.
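The competitive cycle described above can be sketched in a few lines of Python. This is a deliberately reduced toy that follows only the simplified account given here: it omits the passing of payments between rules that Holland's full algorithm involves, and the rule names, messages, and one-point strength increment are all hypothetical.

```python
def run_round(rules, message_list, strengths):
    """One round: rules whose message is on the list gain strength, and the
    strongest rule becomes active and posts its message to the next list."""
    for name, message in rules.items():
        if message in message_list:
            strengths[name] += 1  # a successful "bid"
    active = max(strengths, key=strengths.get)  # ties go to the earliest rule
    return active, [rules[active]]

# Hypothetical rule sets, each pre-assigned a unique message.
rules = {"r1": "A", "r2": "B", "r3": "C"}
strengths = {name: 0 for name in rules}

message_list = ["A", "B"]
for _ in range(2):
    active, message_list = run_round(rules, message_list, strengths)
```

After two rounds the first rule dominates the list, while the third, which never matched, has no way of re-entering it.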

Other data models have also been widely criticized for justifying racism and racial segregation. One of the most controversial examples is a 1960s study conducted by criminologists Sheldon and Eleanor Glueck. In their paper “Predicting Delinquency and Crime,” the Gluecks used data on individuals such as age, IQ, and neighborhood of residence to determine possible correlations between these factors and behavior patterns, like family stability, affection, and discipline in the home. The Gluecks concluded that based on individual attributes, some juveniles were more likely to show criminal delinquency than other youths. Unsurprisingly, the study was met with considerable objection. Critics argued that the study assumed objectivity where none existed. The Gluecks ignored any social, political, or economic conditions that might have contributed to the correlations, yet they insisted that their model was not meant to determine delinquency with any certainty. Instead, they argued that the model provided insights into the mere probability of outcome based on the above factors. They insisted that although prediction tables should be flexible enough to accommodate the dynamic conditions of life, models are not designed to account for every variable influence possible. The Gluecks’ assertion reinforced notions of an epistemic neutrality, while effectively lessening the role of institutional racism as a contributing factor to the reduction of life chances.

Later data models extended beyond the fields of basic play to more complex software mappings, such as cities, landscapes, and even institutions of higher education, via learning agents. A learning agent is a software program that is able to act and adapt to its surroundings based on present and new information. Although agents are typically governed by specific rules or targets, they are semi-autonomous in that they can make decisions based on feedback from other agents, as well as the larger system. They develop new experiences by taking action in the now to improve future action. Agents are not limited to single persons, objects, or entities; they can also include homogenous or heterogeneous collectives, clusters, or even environmental phenomena. They are represented by description and coded to conduct a number of specific actions. For instance, they may be instructed to make autonomous decisions based on logical deduction; use decision-making to resemble practical reasoning in humans; combine deductive and other decisional frameworks; or not use reasoning at all. Agents are adaptable in that decision-making can be made in either controlled environments or environments with various levels of uncertainty. Agent-based models (or ABMs) are one such approach. ABMs simulate and predict the behavioral patterns of the semi-autonomous agents, who may learn, adapt, or reproduce within the parameters of a predetermined rule set. Variable selection can also be determined based on historical agent performance. In other words, ABMs can use error to strengthen and systematically reveal which independent behaviors might correlate with larger patterned behavior.

Thomas Schelling’s 1969 “Models of Segregation” is one of the first functional ABMs, as well as one of the most controversial. The model was designed to help gain insight into the dynamics of racial segregation in urban areas in North America. The rule set was made up of a series of generalized classifications, such as “color” (race), sex, age, income, first language, taste, comparative advantages, and geographical location. Semi-autonomous agents, labelled Black (B) and White (W), were randomly distributed across a spatial grid or neighborhood. Once their initial conditions were established, agents were given decision-making capabilities and the power to determine their preferred location in the neighborhood based on feedback from their nearest neighbors, including their race, sex, income, and other classifications. To universalize experience across the neighborhood, a parameter labelled “Contentment” was established to govern all agent movements. Simply put, if a Black or White agent was content with the demographics of their neighbors, they would decide to remain in their local area. If not, then a threshold of discontent was reached, at which point the agent would self-segregate and move to a local area with a more agreeable demographic. For instance, after several iterations, a line of agents BBBWBWWW in a neighborhood was shown to move away from other neighbors and re-arrange themselves to become BWBWBWBW. However, in an egregious display of racial segregation, it was also shown that when an initial arrangement of Black agents exceeded the number of White agents in a local area, such as BBWBWBBW, then the White agents would surpass their contentment thresholds and flee to areas with an equal or smaller number of Black agents. The same choreography was observed to produce the same result for Black agents when the number of White agents exceeded an equal number.
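How a single “Contentment” parameter governs every agent can be made visible in a minimal Python sketch of a one-dimensional neighborhood. The neighborhood radius, the threshold value, and the rule that an agent is content when at least that share of its neighbors carries the same label are assumptions for illustration; they are not Schelling's exact parameters, and the sketch only identifies discontented agents rather than moving them.

```python
def is_content(line, i, radius=1, threshold=0.5):
    """An agent is content if enough of its nearby neighbors share its label."""
    start, stop = max(0, i - radius), min(len(line), i + radius + 1)
    neighbours = [line[j] for j in range(start, stop) if j != i]
    if not neighbours:
        return True
    alike = sum(1 for label in neighbours if label == line[i])
    return alike / len(neighbours) >= threshold

def discontented(line, radius=1, threshold=0.5):
    """Positions of the agents that would seek to move under this rule."""
    return [i for i in range(len(line))
            if not is_content(line, i, radius, threshold)]

mixed = discontented("BBBWBWWW")      # agents at positions 3 and 4 are flagged
separated = discontented("BBBBWWWW")  # no agent is flagged
```

Under these assumed settings only a fully separated line leaves every agent "content," which shows how much of the model's outcome is already written into the threshold before any agent moves.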

As one of the pioneering examples of data modeling, Schelling’s experiment is a damning report on the ease with which data can stand in as reference for more universal racial ideology, not to mention the means by which racial division is abstracted into the algorithmic under the assumption of objective observation. The model relies on a series of outcomes that mirror existing racial sentiments, yet the conditions of race-based decision-making are reduced to a number of arbitrary classifications, as if they are instinctual and innately occurring. Matthew Fuller and Graham Harwood write:

The specific categories upon and through which segregation operates are described as if natural, not even worthy of equivocation as to their relation to social structure. The racism of the work is both that it operates by means of racial demarcation as an autocatalytic ideological given and secondly that it provides a means of organizing racial division at a higher level of abstraction. To say that Schelling operates within an ideologically racialized frame is not to aver either way as to whether Schelling as a person is consciously racist, but that, in these papers, racial division is an uncontested, “obvious” social phenomena that can be reduced in terms of its operation to a precise set of identifiers and operations.14

Fuller and Harwood draw attention to the reification of racial division in computationally-aided narratives. They furthermore bring to light the processes by which racial division is naturalized under the terms of universal assumption. The data are hollowed out of the violences of race and racial decision, and further dislocated from human accountability. Decision under the general label of “Contentment” produces a net neutral effect on issues surrounding the embedded conditions of Black survival, where the pre-assignment of value, as in racial threshold, is rendered neutral under the pretense of semi-autonomy. Life, as being-in-relation to race, is rendered imperceptible in the service of eugenic determinacy, further naturalizing struggles for self-determinism as rational forms of pleasure and happiness. Data gain their value when alliances are built into the logics of race and epistemic justification. In the case of Schelling’s model, data assist in the invention of race as the very definition of one’s situation in life, whereby happiness becomes the justificatory mechanism for the continued maintenance of institutional control.

As machine learning models gain more intuitive insights into human behavior, we might consider how the dynamics of institutional survival unfold as an act of universal assessment. Within our grids of relation, we might also seek to locate the logics of race within contemporary modeling practice. At stake is the genesis of the racialized being within a system that views self-determinism as an assignment of operational value. Self-genesis in this sense corresponds to the problem of discontent, resolved as an issue of operational efficiency. As the guardians of the grid of social relation, not to mention student and staff data, institutions take ownership over well-being by reserving the right to judgement and decision under the illusion of semi-autonomy. In doing so, they enter into a cycle of speculation that substitutes the variability of human life for, borrowing from Fanon, the “thousand details, anecdotes, [and] stories” that might emerge from data analysis. This weaving together of human life creates what Achille Mbembe describes as a “patchwork of overlapping and incomplete rights to rule,” whereby accountability is shifted to the algorithmic and any attempt at resolution narrativized as a matter of self-isolation.

We were never meant to survive

Data deficits are assumed to define certain problems in higher education. Models provide a means by which data can be analyzed to achieve a quantitative threshold value, which is often translated into a series of targeted actions. These actions take shape through the reduction of variability and the segregation of human behaviors by calculable measure. The power of mathematics takes shape as a method for universal observation, revealing patterns in the data as well as insights that might be out of view. When variability is too high within the system of interest, a series of assumptions are set forth using historical data. By doing so, historical data can be brought into the present to fill any gaps in analysis, and the field of relation can be purportedly stabilized. The past-present data gains its dynamism as the past and present are shifted over time. This allows for the future, in terms of prediction, to change and shift in accord.

Issues arise when computational problems are devoid of actual human participation. Many computational problems rely on mathematical representations of human behaviors to achieve certain levels of human understanding. Human input is not established in the beginning of the process, and is emptied of its variability in pursuit of quantified knowledge. As a consequence, the guardians of the data maintain ownership over the general problem, the methods of analysis, the thresholds against which certain metrics are valued, as well as, and perhaps more consequentially, the associated narratives surrounding the results.

The problem with institutional data modeling is that neither the institution nor the beings within it are meant to survive. As Kara Keeling posits, we change: “We are no longer who we were or who we would have been.”15 This means that the prescription of future institutional value based on present and historical data overdetermines a future that has yet to materialize. Although models provide a mathematical base for future planning, they conflate the variability of gesture with actual human processes of change. Humans are not stable beings that function solely through pattern. Change itself is an act of life that sets and resets the conditions of individual human decision in a recurrent cycle of what Keeling argues is a becoming and unbecoming of the self. This self, however, does not exist in isolation. Our conditions are in parasitic dialogue with our own temporalities, as well as those of others, inclusive of the forces, structures, objects, and extraneous conditions that inform the now. Mathematics, although sophisticated and powerful, does not fully account for the actual variability of life. Mathematics can only represent life through the symbolic reduction of that which can be converted and quantified as a series of discrete or continuous variables.

Life beyond the symbolic, and thereby our satisfaction with any infrastructures or processes within it, is, as Keeling describes, produced in the interregnum, or the time between the conditions of practice and thought. In the interregnum, the past does not merely fill gaps in present memory. The past is not assumed complete. Instead it is a process, particularly for the BAME being, that continues to unfold in the now. The past in this sense is a consistency of relation that signals a trans-generational Black struggle that can in many instances remain intangible, as it does not have to be directly experienced. It is a past that is as individual as it is collectively shared and understood without comprehension. It most often appears and reappears at each audible or inaudible utterance of interpellation, gesture, or racist process. The signal draws forth the historical experience of racial utterance at each event, whether firsthand or embodied through communal narrative. The narratives combine with the specificities of the individual’s present experience and amplify or modulate accordingly. How does one then account for the parasitic variability of Black racialized experience? How does one locate a Black future that is never one’s own? In what ways can a future be excavated from a past and present that has yet to be completed?

A re-articulation of time challenges notions of a stable individual that can be woven out of Fanon’s “thousand details, anecdotes, [and] stories” to create what Achille Mbembe describes as a “patchwork of overlapping and incomplete rights to rule” by way of racial data.16 The interregnum provides a potential pathway forward. Keeling posits that although the past is not complete, the interregnum signals a political potential. By potential, Keeling suggests that there is a moment of interruption that exists in the spaces between practice and thought. The moment of interruption signals a work to be done, or a series of actions that must be taken in order to make room for the next iteration of change. The actions must be attempted without guarantee, as they can no longer rely on any consummate assumption about the past, present, or future. The past, as such, is no longer self-contained, but remains in flux along with additional flows of present uncertainty. To speak of the human is therefore to speak of a being that emerges through the work to extinguish the self and environment under new terms of survival. This human, while it might experience the subjections of racial imposition in the now, is called to resist the passivity of (self)observation, and while aware of the potential for political action in the now, instead dedicate itself to actions informed by the historical signals of race. It is here that Keeling argues for the “generative proposition [that] another world is possible.”17

The converged white wounds my eyes [so that] accumulated truths extinguish themselves

What would it mean to consider the forging and reforging of oneself in socio-technical ecologies that are organized around institutional targets? To imagine the future of the institution, as well as a Black future within it, is an investment in the renewal of computational thinking and practice—where the work to be done with data is put into service for its own extinction. For an alternative way of living to emerge, existing modeling frameworks must give way to the potential for political change. This begins with an integrated awareness of the affirmative potential of each being in the now, and a capacity to create a new techno-social relation constructed in cooperation with people.

At risk is the institution’s sheltering of variability, as well as its proprietary ownership of the rules of relation. For a more equitable future to emerge, the institution must extinguish exclusive right, and give way to the circulation of knowledges that are presently working towards anti-racist practice and the eradication of racial subjugation. In this way, value can emerge from within the framework of open and active cooperation, as opposed to abstractions of objective truth. If we extend this thought to consider what student satisfaction might mean in the interregnum, then a new model might alter the threshold of value. These terms might be rooted in actions that render the institution incapable of relying on its present or historical sense of self. These actions furthermore alter existing institutional languages of wellbeing, along with articulations of care through empirical assumption. Space and time become arbitrary, in this sense. Governance becomes ineffective at producing narratives at a distance from that which it produces.

Without these commitments, symbolic gestures through the languages of cost savings and student satisfaction call forth the necessity for alternative measures to meet present challenges. Individuals in the institution are then burdened with disingenuous signals that require political action towards the delivery of more inclusive means of participation. During the GARA occupation, this was achieved by rendering the university incapable of doubling the languages of equitability. Members made use of their status as non-being in the eyes of the institution to rebuild the structures of wellbeing. By taking over the university administration building, they—even unwittingly—stripped the institution of its guardianship over their care. Informed by what they argued was a long history of racist practice on campus, they sought to extinguish present university structures by constructing alternative spaces of learning and cooperation. They exercised self-governance with a flat hierarchy, self-learning, communal food sharing, book exchanges, self-organized educational programs and cultural events, as well as anti-racist workshops. What emerged was an alternative mode of being in the process of becoming a new and more self-reliant student-body. GARA’s work towards self-determination disrupted the myth of student satisfaction, as defined by the institution in its present form. The future of the institution was called into question through a demonstration of that which it could not comprehend: the variable dynamics of Black life, and the necessary actions that must be taken for actual change to materialize.

If we take these actions as a lead towards the shaping of a collective relation, then the future comes into view through the act of participation in the now. Yet we must let go of analysis that disrupts the embrace of human co-composition. A growing awareness of our indebtedness to a more acute sense of the future-as-composite gives rise to a socio-technical responsibility. This responsibility foregrounds the affective extinguishment of a duress that, in many instances, is perpetuated through institutional detachment from the lived experiences of BAME people. The consequences of such acts speak to the overriding need to develop alternative socio-technical realities, ones that can flourish through, outside, and between “the poetry, the refrains, the rhythms, and the noise such a world is making.”18

Frantz Fanon, Black Skin, White Masks, trans. Richard Philcox (New York: Grove Press, 2008), 226–227.

Maurice Merleau-Ponty, Phenomenology of Perception, trans. Colin Smith (London and New York: Routledge, 2008), 425.

Sofia Akel, “Insider-Outsider: The Role of Race in Shaping the Experiences of Black and Minority Ethnic Students,” Goldsmiths, October 2019, ➝.

“Black academic staff face double whammy in promotion and pay stakes,” University and College Union, October 14, 2019, ➝.

“A public statement from Goldsmiths Anti-Racist Action on the end of the occupation,” Goldsmiths Students’ Union, July 29, 2019, ➝.

Jiawei Han and Micheline Kamber, Data Mining: Concepts and Techniques (Burlington, MA: Morgan Kaufmann Publishers, 2000).

“How you can support the NSS Boycott,” Goldsmiths Students’ Union, ➝.

“Why take the survey,” National Student Survey, ➝.

Judy Friedberg, “University Guide 2020: How to use the Guardian University Guide 2020,” The Guardian, June 7, 2019, ➝.

UK Prevent is a state-run security strategy that seeks to identify “radical” individuals by engaging with practices that are enacted in the name of managing risk and uncertainty. See: Charlotte Heath-Kelly, “Counter-Terrorism and the Counterfactual: Producing the ‘Radicalisation’ Discourse and the UK PREVENT Strategy,” British Journal of Politics and International Relations 15, no. 3 (August 2013): 394–415.

Han and Kamber, Data Mining.

David Mumford and Agnès Desolneux, Pattern Theory: The Stochastic Analysis of Real-World Signals (Natick, MA: A K Peters, 2010).

Richard S. Sutton, “Learning to Predict by the Methods of Temporal Differences,” Machine Learning 3, no. 1 (August 1, 1988): 9–44.

Matthew Fuller and Graham Harwood, “Abstract Urbanism,” in How To Be a Geek: Essays on the Culture of Software, ed. Matthew Fuller (Hoboken: Wiley, 2017), 39.

Kara Keeling, Queer Times, Black Futures (New York: New York University Press, 2019), ix.

Fanon, Black Skin, White Masks, 84; Achille Mbembe, Necropolitics (Durham: Duke University Press, 2019), 31.

Keeling, Queer Times, Black Futures, ix.

Ibid.

Architectures of Education is a collaboration between Nottingham Contemporary, Kingston University, and e-flux Architecture, and a cross-publication with The Contemporary Journal.

This contribution derives from a presentation given by Ramon Amaro at Nottingham Contemporary on November 8, 2019. A video recording of the presentation is available here.