The curb is a simple demarcation between two spaces: the roadway and the sidewalk. While the origin of curbs had little to do with transport, they became ubiquitous during the industrial revolution as a means to manage the movement of vehicles, people, and water. The curb today is a highly contested piece of urban real estate in many cities across the globe. It has accommodated and served as a space for pedestrian access to and from the sidewalk, emergency vehicle access, access to public transport, wayfinding for visually impaired people, goods delivery and pick-up, cycling infrastructure, passenger pick-up and drop-off, repair and maintenance, waste management and surface water runoff, commercial activities (kiosks, restaurants, food trucks, cafés, ambulant vendors), and leisure. These and other concurrent demands around the curb space involve a wide range of stakeholders and authorities whose activities are often disjointed and infrequently aligned with broader strategic objectives.

Seemingly mundane, the importance of the curb derives from its role in fixing vital space required for the negotiation of cohabitation between humans and non-human entities. This light piece of infrastructure has also become a crucial reference for driverless vehicles, as it allows them to safely navigate our cities. Driverless cars have the potential to either reduce or increase traffic, make affordable transport more or less accessible, and lead to denser cities or even more urban sprawl. A century ago, cars appeared as a solution for cities immersed in heavy traffic from horse-powered vehicles and animal detritus. The broader social consequences of cars, both good and bad, were entirely unforeseen. Most architects and city planners did not respond actively to their implementation and, as a result, cities around the world bulldozed their city centers and the global explosion of suburban sprawl occurred.1 If we treat driverless cars as a mere technological solution to the problems associated with cars, once again, their wider impacts will be overlooked.

While developing autonomous vehicles, major players such as Waymo (Google), Volkswagen, and Uber have been invested in envisioning the future of cities. The proposed scenarios tend to emphasize consensual solutions in which idealized images of the streets seamlessly integrate driverless technology, with human behavior and city infrastructure unchanged.2 Greater degrees of autonomy have been seeping into commercial vehicles since the beginning of the twenty-first century, from adaptive cruise control to automatic emergency braking, automatic parking, and automatic lane-keeping. But the wide-scale deployment of autonomous vehicles in dense urban environments will unleash conflicts about public safety, personal privacy, and security. It will trigger meaningful transformations of the city, and perhaps in the near future. Yet the fast, disruptive deployment of driverless technology does not preclude a specific urban solution. It does, however, require imagining how the cohabitation of humans and driverless vehicles might be articulated in the urban environment. The differences in the ways that these two urban agents sense the city will define both the terms of the discussion and the design of these spaces.

Driverless Vision

The way driverless vehicles map their environments is one of the main reasons why the city has resisted the wave of autonomous cars. Urban environments multiply the chances for unforeseen events and dramatically increase the amount of sensorial information required to make driving decisions. The quality and amount of data received by an autonomous vehicle are directly proportional to the price of the technology used to capture it, as well as to the car’s subsequent computational capability to resolve, process, and decide upon eventful situations. Balancing sensor types and precision versus reaction speed and price has up until today defined different approaches to the development of driverless technologies, yet this assumes that there are no inherent tensions between self-driving cars and humans that require modifications to the urban environment.3

The arrival of autonomous vehicles requires a new type of gaze—one that can renegotiate existing codes. Currently, human perception and its means of gathering information define the visual and sonic stimuli that regulate urban traffic. Driverless sensors struggle with this information. The repetition of signage, for example, which is used to capture the driver’s attention, often produces a confusing cacophony for autonomous vehicles. Dirty road graphics, signage misallocations, consecutive but contradictory traffic signs, or even the lack of sign and marking standardization are all reasons for some of the most notorious incidents involving autonomous vehicles.4 The assumption that driverless cars will fully adapt to these conditions is erroneous. It overlooks the history of streetscape transformations driven by changes in vehicular technologies.5 More importantly, it ignores the fact that self-driving cars construct and operate according to images that are incomparable to human perception.

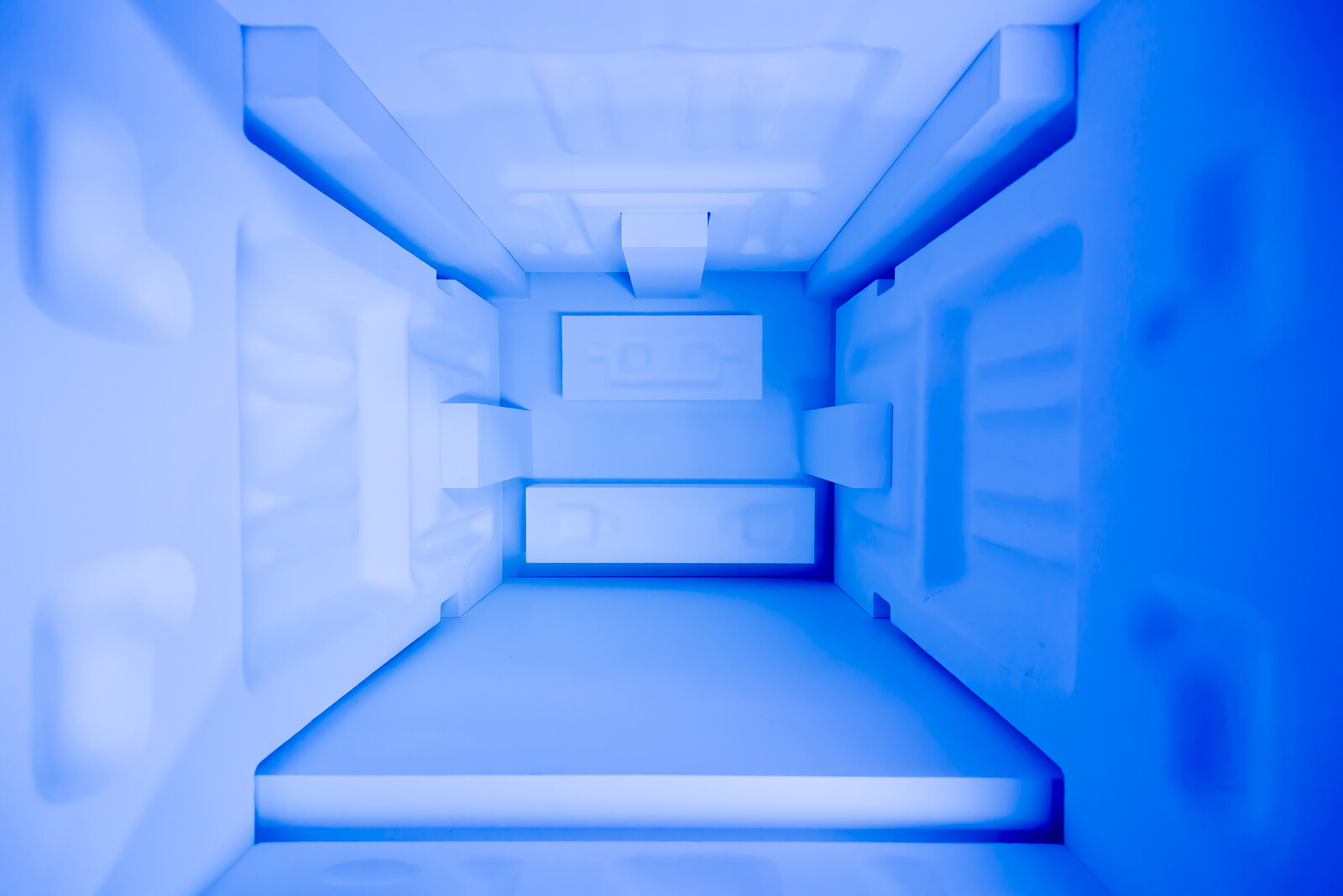

Driverless cars capture real-time data through different on-board sensors. Although there is not an industry standard yet, certain trends are ubiquitous. They use a combination of radar, cameras, ultrasonic sensors, and LiDAR (Light Detection and Ranging) scanners. Driverless cars do not capture environmental sound. Color rarely plays a role in the way they map the city, and with various degrees of resolution, their sensors cover 360° around the vehicle.

The way these sensors function defines the potentials and limitations of self-driving cars. Some sensors detect the relative speed of objects in close range, while others capture the reflectivity of static objects from far away. Some are able to construct detailed 3D models of objects no farther than a meter away; others are indispensable for pattern recognition. Human drivers combine eyesight and hearing to make decisions; driverless car algorithms use information from multiple sensors in their decision-making processes. While each sensor in a driverless car captures its surroundings, it also produces a medium-specific map.6

Radar is an object-detection system that uses radio waves to determine the range, angle, or velocity of objects. It has good range but low resolution, especially when compared to ultrasonic sensors and LiDAR scanners. It is good at near-proximity detection but less effective than sonar. It works equally well in light and dark conditions and performs through fog, rain, and snow. It is very effective at determining the relative speed of traffic, which is critical for monitoring other vehicles and surrounding objects, but it does not differentiate color or contrast, rendering it useless for optical pattern recognition. Radar detects movement, is able to construct relational maps, and captures cross-sections of the electromagnetic spectrum.
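Radar's strength in measuring relative speed rests on the Doppler effect: a wave reflected by an approaching object returns at a slightly higher frequency. As a rough sketch of that relationship, and not a description of any particular manufacturer's system, the Python below converts between closing speed and frequency shift. The 77 GHz carrier is an assumption (a band commonly used by automotive radar), the 10 m/s closing speed is invented for the example, and the function names are hypothetical.

```python
# Illustrative sketch: relative speed from a radar Doppler shift.
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact by definition of the metre


def doppler_shift_hz(closing_speed_m_s: float, carrier_hz: float) -> float:
    """Two-way Doppler shift produced by a target closing at the given speed."""
    if carrier_hz <= 0:
        raise ValueError("carrier_hz must be positive")
    return 2.0 * closing_speed_m_s * carrier_hz / SPEED_OF_LIGHT_M_S


def closing_speed_m_s(shift_hz: float, carrier_hz: float) -> float:
    """Invert the relation above: recover closing speed from a measured shift."""
    if carrier_hz <= 0:
        raise ValueError("carrier_hz must be positive")
    return shift_hz * SPEED_OF_LIGHT_M_S / (2.0 * carrier_hz)


CARRIER_HZ = 77e9  # assumed carrier frequency, not taken from the text

# A vehicle closing at 10 m/s (36 km/h) shifts the echo by roughly 5 kHz.
shift = doppler_shift_hz(10.0, CARRIER_HZ)
recovered_speed = closing_speed_m_s(shift, CARRIER_HZ)
```

The shift is tiny relative to the carrier, yet easy to measure electronically, which is one reason radar reads speed well even though its spatial resolution is low.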

Ultrasonic sensors are object-detection systems that emit ultrasonic sound waves and measure the time they take to return in order to determine distance. They offer a very poor range, but they are extraordinarily effective in close-range 3D mapping. Compared to radio waves, ultrasonic sound waves are slow. Thus, differences of less than a centimeter are detectable. These sensors work regardless of light levels and also perform well in snow, fog, and rain. They do not detect color contrast or allow for optical character recognition, but they are extremely useful in determining speed. They are essential for automatic parking and the avoidance of low-speed collisions. They construct detailed 3D maps of the temporary arrangements of objects in proximity to the car.
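The link between slow waves and fine resolution can be made concrete with a little arithmetic. The Python sketch below is an illustration rather than any manufacturer's method: it converts an echo's round-trip time into distance and compares how far a wave travels during one tick of a timer. The speed of sound (343 m/s, dry air at about 20 °C) and the 10-microsecond timer resolution are assumptions chosen for the example, and the function names are hypothetical.

```python
# Illustrative sketch: echo ranging and why a slow wave resolves small distances.
SPEED_OF_SOUND_M_S = 343.0          # assumed: dry air at about 20 degrees C
SPEED_OF_LIGHT_M_S = 299_792_458.0  # radio waves travel at the speed of light


def echo_distance_m(round_trip_s: float, wave_speed_m_s: float) -> float:
    """Distance to an object, given how long the echo took to come back.

    The wave travels out and back, so the one-way distance is half the path.
    """
    if round_trip_s < 0:
        raise ValueError("round_trip_s must be non-negative")
    return wave_speed_m_s * round_trip_s / 2.0


def range_resolution_m(timer_resolution_s: float, wave_speed_m_s: float) -> float:
    """Smallest distance step distinguishable with a timer of this resolution."""
    return wave_speed_m_s * timer_resolution_s / 2.0


TIMER_RESOLUTION_S = 10e-6  # hypothetical timer tick of 10 microseconds

# An echo returning after 5 milliseconds places the obstacle under a metre away.
distance = echo_distance_m(0.005, SPEED_OF_SOUND_M_S)

# The same timer tick corresponds to very different distances for each wave.
sound_resolution = range_resolution_m(TIMER_RESOLUTION_S, SPEED_OF_SOUND_M_S)
radio_resolution = range_resolution_m(TIMER_RESOLUTION_S, SPEED_OF_LIGHT_M_S)
```

With these assumed values, one timer tick corresponds to under two millimeters for sound but roughly a kilometer and a half for a radio wave. Real radar reaches usable resolution through signal processing rather than a simple timer, so the comparison is deliberately simplified.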

LiDAR is a surveying technology that measures distance by illuminating a target with laser light. It generates extremely accurate representations of the car’s surroundings but fails to perform over short distances. It cannot detect color or contrast, it cannot recognize optical characters, and it is not effective for real-time speed monitoring. Because LiDAR relies on light spectrum wavelengths, light conditions do not decrease its functionality, but snow, fog, rain, and dust particles in the air do. In addition to accurate point-cloud models of the city, it can produce air quality maps.7

RGB and infrared cameras are devices that record visual images. They have very high resolution and operate better over long distances than in close proximity. They can determine speed, but not at radar’s level of accuracy. They can discern color and contrast but underperform in very bright conditions as well as when light levels fade. Cameras are key for character recognition software and serve as de facto surveillance systems.

This proliferation of real-time mapping is a defining element of the future urban milieu. These maps are a combination of sections of the electromagnetic spectrum, detailed 3D models, air pollution maps, and interconnected surveillance systems. The base for these maps, however, is not produced by the car’s sensors. Although contemporary autonomous vehicles can theoretically drive by relying solely on this instant mapping, in reality they navigate the environment by combining real-time data with HD maps stored on their hard drives.

HD maps help the car place itself with a greater degree of accuracy, thus augmenting the sensors in the vehicle. These maps become extremely helpful in challenging situations like rain or snow. More importantly, as they tell the cars what to expect on the road, they allow sensors to focus on processing moving agents like other cars or pedestrians. The inputs to construct these HD maps are threefold. First, there is a base map with information about roads, buildings, and so on. Imagery containing street details, such as Google Street View, is the second main component. Large quantities of real-time GPS location data from people with smartphones in their pockets constitute the third important input. The maps are continuously being updated with information on lane markings, street signs, traffic signals, potholes, and even the height of a curb, with a resolution down to the centimeter.8
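How a stored map can sharpen a vehicle's sense of its own position can be sketched in a few lines. The example below is a deliberately simplified illustration, not how any production system works: it assumes the car's heading is already known, that its sensors report where two mapped landmarks (say, curb corners) sit relative to the car, and that the HD map stores the surveyed positions of those same landmarks. All coordinates and names are hypothetical.

```python
# Illustrative sketch: refining a rough position fix with HD-map landmarks.
from statistics import fmean

Point = tuple[float, float]  # (x, y) in metres, in the map's coordinate frame


def localize_from_landmarks(observed_offsets: list[Point],
                            map_landmarks: list[Point]) -> Point:
    """Estimate the car's map position from landmarks it can currently sense.

    observed_offsets[i] is where landmark i appears relative to the car;
    map_landmarks[i] is the same landmark's surveyed position in the HD map.
    Each pair implies a car position (landmark minus offset); averaging the
    implied positions smooths out sensor noise.
    """
    if not map_landmarks or len(observed_offsets) != len(map_landmarks):
        raise ValueError("need one observation per mapped landmark")
    xs = [m[0] - o[0] for m, o in zip(map_landmarks, observed_offsets)]
    ys = [m[1] - o[1] for m, o in zip(map_landmarks, observed_offsets)]
    return (fmean(xs), fmean(ys))


# Hypothetical data: two curb corners stored in the map, sensed with slight noise.
map_landmarks = [(10.0, 2.0), (20.0, -2.0)]
observed_offsets = [(5.02, 2.01), (14.98, -2.01)]
gps_estimate = (6.5, 1.2)  # a rough satellite fix, off by more than a metre

refined = localize_from_landmarks(observed_offsets, map_landmarks)
correction = (refined[0] - gps_estimate[0], refined[1] - gps_estimate[1])
```

In this toy case the two landmark observations agree on a position near (5, 0), about a meter and a half from the rough fix. The sketch ignores rotation and moving objects, both of which a real system would have to handle.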

Towards a Sensorial Social Contract

Driverless vehicles aren’t just about cars. Rather, they are more akin to the interaction between a government and a governed citizenry. Modern government is the outcome of an implicit agreement, or social contract, between the ruled and their rulers, which aims to fulfil the general will of citizens. If the Enlightenment marked humanity’s transition towards the modern social contract, then determining a sensorial agreement could serve as the first step towards cohabitation between humans and non-human entities. Interconnected sensors and HD maps can create a new common, ubiquitous global sensorium that further dissolves the distinction between nature and artifice. A sensorial social contract embeds the judgment of society, as a whole, in the sensorial governance of societal outcomes.9

Following on from the concept of the social contract, where individuals consent, either explicitly or tacitly, to surrender some of their freedoms for the general good, this new agreement would require individuals to partially hand over safety, control, and privacy. Yet most details of what a potential social exchange might look like are not even knowable today. While there is ongoing legal debate regarding accountability in the case of accidents, the systems controlling these cars are “black boxes.” That is, once the system is trained, data will be fed into it and a useful interpretation will be produced, but the actual decision-making process is not easily accessible, if at all, by humans. Furthermore, the huge amount of processed data includes personal information from drivers, passengers, and pedestrians. Currently, industry agreements on privacy best practices entail increased protection for personally identifiable information, such as geolocation, driver behavior, and biometric data, yet the accumulation of exactly this type of information is valuable for certain entities, such as insurance companies, vehicle manufacturers, advertisers, and law enforcement agencies, whose best interests are not always those of the driver or the pedestrian. And lastly, security is a vital concern, as the vehicles themselves are vulnerable to being hacked, yet the rivalry between companies means that they are reluctant to share knowledge about cyberattacks and vulnerabilities, or work together to develop more secure designs.10

Every time a major disruptive mobility technology has appeared, a new global agreement has been set. We can see this in the initial International Convention on Motor Traffic in Paris (1909), the three United Nations Conventions on Traffic (Paris 1926, Geneva 1949, and Vienna 1968), the Chicago Convention on International Civil Aviation (1944), and the London Convention on Facilitation of International Maritime Traffic (1965). Vienna’s 1968 Convention on Road Traffic and Convention on Road Signs and Signals set clear precedents on global mobility agreements.11 The Vienna Convention on Road Traffic focused on traffic rules, and different countries agreed upon uniform traffic jurisdiction. The Vienna Convention on Road Signs and Signals focused on the standardization of road signs and signals. All road signs were structured in seven categories, where each class was attributed specific and uniform shapes, sizes, and colors. Road marking specifications were also standardized, which set the geometry, color, and possible word content. The convention also determined traffic lights’ colors and meanings, and set their location and purpose. All of these agreements were based on human perception.

With the arrival of driverless vehicles, agreements need to be renegotiated to accommodate both human and non-human sensing. Different items such as road signs and traffic lights may eventually disappear as driverless cars gain the ability to navigate roads safely. Road markings, however, will be particularly important as they provide critical information that allows the car to navigate. The visual crispness of lane markers varies dramatically from place to place and presents itself as a prime case study for the required standardization of streetscapes. It is also indicative of the type of light infrastructural changes required in the immediate future for driverless cars.12

On 14 April 2016 at the Informal Transport and Environment Council in Amsterdam, 28 EU ministers of transport endorsed the Declaration of Amsterdam, whereby signatories agreed on objectives that would allow for a more coordinated approach towards the introduction of automated driving.13 But the introduction of driverless cars is not only about transport and its technical requirements, but also about societal implications. This requires the participation not only of industry experts and politicians, but also citizens and experts in other, adjacent fields such as robotics, cybersecurity, insurance, law, ethics, infrastructure, technology, architecture, and urban design. As the development of the technology is still at an early stage, the prospective actors of such a hybrid forum cannot be fixed; future developments in the field will determine the need to incorporate relevant participants, including non-human agents. What is necessary is to guarantee that the right kinds of conversations are taking place. At which point is a self-driving car safe enough to navigate our roads? While industry and politicians have consistently argued that driverless cars will avoid ninety percent of road deaths, this statistic is yet to be proven. Do they need to be three times, or ten times safer than human-driven vehicles? What should it take to obtain a driving license to use an autonomous vehicle? And what about a driving license for an autonomous vehicle itself? As their base data and software are constantly updated, should they be required to submit periodical reports? Should there be a standard of what kind of information is to be reported, if at all?
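The ninety percent figure and the question of "how many times safer" are two ways of stating the same quantity, and a short calculation shows how they relate. The sketch below assumes that "n times safer" means the fatality rate is divided by n, which is one possible reading and not a definition given by industry or regulators; the function names are hypothetical.

```python
# Illustrative sketch: converting between "n times safer" and "share of deaths avoided".


def share_of_deaths_avoided(times_safer: float) -> float:
    """If the fatality rate is divided by times_safer, this share of deaths is avoided."""
    if times_safer <= 0:
        raise ValueError("times_safer must be positive")
    return 1.0 - 1.0 / times_safer


def times_safer_needed(share_avoided: float) -> float:
    """Safety multiple required to avoid the given share of deaths."""
    if not 0.0 <= share_avoided < 1.0:
        raise ValueError("share_avoided must be in [0, 1)")
    return 1.0 / (1.0 - share_avoided)


three_times = share_of_deaths_avoided(3.0)   # about two thirds of deaths avoided
ten_times = share_of_deaths_avoided(10.0)    # nine tenths of deaths avoided
claimed = times_safer_needed(0.90)           # multiple implied by the 90% claim
```

Under this reading, the ninety percent claim is equivalent to saying that driverless cars would be about ten times safer than human drivers, while a threshold of three times safer would correspond to avoiding roughly two thirds of road deaths.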

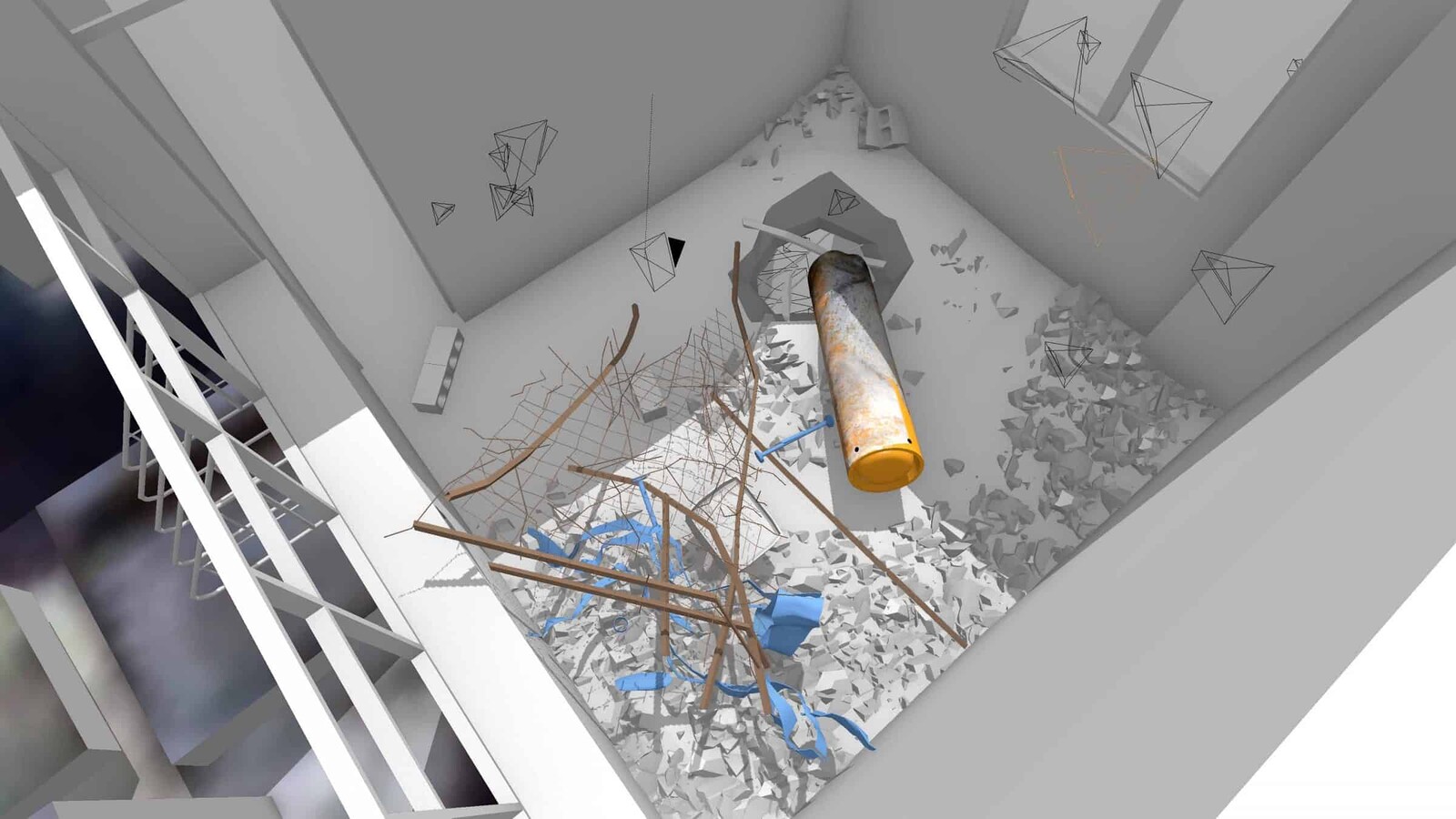

A hybrid forum could also identify other objects of discussion, such as the redesign of streetscapes linked to the implementation of driverless cars. Most of the future scenarios projected for autonomous vehicles accentuate romanticized images of the city, where the integration of driverless vehicles is flawless. In these imaginings, urban infrastructure stays the same while self-driving cars share the streets with humans; there is no apparent tension between the two and no alterations to the urban environment are required.

Historical narratives love smooth transitions, such as that from horses to cars. In reality, there was a period of several decades when the presence of horses started to decline while automobiles began to populate the streets. Accordingly, the street underwent minor but crucial alterations during this period. Street widths remained intact, and the main alterations occurred on the street surface finishes. With cars kicking up powdered horse waste, dust was becoming a serious matter of public concern. In response, the first Road Commission of Wayne County, Michigan, convened in 1906 to make roads safer. (Henry Ford served on the commission’s board in its first year.) The commission would order the construction of the first concrete road in 1909, and conceived of the centerline for highways in 1911. By the 1920s, both were commonly used.

Continuing this historical trend, a hybrid forum could explore modest redesigns and alterations of certain infrastructure to accommodate the driverless car’s singular way of experiencing the built environment. In the current global financial context, intelligent road infrastructure plans seem a remote option for most cities, with local governments having taken the majority of infrastructural responsibility from national governments. Replacing pavements, painting lanes, keeping water systems running efficiently, and filling road holes are common tasks carried out by municipalities on a daily basis, yet ones they can struggle to adequately maintain. Building smart cars, not smart infrastructure, makes it so that only minor infrastructural changes are required in order to accommodate the implementation of driverless vehicles in the immediate future.14 What is necessary are material, tangible links that turn the street into both a laboratory and a political arena so that we can have more informed discussions about the future we want to have. Driverless technologies must be discussed at 1:1.

1. There are some exceptions, such as the Futurists or Le Corbusier, who took cars as the driving force in the development of future cities.

2. Future scenarios tend to focus on how driverless cars, when combined with the sharing economy, could drastically reduce the total number of cars in urban environments. See Brandon Schoettle and Michael Sivak, “Potential impact of self-driving vehicles on household vehicle demand and usage,” University of Michigan Transportation Research Institute (February 2015), ➝; Sebastian Thrun, “If autonomous vehicles run the world,” The Economist, accessed September 2018, http://worldif.economist.com/article/12123/horseless-driverless; Matthew Claudel and Carlo Ratti, “Full speed ahead: how the driverless car could transform cities,” McKinsey & Company (August 2015), ➝; Luis Martinez, “Urban mobility system upgrade. How shared self-driving cars could change city traffic,” International Transport Forum (2015), ➝; Daniel James Fagnant, “Future of fully automated vehicles: opportunities for vehicle- and ride-sharing, with cost and emission savings,” University of Texas at Austin (PhD dissertation, August 2014), ➝.

3. Tesla’s current sensing system comprises eight cameras that provide 360° visibility with a range of 250 meters, as well as twelve ultrasonic sensors and a forward-facing radar. Waymo’s most advanced vehicle, a Chrysler Pacifica Hybrid minivan customized with different self-driving sensors, relies primarily on LiDAR technology, with three LiDAR sensors, eight vision modules comprising multiple sensors, and a complex radar system.

4. Alexandria Sage, “Where’s the lane? Self-driving cars confused by shabby USA roadways,” Reuters (March 31, 2016), ➝; Andrew Ng and Yuanqing Lin, “Self-driving cars won’t work until we change our roads—and attitudes,” Wired (March 15, 2016), ➝; Signe Brewster, “Researchers teach self-driving cars to ‘see’ better at night,” Science (March 20, 2017), ➝.

5. The relationship between the transformations of the streetscape and the arrival of new vehicular technologies also places driverless cars at the center of the history of architecture. Since its inception, the car has often played a central role in architects’ urban visions. The precepts of the Athens Charter and the images of Le Corbusier’s Ville Radieuse (1930) were explicit responses to the safety and functional issues associated with the popularization of the car. The implementation of the Athens Charter was met with varying degrees of success. During the post-war reconstruction of Europe and the global explosion of suburban sprawl, it fuelled architectural controversies that questioned the role of cars in the definition of urban environments.

6. Michael Barnard, “Tesla & Google disagree about LiDAR — which is right?” Clean Technica (July 29, 2016), ➝.

7. Warren B. Johnson, “Lidar applications in air pollution research and control,” Journal of the Air Pollution Control Association, vol. 19, no. 3 (1969): pp. 176–180.

8. The need for HD maps, indispensable to the future of driverless vehicles, has produced a new industry. “The battle for territory in digital cartography,” The Economist (June 8, 2017), ➝.

9. The term “Sensorial Social Contract” derives from “algorithmic social contract,” coined by MIT professor Iyad Rahwan, who developed the idea that by understanding the priorities and values of the public, we can train machines to behave in ways that a society would consider ethical. Iyad Rahwan, “Society-in-the-loop: Programming the algorithmic social contract,” Medium (August 12, 2016), ➝.

10. Simson Garfinkel, “Hackers are the real obstacle for self-driving vehicles,” MIT Technology Review (August 22, 2017), ➝.

11. See ➝.

12. All assumptions are based on the current vehicle-sensing technologies previously analyzed.

13. The first high-level meeting, organized by the Netherlands, was held in Amsterdam on February 15, 2017 and focused mainly on the technical aspects of automated vehicles. It was attended by no fewer than twenty-four EU member states, the European Commission, and six industry partners. The next high-level meeting was held in Frankfurt on September 14–15, 2017, one of the main outcomes of which was an EU-wide campaign to promote the development of necessary knowledge and realistic expectations among the population, thereby creating an atmosphere of trust. See: “On our way towards connected and automated driving in Europe,” Dutch Ministry of Infrastructure (February 15, 2017), ➝; “Action plan automated and connected driving,” German Federal Ministry of Transport, Building and Urban Development, ➝.

14. Hod Lipson and Melba Kurman, Driverless: Intelligent cars and the road ahead (Cambridge: MIT Press, 2016).

Becoming Digital is a collaboration between e-flux Architecture and Ellie Abrons, McLain Clutter, and Adam Fure of the Taubman College of Architecture and Urban Planning.
