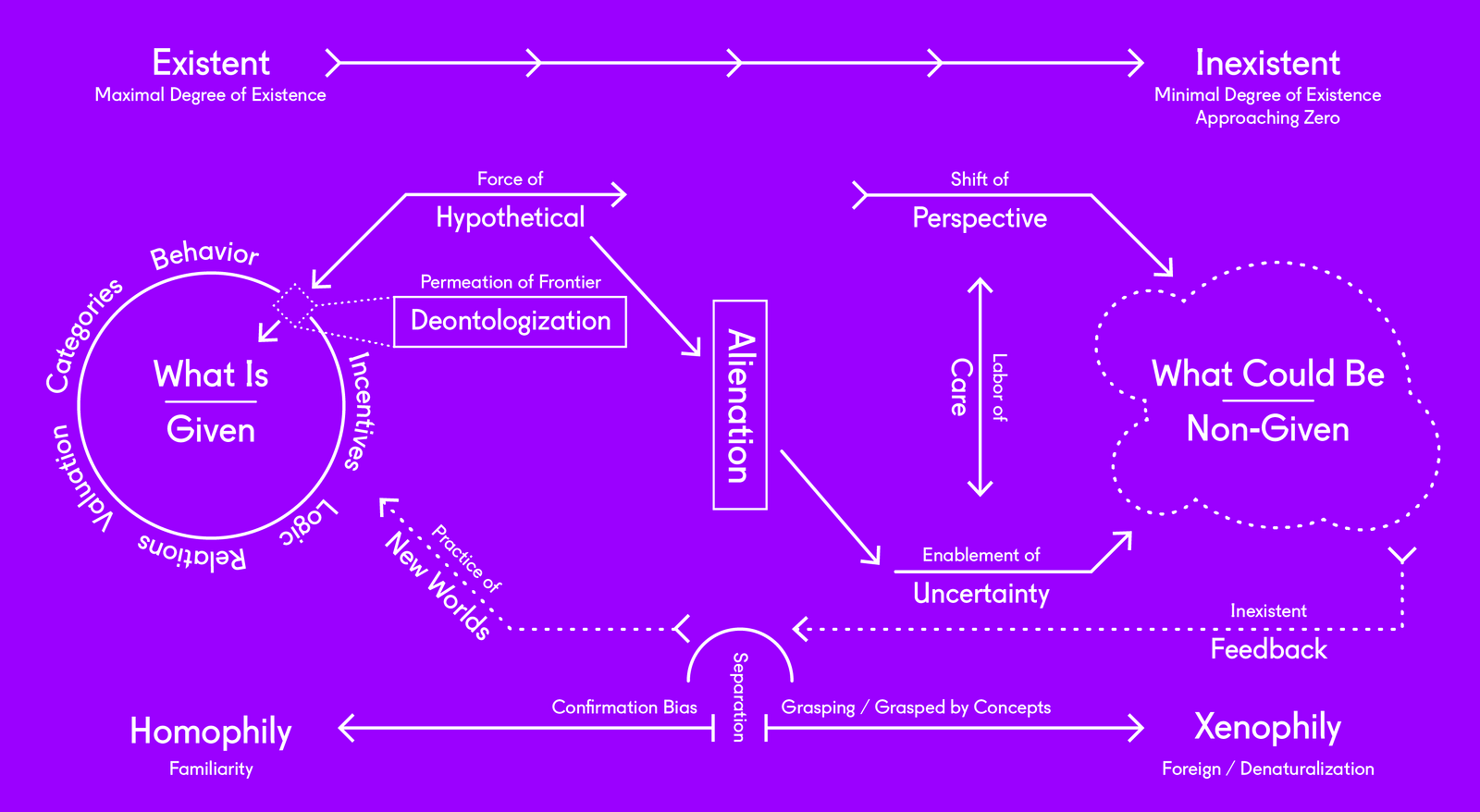

Intensive incompatibility marks our moment. The multiple crises we face, socially, economically, and ecologically (which are impossible to disentangle), are incommensurate with our existing means to justly mitigate them. These crises did not suddenly appear out of nowhere, but are the result of human making; a deeply uneven making, whose acute consequences disproportionately follow well-trodden trajectories of historical domination. Unbridled technological development is partially complicit in amplifying these crises, but this is largely so because it is embedded in particular socio-political diagrams that set far more determinate constraints on what, for example, algorithms do, than what algorithms, as such, could do. The crux here lies in the “could,” which is a question of enablement: in what conditions can, say, the algorithmic serve us, in what conditions will it devour us for spare parts, and in what conditions does it preemptively criminalize the innocent? It’s from this question of enablement, and the conceptual models of planetary-scaled cohabitation diagramming said enablement, that we need to depart. The concepts we construct to model a world delineate a landscape of possibility (what is, or the given), while often violently foreclosing others, making the struggle over them an essential battle to be collectively waged. These models (whether explicitly embraced and known, or mutely practiced) set up chains of incentives, reasoning, relations, valuation, categories and behavior—infusing all manner of material and/or computational actualization and directing the ways in which reality is intervened upon. Today we seem caught in routines of “changeless change,” with “innovation” largely monopolized by shareholders or confined to solutionist (false) promises, because our conceptual models have been calcified by what is.1 There are powerful interests invested in sustaining what is; these “conceptual battles” are also, quite obviously, long-term political ones. 
Nonetheless, since substantial, enduring transformation is enabled at this conceptual level plotting who we are, where we stand, who/what composes a “we,” and how we understand coexistence, humble first steps need to be taken. This movement marks an affirmative labor in deontologizing that which is given, leveraging a chasm between what is and what could be. To transform these conceptions of givenness, a necessary, albeit always provisional myth, is to intervene in the world on a diagrammatic plane, both because of the potent nexus between ideality, materiality and reality, and because of our ability to grasp and be grasped by concepts.2 Concepts remodel us as much as we model them.3

The most perniciously incompatible diagram at work is the one coercing us into naturalized molds of relentless competition and self-interest, engendering ramifications at a scale that threaten not only well-being, but our collective survival. (No one promised these diagrams were rational.) Derivations of said meta-diagram in the form of the categorical and structural oppressions required for its particular perversion of “sustainability” are often corrosively reproduced through (but not because of) computational automation, highlighting the intertwining of ideality and materialization (an important, constitutive parity).4 When, for example, prevailing normative models uphold “whiteness” as the index for the category we call “human,” our corresponding technologies will be calibrated to that unjust, inadequate bias.5 Or when gendered clichés are reinforced through our digital personal assistant, who takes on servile characteristics as an “idyllic,” complacent woman, politely absorbing any abuse thrown at “her” whilst duly enacting “her” task in sorting out your calendar.6 Despite the impressive feats of today’s most advanced machinic intelligence(s), without correlating transformations at a level of conceptual perspectives, existing categorical prejudices will persist as an articulation of human ignorance, and claims on technology’s power will remain in the hands of a few. This procedure is bidirectional; the mutual contamination between concepts and actualization is a dynamic feedback loop, where the uncertain effects of actualization (which can never be known absolutely), work upon our conceptual perspectives that have enabled them and open a space of mediation provided, however, that we hold the fallibility of our perspectives to be steadfastly true. 
Without the attentive embrace of fallibility, perspectives ossify into entrenched naturalizations of what is, confusing the accounting of a world with the world as such, like gazing at an object from a single, particular position and insisting on consolidating that view with the object’s full dimensionality.

The labor of perspectival reformatting is an ongoing labor of care, as well as a careful labor, driven by a generative force of alienation. Being grasped by concepts is an alienation from familiar logical/categorical perspectives. Despite the term having been locked down in a negative register, signaling social anomie or dehumanization and positioned as something to be overcome, on a perspectival front, alienation is a necessary force of estrangement from what is. Alienation can never be “total”: it expresses the quality of a relation, and not a thing unto itself; something is alienated from something else, and to properly understand it requires reflection in at least two directions.7 When “alienation” is often taken as a ubiquitous descriptor of life in our technosphere, to avoid the meaninglessness of its (seeming) semantic self-evidence we need to ask: What would a non-alienated condition look like? The consequence of a world without alienation binds us to familiar cognitive schemata since it refuses engagement with the strange, the foreign, and the unknown, fixing “common-sense” to the given. Alienation and abstraction form a kinship in this regard, since both pertain to modes of separation and impersonalization. While certain vectors of these forces undeniably structure our contemporary status-quo for unjust ends, the cognitive potency inherent to them should not be abandoned, for what these capacities can do and are today is more necessary than ever.

Immediate, proximate, concrete reality is often positioned as desirable because it is unalienated, yet this preference indicates two crucial problems that cannot be ignored, especially in view of our complex reality and the means we need to politicize it otherwise. On the one hand, the formulation which pits the concrete against the abstract neglects the constitution of concreteness by abstraction, while on the other, the expression of a preference for concrete immediacy reveals an anthropocentric bias, since the scale at which something is identified as “proximate” is correlated to our particular phenomenal, human interface.8 If we are to adequately cope with the multiple scales of reality and care for the complex entanglements of our planetary condition, our own generic anthropocentric schemata must be effectively “situated,” which as the historical cascading of Copernican humiliations informs us, is becoming increasingly alienated from the center. We’re ex-centric, and to add a further humiliation, the human can no longer claim a monopoly on intelligence, as Artificial General Intelligence stands to multiply what “intelligence” even means and what it could do. This plight cannot be reduced to a simplistic binary framing, of either a techno-evangelist celebration of human limits being trumped by the “perfection” of computational processes, or by naively insisting on the enduring inflation of human exceptionalism (the very concept of exceptionalism that scaffolds our crises). To reduce this transformation to an either/or option curtails possibilities of enablement on both fronts. 
“Staying with the trouble,” as Donna Haraway proposes, entails a negotiation of the world’s messiness, not a deflation or disavowal of it.9 To stay with the trouble means there is no easy way out, forcing us to navigate through it mesopolitically; not with an “anything goes” attitude, but with careful attention paid to how the “trouble” informs the way we fashion distinctions in the world, for which a clear, fundamental distinction must be drawn between decentering and dehumanization. The decentering of the human does not equal dehumanization; rather, it can simply enable something other. That said, this process will not just “naturally” occur, so to care for and nurture this important non-equation requires a corresponding perspectival recalibration; plotting where we are, in the generic, and provoking a reframing of the human in view of its humiliation. The question before us is not whether we ramp up or reject these developments, but how we will be grasped and alienated by this Copernican trauma and, vitally, what conceptual architectures we will construct to buttress both its enabling and constraining possibilities.

The required subject—a collective subject—does not exist, yet the crisis [eco-catastrophe], like all the other global crises we’re now facing, demands that it be constructed.

—Mark Fisher10

The proliferation of discourses outlining the planetary-scaled operations of our reality points to descriptions where we humans are, despite the grandiose effects of our cumulative interventions, but a mere tiny particle on the imperfectly round surface of the earth.11 These accounts raise a pragmatic, logical, and ethical impediment to claims of agency bound to models of subjectivity subtended by principles of immediate causation, proximity, or “authenticity,” adjudicated by first-person, phenomenological experience alone. Yet just as the decentering of the human does not guarantee dehumanization, these systemic accounts do not mean that the question of subjectivity suddenly disappears, is dissolved by scale, or can be brushed aside absolutely as a relic of past conceptual follies. In recognizing the need for a “collective subject” that could measure up to and transform the impersonal, abstract forces driving the crises of our time (irreducible as they are to moral frameworks of personal responsibility), the subject demanding construction today may, in fact, be far more alien than a purely human composite. Who and what is to compose this collective, and therefore aggregate, subject?

It is telling that Fisher’s succinct observation revolves around the problem of eco-catastrophe; a problem overflowing localisation as much as its intensive forces strike localities. Although unaddressed in his formulation, what these types of problems additionally stress is the need for rigorous epistemic traction and the question of how that purchase can translate both libidinally and concretely. As we become increasingly confronted by such complex local/global objects of ungraspable proportion (both large and minuscule) and since these objects defy the category of concrete proximity and sense experience as a justification for reasoning, this epistemic traction can only be an alienated one. Tackling what we could call “average-objects” entails an engagement with non-presentness (what is not directly available to our senses): these are objects such as the climate, whose residues, like weather, can be experienced, but whose proper existence is one of an abstract mean, being pluri-local, multi-systemic, and (at least anthropocentrically) generational in temporality. We need only recall the inane justifications for the “hoax” of climate change, with the presentation of a snowball as “proof” on the US Senate floor, to exemplify how the conflation of the particular with the mean (and vice-versa) leads only to dead-ends (when not outright stupidity).12 Because these “average-objects” elude presentness, getting a foothold on them requires computational intervention; they require modelling and diagrammatic articulation to make them amenable to cognition. The type of collective-subject required to politicize these complex objects is therefore not just a call for maximal human solidarity, slicing through regimes of individualism and alienating subjectivity from selfhood, but also one that accommodates a non-human, computational constituency.13

To insist on cognitive tractability is not to insinuate that these types of complex objects can be fully known, perfectly modelled, controlled or mastered; nor is it to say that knowing more about them directly equates with improved actions or strategies. Mechanical causality is long gone. Knowing for certain that we can never fully know or determine such objects, what they do render increasingly transparent is the necessity to mobilize this integral uncertainty. In an effort to combat the correlation of uncertainty with inaction (of suspending action while waiting for absolute certainty from the sciences that will never come), Wendy Hui Kyong Chun has stressed we should neither “celebrate nor condemn […] scientific models that are necessary to engage with the invisible, inexperienceable risks,” and instead treat these (always imperfect) computational models as “hypo-real tools, that is, as tools for hypothesis.”14 Chun’s central call is for uncertainty to become an enabler of activity because of the capacity of the hypothetical, rather than instrumentalized for inertia. Politicizing uncertainty via the capacities of the hypothetical does not entail a boorish leap towards the unknown as we have already seen in the celebratory hijacking of “uncertainty” qua complexity from the worst of post-modern politics that champion infinite potentiality without qualification.15 As a bridge to the could be, the hypothetical is not a mere leap of faith, but is a reasoned construction of a conceptual horizon (as actual horizons do not exist), orienting us as we move through and work with that which is not directly observable.16 If inductive inference helps us forge cognitive schema over what is observable, it is a mode of reasoning the existent. Hypotheses, on the other hand, enact abductive reasoning, providing narrative perspectives for that which exceeds (or evades) observation and/or known (accountable) reality. 
The hypothetical opens access to the inexistent, or what could be otherwise, as a novel diagram upon which to maneuver anew. With (generative) alienation describing the separation from what is to what could be, as the articulation of uncertainty’s enablement, the hypothetical is its primary vehicle.

To innovate the agency of the User is then not just to innovate the rights of humans who are Users, but also to innovate the agency of the machines with which the user is embroiled.

—Benjamin Bratton17

As the complexity of our epistemic and material environment increases, interfacing the human with computational capacities will become increasingly indispensable for considered actions and transformative mobility. This marks a potential, one that is irreducible to valences of pure reverence or denunciation. It is volatile, risky, and subject to misuse, which is why politicizing the instrumentalization of this suture is of unrelenting consequence. Because human/machinic coupling is mutually transformative, the perspectival concepts baked into computational design require acute attention and intervention for the quality of activities they facilitate. This is as much a “site” of labor for the humanities as it is for STEM, despite the current, severe power imbalance between the meta-disciplines. Exemplary is how the online world today is, as Chun brilliantly frames it, largely defined by structures of segregation through the applied concept of “homophily” in network-design. The concept that “birds of a feather flock together,” that “like breeds like,” etc., enables the now infamous echo chambers, along with their very material, offline ramifications.18 Homophilic network design is essentially the automation of familiarity, upheld by a particular conceptual (sociological) predisposition. When encounters and confrontations with the foreign and the strange are algorithmically prohibited, alienation has, in a distorted, unintended sense, been overcome. There has been little to celebrate in this instance of alienation’s overcoming. 
If changeless change continues to epitomize our condition, it is only reified by the automation of familiarity, and the price we collectively pay for this initial bias of “similarity breeding connection” is captured by our deficiency in hypothesizing new perspectives.19 Hegel was right: familiarity does obstruct possibility.20 If homophily is the algorithmic mimicry of human behavior, perhaps what is asked for in Bratton’s call for the innovation of machinic agency is the conception of a xenophilic perspectivalism, one that is decoupled from anthropocentric machismo (which has been culpable of the worst dehumanizations) and capable of denaturalizing human habits bound to parochial immediacy. If we are to confront, and not blindly deny, the Copernican humiliation upon our human “situation” as a generatively alienating force, it’s time we become aggregated by xenophilic influences, remap socio-political diagrams, and enable desirable coexistence.

Sebastian Olma, In Defense of Serendipity: For a Radical Politics of Innovation (London: Repeater Press, 2016). Solutionism, as Evgeny Morozov identifies it, essentially turns all problems into techno-scientific ones, rather than social, normative or governmental ones. Ian Tucker, “Evgeny Morozov: ‘We are abandoning all the checks and balances’” in The Guardian, 9 March, 2013, ➝.

For an account of Wilfrid Sellars’ “Myth of the Given” see “Slaves to the Given”, >ect 8, Podcast, ➝.

Ray Brassier, “The View from Nowhere”, in Identities: Journal of Politics, Gender and Culture Vol. 8, No. 2, Summer 2011, 6–23.

That conceptual predispositions are baked into our increasingly automated, computationally driven reality, reveals a contingent parity between the techno-scientific domains (STEM) and the humanities that demands leveraging. This constitutive parity, between disciplines of knowledge, does not mean, however, that they factor equally, socially or economically as anyone trying to fund an arts/humanities program can attest. Much like the axiom of equality Jacques Rancière puts forth in Disagreement, where he claims that the only way there is order in society is because there are those who give commands, and those who obey those commands; but crucially, for this structure of dominance to perpetuate, one needs to understand the command and understand that one must obey it – and this, he asserts, is the necessary contingency of ‘equality’ at the root of inequality. Politics, as such, is when this contingency of equality is claimed and made actionable as the demonstration of parity. Although Rancière’s formulation concerns exclusively human agents, the concept remains useful to extrapolate upon knowledge domains finding themselves powerless, merely ‘obeying’ the commands of STEM as institutions of knowledge optimize for competitive relevance – an adaption only fitting the organization of what is. See: Jacques Rancière, Disagreement: Politics and Philosophy, trans. Julie Rose, (Minneapolis: University of Minnesota Press, 1999) 16.

Claire Lehmann, “Color Goes Electric”, in Triple Canopy Issue #22, May 2016, ➝.

Helen Hester, “Technically Female: Women, Machines and Hyperemployment”, Salvage, August 2016, ➝.

Walter Kaufmann, “The Inevitability of Alienation” in Richard Schacht, Alienation (London: George Allen and Unwin Ltd., 1971) xiii-xvi.

The concrete assumes the abstract as a condition of its enablement, which is to say there is always a dynamic imbroglio between the territory and the map, materiality and ideality. See Ray Brassier, “Prometheanism and Real Abstraction”, in Speculative Aesthetics (Falmouth: Urbanomic, 2014) 72-77, and Glass Bead, “Castalia, the Game of Ends and Means” editorial, in Glass Bead Journal, Site 0, February 2016, ➝.

Donna J. Haraway, Staying with the Trouble: Making Kin in the Chthulucene (Durham: Duke University Press, 2016).

Mark Fisher, Capitalist Realism: Is There No Alternative? (London: Zero Books, 2009), 66.

See: Keller Easterling, Extrastatecraft: The Power of Infrastructure Space (London: Verso, 2015); Alberto Toscano, “Logistics and Opposition” in Mute, Vol 3., No. 2 (2011), ➝; Benjamin H. Bratton, The Stack: On Software and Sovereignty, (Cambridge: MIT Press, 2015).

Jeffrey Kluger, “Senator Throws Snowball! Climate Change Disproven!” in Time, 27 February 2015, ➝.

Ibid., Brassier, 2011.

Wendy Hui Kyong Chun, “On Hypo-Real Models or Global Climate Change: A Challenge for the Humanities” in Critical Inquiry, Vol. 41, No. 3 (Spring 2015), (Chicago: University of Chicago Press), 675-703.

Mario Carpo, “The Alternative Science of Computation”, in Artificial Labor (e-flux Architecture, 2017), ➝.

Stathis Psillos, ‘An Explorer upon Untrodden Ground: Peirce on Abduction’, in Handbook of the History of Logic Volume 10, eds. John Woods, Dov Gabbay and Stephan Hartmann. (Amsterdam: Elsevier, 2011), 115-148.

Ibid., Bratton, 348.

Wendy Hui Kyong Chun, “The Middle to Come”, Panel discussion, transmediale: ever elusive, Berlin, 5 February, 2017.

Miller McPherson, Lynn Smith-Lovin, and James M Cook, “Birds Of A Feather: Homophily in Social Networks”, in Annual Review of Sociology Vol. 27, August 2001, 415-444.

Ibid., Kaufmann.

Artificial Labor is a collaboration between e-flux Architecture and MAK Wien within the context of the VIENNA BIENNALE 2017 and its theme, “Robots. Work. Our Future.”
