Robotics has an anthropomorphic obsession. We build robots in the image of ourselves, but think of them more as objects that manipulate other objects. In a utopian scenario, these anthropomorphic robots take over strenuous, inhuman labor to free humans for higher tasks and leisure, whereas in a dystopian one, they become a threat to humans, taking away their livelihood, outpacing and outdoing them, step by step, in every area of human ability and labor, both physical and mental.

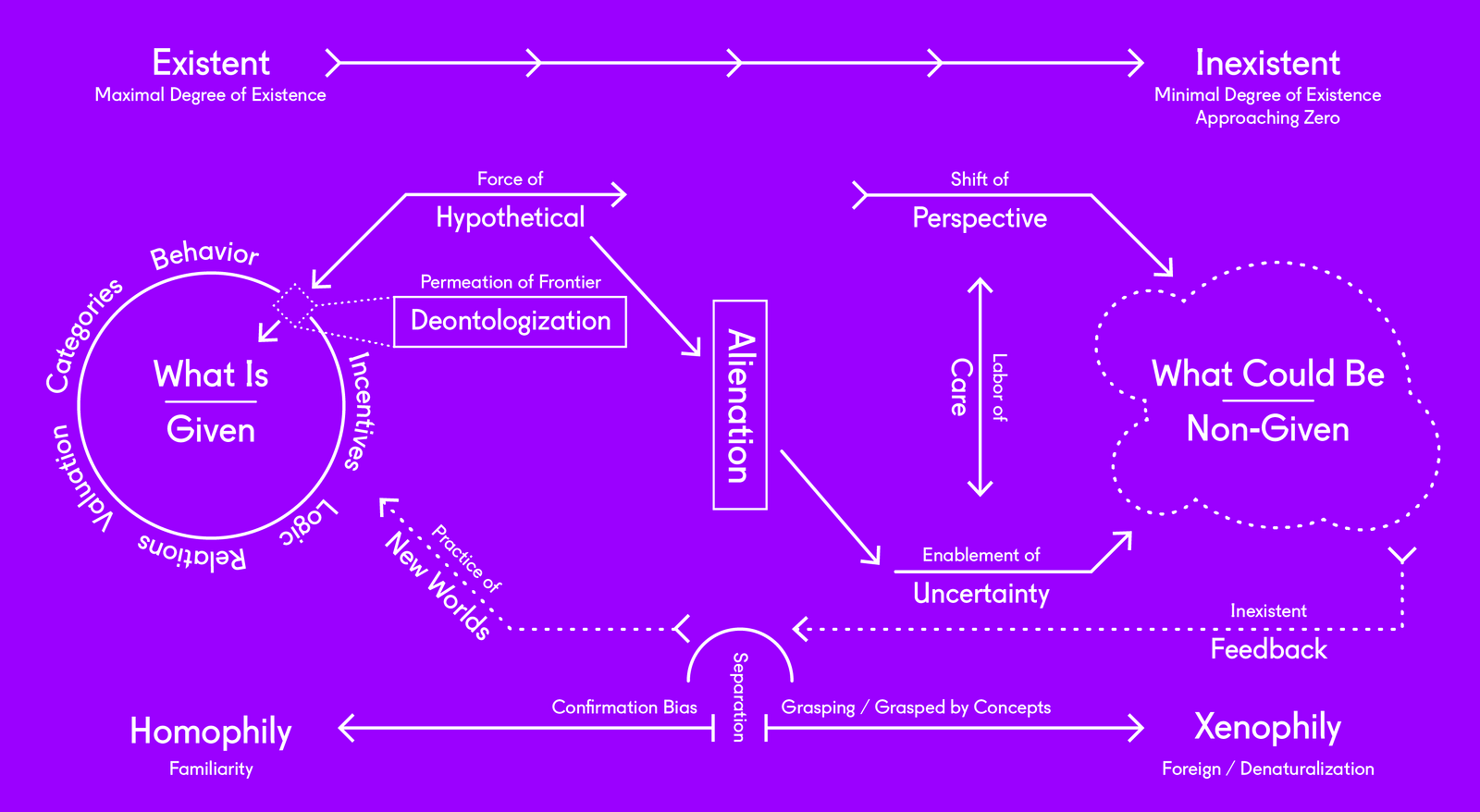

An alternative to this projected threat lies in the development of domain-specific robotics, such as architectural robotics at the scale of buildings. This is a reality in which the focus of robotics is no longer solely on the materiality of the object, but rather on techniques for manipulating immaterial patterns of spatial inhabitation. Further change comes with the shift of complexity from the mechanical towards the algorithmic and machine learning. Shifting complexity from the level of the physical machine to the level of control allows for the integration of a much more heterogeneous set of controls at multiple scales.

Complexity itself resists design in its ever-evolving and emergent configurations, and quickly renders any attempt to resolve it obsolete. Yet with the open-ended possibilities emerging from the fusion of adaptive computation and the physical environment, design does not lock down solutions, but rather works at a systemic level to invent novel ways of using pre-existing and newly developed physical systems. Control can evolve by linking the physical hardware of buildings to continuously evolving behavior with sensor inputs.

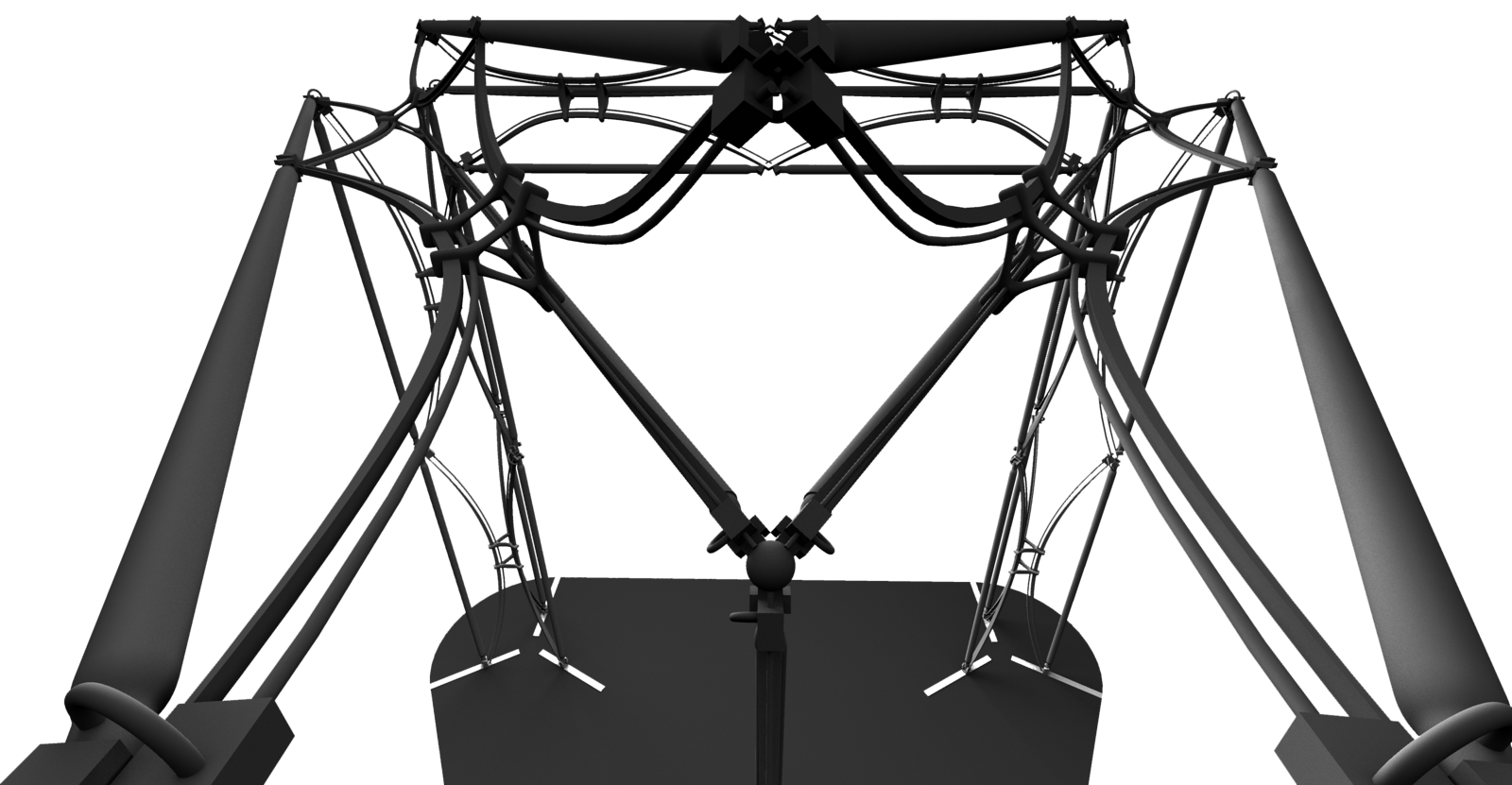

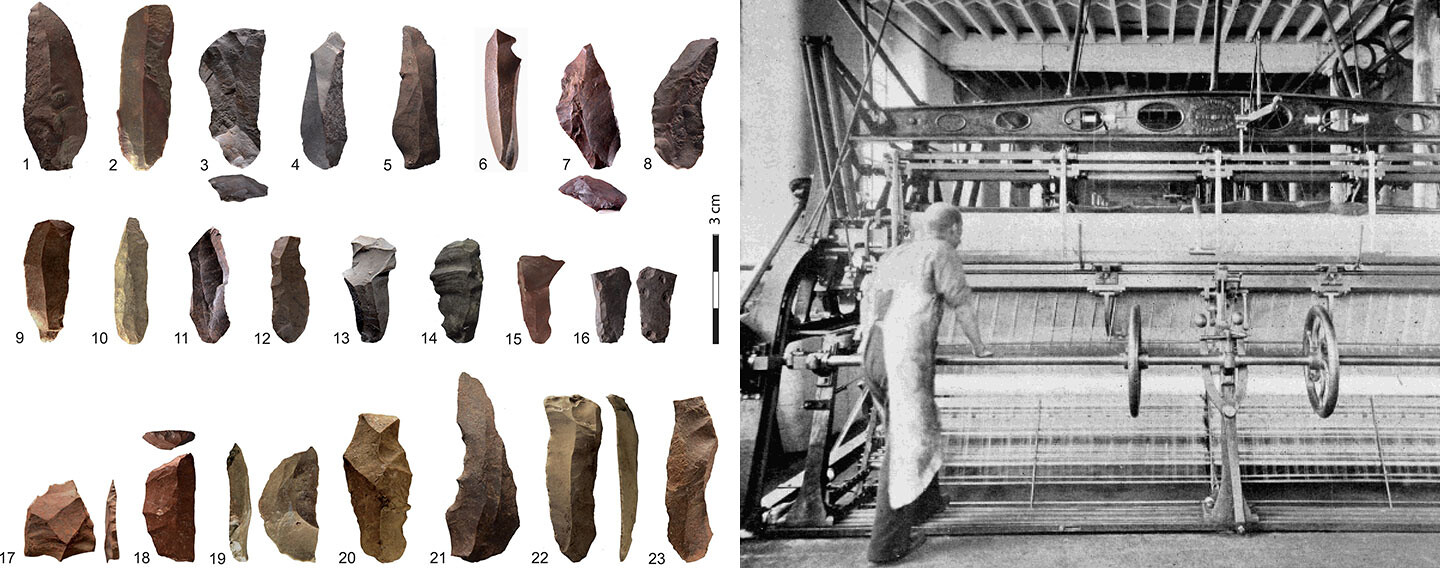

Installation of Flexing Room at the Seoul Biennale of Architecture and Urbanism 2017.

Take, for example, a standard mechanical forced-air ventilation system that manipulates an isolated interior air volume to maintain constant temperature by reading a network of thermostats. This mechanical, analog form of computation is engineered into the system’s physical structure, making it both robust and generic, but also limiting it to this single purpose. Conversely, if actuators are placed on all operable elements such as doors and windows of a building, supervised machine learning could potentially lead to the discovery of new configurations of the building’s architectural apertures for ventilation. The combination of physical and digital computation in building systems effectively turns architecture into a form of embodied computation. Design can thus continue to operate at a systemic level throughout the lifetime of a building, discovering emergent and useful ways to use pre-existing physical systems.

Humans are always in a spatially mediated relation to buildings. Explicitly conceiving of buildings as environmental modulators could extend control from air to space itself, differentiating social scenarios and shaping collective social interaction. If the focus of architecture shifts from passively housing human actions towards enacting spatial agendas through buildings, an autonomous architecture may emerge.1 Shying away from the highly loaded and burdened significance “autonomy” has carried in architectural discourse since the 1970s, I propose to understand the term as it is used in the contemporary context of technology, such as in “autonomous cars” that acquire, and require, a degree of independence in decision-making through algorithmic design and machine learning. Automation is not equal to autonomy, and autonomous machines rely on automatic processes in order to execute their agenda. But while an automatic system’s responses are known and fixed, an autonomous system is open-ended and independent in its self-governing decision-making process. As degrees of freedom increase and architectural and urban scenarios get more complex, autonomy cannot stay confined to the scale of the object.

When architecture becomes robotic, its autonomy means that the design process must extend beyond schematics, design development, and construction, and into the lifespan of the building, becoming a learning process in the context of its environment. Design is therefore not to be understood as an isolated process at the beginning of a sequence that entails fabrication and inhabitation, but rather treated as one continuous process, linking the design process with the process of use. The term “embodied computation” stands for the expansion of computation as an abstract, predominantly computational process into a hybrid physical-computational construct. Computation needs to break out of the limitations of simply describing the object and reach into the realm of lived-in architecture, thus enabling autonomous architectural robotics.

Precedents in architectural robotics range from the visions of Cedric Price’s Fun Palace to contemporary experiments of the Hyperbody Group at TU Delft, among others.2 One important reference with a strong emphasis on AI-based human-building interaction is the ADA intelligent room project from 2002 by the Institute of Neuroinformatics, ETH Zurich, which exhibited the beginnings of autonomy by shaping people’s behavior during their visit to the Expo.3 The goal today is to push beyond interactivity and develop architecture as an autonomous agent that actively shapes human behavior.

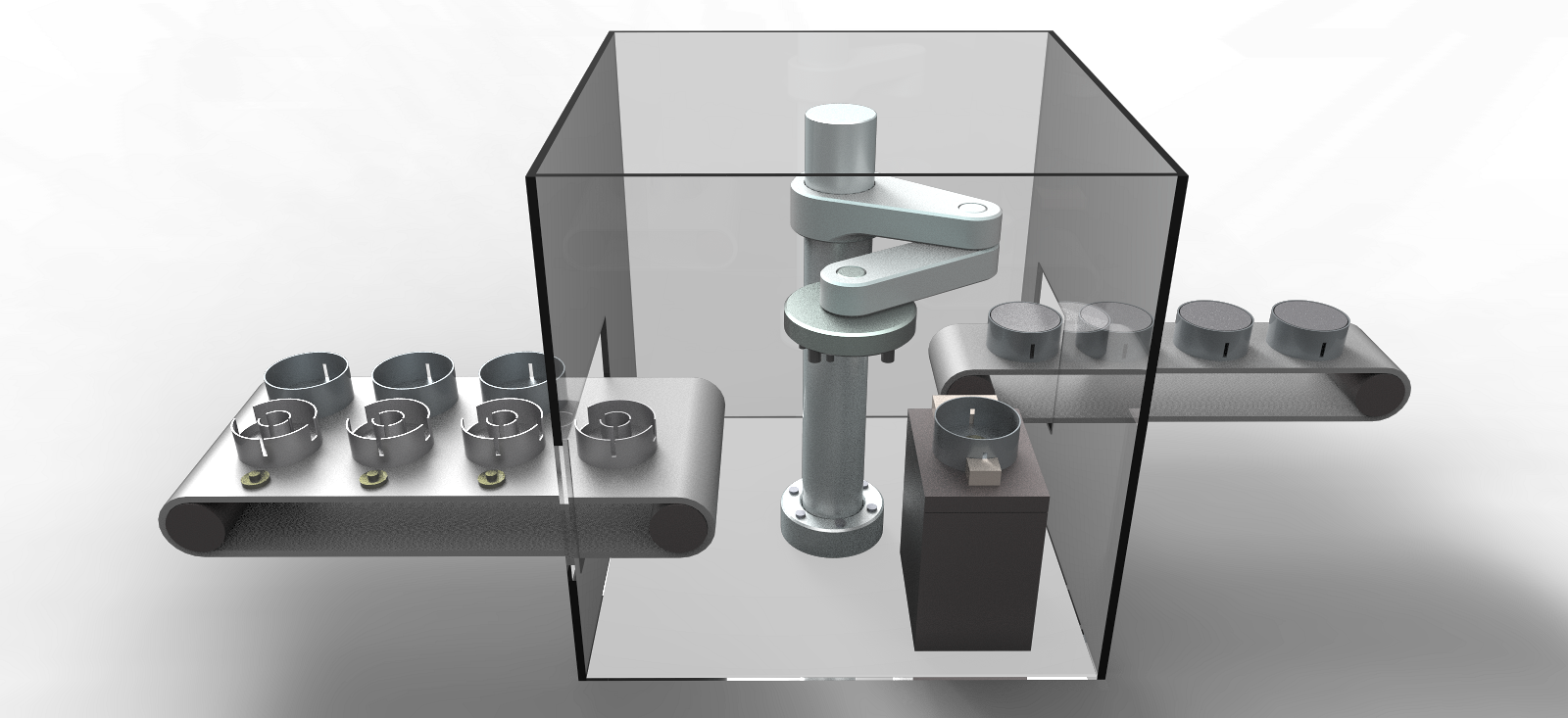

In contrast to the dominant research focus in architectural robotics today on construction automation through industrial robot arms, embodied computation approaches architecture itself as a robotic entity for experimentation. One example, The Bowtower, is an experimental prototype that uses its physical structure to solve a mathematical function with six parameters, where the incline angle of the tower is the function’s return value.4 The six parameters are the different air pressures in the actuators, which cause them to contract and lean the tower. To level the tower, a search algorithm looks for a minimum of the function by sequentially running different sets of air pressures on the tower and monitoring the returned angle, driving it towards zero degrees. The control of the tower’s behavior is based solely on feedback through the physical structure and not on a computational simulation. The absence of a simulation model makes the tower’s control robust against unpredictable changes such as defects in the structure or human intervention. Without a codependent link between simulation and structure that could get out of sync, the changes are simply compensated through sensor feedback. This concept has been expanded to a 36-actuator structure the size of a small room in the Flexing Room, a skeletal enclosure that counts the presence of people with a series of postures it strikes over time. The simple leaning angle of the tower is replaced with a rudimentary form of social feedback based on the occupation of the room. The robot has turned from an object into an enclosure.
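The leveling procedure described above can be illustrated with a minimal sketch. It is not the Bowtower’s actual control code: the `SimulatedTower` class, its hidden gains, the pressure range, and the simple accept-if-better random search are all assumptions made for illustration. What the sketch tries to preserve is the central idea that the search never consults a model of the structure; it only applies six pressures and reads back an angle.

```python
import random

NUM_ACTUATORS = 6  # the six air pressures mentioned in the text


class SimulatedTower:
    """Hypothetical stand-in for the physical tower (an assumption, not the real hardware)."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        # Hidden gains and a built-in lean; the search below never reads these values.
        self._gain_x = [rng.uniform(-1.0, 1.0) for _ in range(NUM_ACTUATORS)]
        self._gain_y = [rng.uniform(-1.0, 1.0) for _ in range(NUM_ACTUATORS)]
        self._bias = (rng.uniform(-2.0, 2.0), rng.uniform(-2.0, 2.0))

    def incline_angle(self, pressures):
        """Apply six pressures and return the measured incline in degrees (>= 0)."""
        x = self._bias[0] + sum(g * p for g, p in zip(self._gain_x, pressures))
        y = self._bias[1] + sum(g * p for g, p in zip(self._gain_y, pressures))
        return (x * x + y * y) ** 0.5


def level_tower(tower, steps=2000, step_size=0.5, max_pressure=5.0, seed=1):
    """Model-free search: try pressure sets one after another, keep any that lean less."""
    rng = random.Random(seed)
    best = [max_pressure / 2] * NUM_ACTUATORS
    best_angle = tower.incline_angle(best)
    for _ in range(steps):
        # Perturb the best-known pressures and clamp them to the allowed range.
        candidate = [
            min(max_pressure, max(0.0, p + rng.uniform(-step_size, step_size)))
            for p in best
        ]
        angle = tower.incline_angle(candidate)  # the only feedback is the sensor reading
        if angle < best_angle:
            best, best_angle = candidate, angle
    return best, best_angle


tower = SimulatedTower()
start = tower.incline_angle([2.5] * NUM_ACTUATORS)
pressures, angle = level_tower(tower)
print(f"start {start:.2f} deg, after search {angle:.2f} deg")
```

Because the loop depends only on measured angles, a change to the structure (in this sketch, different hidden gains) would be absorbed by running the search again rather than by repairing a simulation model.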

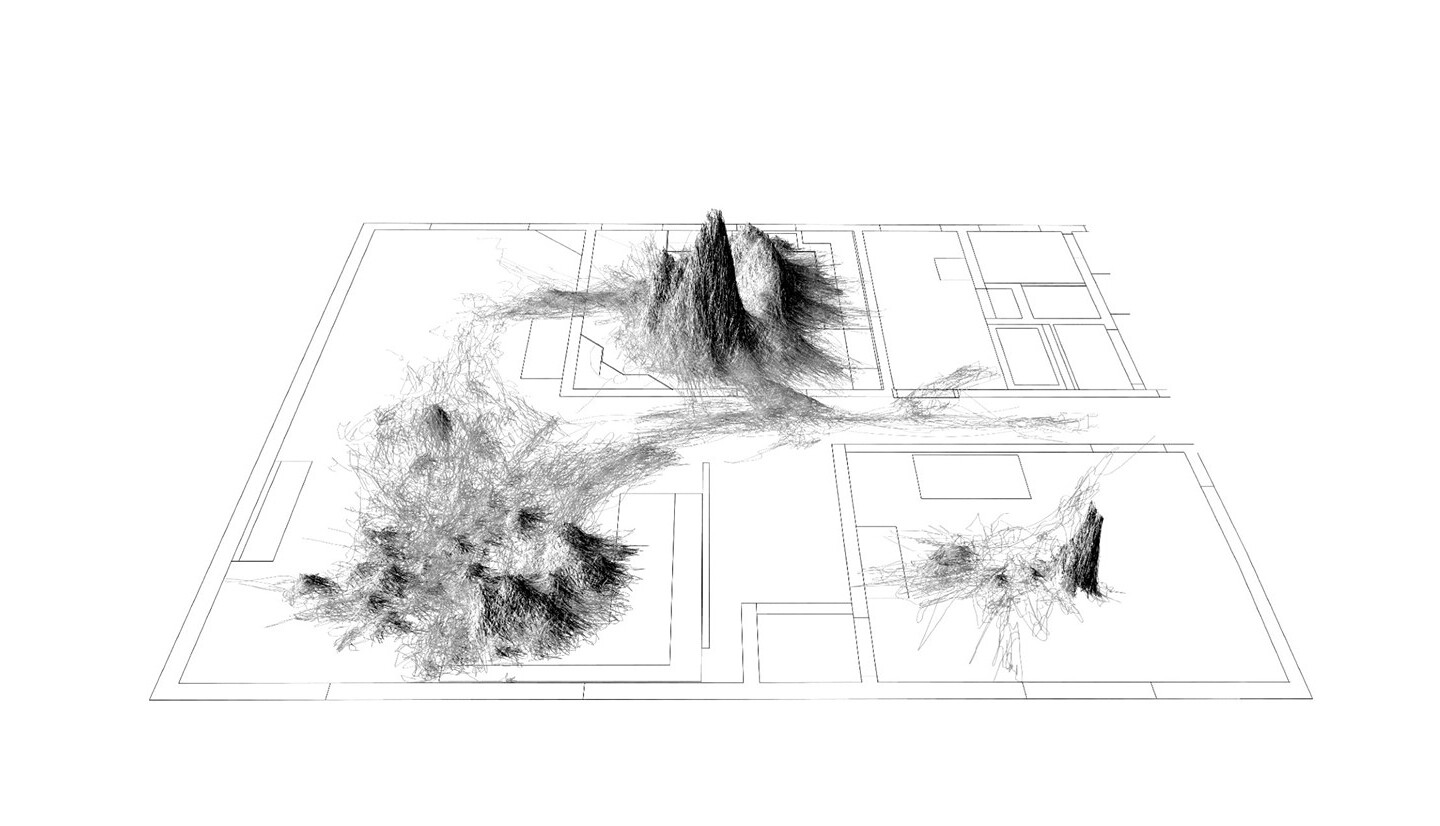

In order to speculate about the potential of autonomous architectural robotics, we must consider what buildings might be able to perceive. To this end we can look to Deb Roy’s 2013 study of his child’s language development.5 Roy, director of the MIT Media Lab’s Laboratory for Social Machines, used overhead cameras to record and map all sounds from his family’s domestic environment during the first years of their child’s development. The study allowed for the precise tracing and identification of every utterance spoken in both space and time. It revealed the spatial clustering of certain words, such as the word “water” in the architectural context of the kitchen. While analysis only happened after data collection had concluded, it is fascinating to think about the possibility of architecture responding based on an active understanding of how the floor plan and program shape inhabitants’ experiences.

A “wordscape” showing the birth of a word by mapping the data related to every utterance of the word “water” in Deb Roy’s home. Image: Philip DeCamp/Deb Roy.

The fine granularity of Roy’s spatial tracking within each room and time, combined with situated acoustic recordings, is a reminder of how simplistic the current accessory-based automation of private homes is. Networked virtual assistants such as Alexa and Google Home occupy the acoustic interface spectrum with speech-driven human-artificial intelligence communication in millions of households today, and developments for their integration into other domestic control systems are well underway. While fascinating on the level of consumer products, their development is not driven by any notion of architectural potential or by engaging the spatial dimension of built form. This form of domestic automation is limited to the retrofitting of an assumed, generic space, and ignores the subtle compositional and proportional variations that inherently dwell within architecture. While the Nest Thermostat is more conceptually powerful in how it learns from the user’s behaviors over time, it has yet to become holistically integrated into the idea of architecture itself. Yet with the iRobot Company, makers of the Roomba vacuums, proposing to sell automatically generated floor plans of its users’ homes to home automation firms like Google or Amazon, efforts to integrate these consumer products more precisely into architectural contexts are emerging.6

Despite speech-based advances, man-machine interaction is still dominated by screen-based interfaces. But the idea of robots as buildings, or buildings as robots, offers the possibility of using space itself as an interface for objects that are easily tens or even hundreds of times larger than the human body and defy the simple object-to-object interface paradigm. Architecture operates at a scale at which there would rarely ever be a one-on-one interaction between human and architectural robot through a single interface. Instead, an autonomous architectural robotics potentiates innumerable, concurrent, and disparate human-robot interactions all with the same machine: the building. Within this ecology of exchange, merely being situated in space becomes a form of expression.

If we assume our social media activity reveals much about ourselves, the next frontier is that of the spatial resolution of inhabitation. It is crucial that architects shape this development with architectural sensibilities and a critical stance to preserve the autonomy of architecture both in a technological sense and also in the sense of resisting the outright exploitation of architectural space for commercial interests. Architectural robots can vastly expand the reach of architectural design.

An inherent question related to all autonomous systems is whose agenda they follow. But we could also ask whether the agenda is designed into the physical constructs of the system, or rather emerges in relation to its contextual environment. Architecture has never been neutral; rooms that can serve as shelter can also, as history has shown, become space for imprisonment.

See: Keith Evan Green and Mark D. Gross, “Architectural Robotics, Inevitably,” Interactions Magazine Vol 19, Issue 1 (Jan–Feb, 2012); Michael Fox and Miles Kemp, Interactive Architecture (Princeton Architectural Press, 2009); Tristan de Estree Sterk, “Using Actuated Tensegrity Structures to Produce a Responsive Architecture,” in Connecting >> Crossroads of Digital Discourse: Proceedings of the 2003 Annual Conference of the Association for Computer Aided Design in Architecture (Indianapolis, Indiana: Ball State University, 2003), 85–93.

Kynan Eng, Rodney J. Douglas, and Paul F. M. J. Verschure, “An Interactive Space That Learns to Influence Human Behavior,” IEEE Transactions on Systems, Man, and Cybernetics - Part A: Systems and Humans, Volume: 35, Issue 1 (Jan, 2005).

The Bowtower was developed by the author as a small scale experimental prototype at the School of Architecture at Princeton University between 2013 and 2017. It is a further development of the WhoWhatWhenAir tower developed at MIT. See: Axel Kilian, Philippe Block, Peter Schmitt, and John Snavely, “Developing a Language for Actuated Structures.” Adaptables Conference (Eindhoven: 2006).

Brandon C. Roy, Michael C. Frank, Philip DeCamp, Matthew Miller and Deb Roy, “Predicting the Birth of a Spoken Word,” Proceedings of the National Academy of Sciences of the United States of America (2015).

See ➝.

Artificial Labor is a collaboration between e-flux Architecture and MAK Wien within the context of the VIENNA BIENNALE 2017 and its theme, “Robots. Work. Our Future.”
